By design, files inside a Docker container are stored only temporarily: when the container is deleted, the data inside it is deleted as well. However, we can pass the --volume/-v parameter to docker run to mount a host path into the container, so that data the container writes under the mount point is stored on the host. This ensures that the data saved to the host path still exists even after the container is deleted.

In Docker, mounting a volume is enough to solve the persistent storage problem, but the storage problems K8s faces are much more complicated. A K8s cluster usually spans multiple hosts, so if a container orchestrated by K8s fails or its node goes down, K8s may recreate the Pod on another node, where the directory mounted from the original host is no longer available.

Of course, these limitations of K8s container storage can be addressed by adding a layer of abstraction over storage resources. In K8s, this layer of abstraction is called a volume.

The volumes supported by K8s can basically be divided into three categories: configuration information, temporary storage, and persistent storage.

Configuration information

Almost every application uses configuration files or startup parameters. K8s abstracts configuration information into several resources, mainly the following three:

ConfigMaps

Secret

Downward API

ConfigMaps

A ConfigMap volume holds one or more key: value pairs and is mainly used to store application configuration data. A value can be a literal or the content of a configuration file.

However, ConfigMaps are not designed to hold large amounts of data: a ConfigMap cannot hold more than 1 MiB (mebibyte) of data.

ConfigMaps can be created in two ways:

Created via the command line

Created by yaml file

Created via the command line

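When creating a ConfigMap on the command line, a key: value pair can be given literally through the --from-literal parameter or loaded from a file through the --from-file parameter. In the following example, foo=bar is the literal form, and bar=bar.txt is the configuration file form: the key is bar and the value is the content of bar.txt.

$ kubectl create configmap c1 --from-literal=foo=bar --from-file=bar=bar.txt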

The content of bar.txt is as follows:

baz

Use the kubectl describe command to view the content of the newly created Configmap resource named c1.

$ kubectl describe configmap c1
Name:         c1
Namespace:    default
Labels:       <none>
Annotations:  <none>

Data
====
bar:
----
baz

foo:
----
bar

Events:  <none>
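The difference between a literal value and a file value can be modelled in a few lines of Python. This is only an illustrative sketch, not how kubectl is implemented: the helper names from_literal, from_file, and build_demo_data are hypothetical, and bar.txt is recreated in a scratch directory.

```python
import os
import tempfile
from typing import Dict, Tuple


def from_literal(arg: str) -> Tuple[str, str]:
    """Model of a key=value literal: the value is taken verbatim."""
    key, value = arg.split("=", 1)
    return key, value


def from_file(arg: str) -> Tuple[str, str]:
    """Model of a key=path file entry: the value is the file's content."""
    key, path = arg.split("=", 1)
    with open(path, encoding="utf-8") as fh:
        return key, fh.read()


def build_demo_data() -> Dict[str, str]:
    # Recreate the article's bar.txt (content: baz) in a scratch directory.
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "bar.txt")
        with open(path, "w", encoding="utf-8") as fh:
            fh.write("baz")
        return dict([from_literal("foo=bar"), from_file("bar=" + path)])


print(build_demo_data())
```

Passing bar=bar.txt as a literal would store the text bar.txt itself as the value, which is why the file form is needed to get baz.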

Created by yaml file

Create configmap-demo.yaml with the following content:

kind: ConfigMap
apiVersion: v1
metadata:
  name: c2
  namespace: default
data:
  foo: bar
  bar: baz

Apply this file with the kubectl apply command.

$ kubectl apply -f configmap-demo.yaml
$ kubectl get configmap c2
NAME   DATA   AGE
c2     2      11s
$ kubectl describe configmap c2
Name:         c2
Namespace:    default
Labels:       <none>
Annotations:  <none>

Data
====
foo:
----
bar

bar:
----
baz

Events:  <none>

The result is no different from a Configmap created via the command line.

Example of use

After creating the Configmap, let’s see how to use it.

There are two ways to use the created Configmap:

Referenced by environment variables

Create use-configmap-env-demo.yaml with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: "use-configmap-env"
  namespace: default
spec:
  containers:
    - name: use-configmap-env
      image: "alpine"
      # Reference a single value at a time
      env:
        - name: FOO
          valueFrom:
            configMapKeyRef:
              name: c2
              key: foo
      # Reference all values at once
      envFrom:
        - prefix: CONFIG_ # prefix added to every referenced key
          configMapRef:
            name: c2
      command: ["echo", "$(FOO)", "$(CONFIG_bar)"]

You can see that we have created a Pod named use-configmap-env, whose container refers to the content of the ConfigMap in two ways.

The first is spec.containers.env, which passes a single key: value pair of a ConfigMap to the container. Here, valueFrom.configMapKeyRef indicates that the value comes from a ConfigMap: the ConfigMap is named c2, and the referenced key is foo.

The second is spec.containers.envFrom. You only need to give the name of the ConfigMap through configMapRef.name, and all of its key: value pairs are passed to the container at once. The optional prefix adds a common prefix in front of every referenced key.

The container’s startup command is echo $(FOO) $(CONFIG_bar), which prints the ConfigMap values referenced through env and envFrom respectively.

# Create the Pod
$ kubectl apply -f use-configmap-env-demo.yaml
# Check the Pod log to see the ConfigMap values referenced inside the container
$ kubectl logs use-configmap-env
bar baz

The results show that the content of Configmap can be referenced by environment variables inside the container.
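To make the mapping from ConfigMap keys to environment variables concrete, here is a small Python model of it. It is a sketch under stated assumptions rather than the kubelet's implementation: container_env and expand are hypothetical helpers, the CONFIG_ prefix and the FOO name are taken from the Pod above, and the escaped form of a reference is not handled.

```python
import re

CONFIGMAP_C2 = {"foo": "bar", "bar": "baz"}


def container_env(configmap):
    """Build the container's environment the way the example Pod declares it."""
    env = {}
    # envFrom: every key of the ConfigMap, with the CONFIG_ prefix in front.
    for key, value in configmap.items():
        env["CONFIG_" + key] = value
    # env: a single key under an explicit name; applied last so it wins on a clash.
    env["FOO"] = configmap["foo"]
    return env


_REFERENCE = re.compile(r"\$\(([^)]+)\)")


def expand(args, env):
    """Replace $(NAME) references; unknown names are left untouched."""
    return [
        _REFERENCE.sub(lambda m: env.get(m.group(1), m.group(0)), arg)
        for arg in args
    ]


env = container_env(CONFIGMAP_C2)
print(expand(["echo", "$(FOO)", "$(CONFIG_bar)"], env))
```

The expanded command is echo bar baz, which matches the Pod log shown above.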

Referenced by volume mount

Create use-configmap-volume-demo.yaml with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: "use-configmap-volume"
  namespace: default
spec:
  containers:
    - name: use-configmap-volume
      image: "alpine"
      command: ["sleep", "3600"]
      volumeMounts:
        - name: configmap-volume
          mountPath: /usr/share/tmp # mount directory inside the container
  volumes:
    - name: configmap-volume
      configMap:
        name: c2

Here a Pod called use-configmap-volume is created. The container's mounts are specified through spec.containers.volumeMounts: name gives the name of the volume to mount, and mountPath gives the path inside the container where it is mounted (that is, the ConfigMap is mounted to that directory inside the container). spec.volumes declares the volume, and configMap.name gives the name of the ConfigMap that the volume references.

Then you can use the following command to create a Pod and verify whether the Configmap can be referenced inside the container.

# Create the Pod
$ kubectl apply -f use-configmap-volume-demo.yaml
# Open a shell inside the Pod's container
$ kubectl exec -it use-configmap-volume -- sh
# Go to the mounted directory
/ # cd /usr/share/tmp/
# List the files in the mounted directory
/usr/share/tmp # ls
bar foo
# View the file contents
/usr/share/tmp # cat foo
bar
/usr/share/tmp # cat bar
baz

After the Pod is created, you can enter the container through the kubectl exec command. In the container's /usr/share/tmp/ directory, you can see two text files (foo and bar) named after the keys in the ConfigMap, and the content of each file is the value of the corresponding key.

The above are two ways to inject the contents of the Configmap into the container. Applications inside the container can use configuration information by reading environment variables and file content respectively.
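The key-to-file mapping used by the volume mount can be sketched in Python as follows. This is a simplified model that writes plain files into a scratch directory; the real kubelet mechanism is more involved, and project_configmap and demo are hypothetical names.

```python
import os
import tempfile


def project_configmap(data, mount_path):
    """Write one file per key; the file content is the key's value."""
    os.makedirs(mount_path, exist_ok=True)
    for key, value in data.items():
        with open(os.path.join(mount_path, key), "w", encoding="utf-8") as fh:
            fh.write(value)


def demo():
    # A scratch directory stands in for /usr/share/tmp inside the container.
    with tempfile.TemporaryDirectory() as mount_path:
        project_configmap({"foo": "bar", "bar": "baz"}, mount_path)
        files = sorted(os.listdir(mount_path))
        contents = {}
        for name in files:
            with open(os.path.join(mount_path, name), encoding="utf-8") as fh:
                contents[name] = fh.read()
        return files, contents


print(demo())
```

An application would then read its configuration with ordinary file I/O, exactly as the cat commands above do.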

Secret

Now that you are familiar with ConfigMap, let’s look at Secret. Secret volumes are used to pass sensitive information, such as passwords and SSH keys, to Pods. A Secret is very similar to a ConfigMap, except that the stored data is base64 encoded. Note that base64 is an encoding, not encryption.

Secret can also be created in two ways:

Created via the command line

Created by yaml file

Created via the command line

Besides specifying key: value pairs through the --from-literal parameter, a Secret can also be loaded directly from a file through the --from-file parameter: the file name becomes the key, and the file content becomes the value.

# The generic subcommand corresponds to the Opaque type, that is, arbitrary user-defined data
$ kubectl create secret generic s1 --from-file=foo.txt

The content of foo.txt is as follows:

foo=bar

bar=baz

You can see that, unlike ConfigMap, creating a Secret on the command line requires specifying a type. In the above example, the generic subcommand creates a Secret of type Opaque, which is also the default Secret type. Secret supports other types as well, which are described in the official documentation. While you are getting started, the default type is enough, since what the other types do can also be achieved with it.

$ kubectl describe secret s1
Name:         s1
Namespace:    default
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
foo.txt:  16 bytes

Another difference from ConfigMap is that kubectl describe shows only the size of each value in bytes, not the data itself. This keeps sensitive values from being displayed by accident, which fits the name Secret.

Created by yaml file

Create secret-demo.yaml with the following content:

apiVersion: v1
kind: Secret
metadata:
  name: s2
  namespace: default
type: Opaque # default type
data:
  user: cm9vdAo=
  password: MTIzNDU2Cg==

Apply this file with the kubectl apply command.

$ kubectl apply -f secret-demo.yaml
$ kubectl get secret s2
NAME   TYPE     DATA   AGE
s2     Opaque   2      59s
$ kubectl describe secret s2
Name:         s2
Namespace:    default
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
password:  7 bytes
user:      5 bytes

The Secret resource is created correctly as well. Note that when a Secret is created from a yaml file, the values under data must be base64 encoded. For example, the user and password specified above are both encoded values:

data:
  user: cm9vdAo=
  password: MTIzNDU2Cg==

Alternatively, the original strings can be supplied through the stringData field, and K8s encodes them when the Secret is stored. The two methods are equivalent. For example:

stringData:
  user: root
  password: "123456"

Relatively speaking, I recommend using base64 encoding.
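The relationship between the plain strings and the encoded values can be checked with Python's standard base64 module. The helper names below are hypothetical. One detail worth noticing: the encoded values in secret-demo.yaml decode to root and 123456 followed by a newline, which is what piping echo output into base64 would produce, and which explains the 5 and 7 byte sizes reported by kubectl describe.

```python
import base64


def encode_secret_value(plain: str) -> str:
    """Encode a plain string the way a value under data must be written."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Decode a value taken from the data field back to plain text."""
    return base64.b64decode(encoded).decode("utf-8")


print(repr(decode_secret_value("cm9vdAo=")))      # 'root\n'
print(repr(decode_secret_value("MTIzNDU2Cg==")))  # '123456\n'
print(encode_secret_value("root"))                # cm9vdA==
print(encode_secret_value("123456"))              # MTIzNDU2
```

If the trailing newline is not wanted, encode the string without it (for example with printf instead of echo on the command line).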

Example of use

In the same way as Configmap, we can also use Secret through environment variables or volume mounting. Take the volume mount method as an example. First create use-secret-volume-demo.yaml with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: "use-secret-volume-demo"
  namespace: default
spec:
  containers:
    - name: use-secret-volume-demo
      image: "alpine"
      command: ["sleep", "3600"]
      volumeMounts:
        - name: secret-volume
          mountPath: /usr/share/tmp # mount directory inside the container
  volumes:
    - name: secret-volume
      secret:
        secretName: s2

That is, create a Pod named use-secret-volume-demo, and the container of the Pod refers to the content of the Secret through volume mounting.

# Create the Pod
$ kubectl apply -f use-secret-volume-demo.yaml
# Open a shell inside the Pod's container
$ kubectl exec -it use-secret-volume-demo -- sh
# Go to the mounted directory
/ # cd /usr/share/tmp/
# List the files in the mounted directory
/usr/share/tmp # ls
password user
# View the file contents
/usr/share/tmp # cat password
123456
/usr/share/tmp # cat user
root

Once mounted inside the container, the content of the Secret is stored as decoded plain text. The application inside the container can use a Secret in the same way as a ConfigMap.

Both ConfigMap and Secret store configuration information: ConfigMap usually holds ordinary configuration, and Secret holds sensitive configuration.

It is worth mentioning that when a ConfigMap or Secret is referenced through environment variables, the values inside the container are not updated automatically when the ConfigMap or Secret changes. When it is referenced through a volume mount, the files inside the container are updated automatically after a change. If the application inside the container supports hot reloading of configuration files, it is recommended to reference the ConfigMap or Secret through a volume mount.
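What hot reloading can look like on the application side is sketched below in Python. It is one possible approach, assumed for illustration only: the ReloadingConfig class is hypothetical, it detects changes by hashing the file content, and the application is expected to call refresh periodically (for example from a timer).

```python
import hashlib
import os
import tempfile


class ReloadingConfig:
    """Re-reads a mounted configuration file when its content changes."""

    def __init__(self, path):
        self.path = path
        self._digest = None
        self.value = None
        self.refresh()

    def refresh(self) -> bool:
        """Return True if the file changed since the last call."""
        with open(self.path, "rb") as fh:
            raw = fh.read()
        digest = hashlib.sha256(raw).hexdigest()
        if digest == self._digest:
            return False
        self._digest = digest
        self.value = raw.decode("utf-8")
        return True


def demo():
    # A scratch directory stands in for the mounted volume.
    with tempfile.TemporaryDirectory() as mount_path:
        path = os.path.join(mount_path, "foo")
        with open(path, "w", encoding="utf-8") as fh:
            fh.write("bar")
        config = ReloadingConfig(path)
        first = config.value
        unchanged = config.refresh()
        # Simulate the mounted file being updated after the ConfigMap changed.
        with open(path, "w", encoding="utf-8") as fh:
            fh.write("qux")
        changed = config.refresh()
        return first, unchanged, changed, config.value


print(demo())
```

An application that reads the value only once from an environment variable has no equivalent of refresh, which is why the volume mount is the better fit for hot reloading.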

Downward API

The Downward API injects information about the Pod object itself into the containers of that Pod.

Example of use

Create downwardapi-demo.yaml with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: downwardapi-volume-demo
  labels:
    app: downwardapi-volume-demo
  annotations:
    foo: bar
spec:
  containers:
    - name: downwardapi-volume-demo
      image: alpine
      command: ["sleep", "3600"]
      volumeMounts:
        - name: podinfo
          mountPath: /etc/podinfo
  volumes:
    - name: podinfo
      downwardAPI:
        items:
          # Expose the Pod's labels
          - path: "labels"
            fieldRef:
              fieldPath: metadata.labels
          # Expose the Pod's annotations
          - path: "annotations"
            fieldRef:
              fieldPath: metadata.annotations

# Create the Pod
$ kubectl apply -f downwardapi-demo.yaml
pod/downwardapi-volume-demo created
# Open a shell inside the Pod's container
$ kubectl exec -it downwardapi-volume-demo -- sh
# Go to the mounted directory
/ # cd /etc/podinfo/
# List the files in the mounted directory
/etc/podinfo # ls
annotations labels
# View the file contents

/etc/podinfo # cat annotations

foo="bar"

kubectl.kubernetes.io/last-applied-configuration="{\"apiVersion\":\"v1\",\"kind\":\"Pod\",\"metadata\":{\"annotations\":{\"foo\":\"bar\"},\"labels\":{\"app\":\"downwardapi-volume-demo\"},\"name\":\"downwardapi-volume-demo\",\"namespace\":\"default\"},\"spec\":{\"containers\":[{\"command\":[\"sleep\",\"3600\"],\"image\":\"alpine\",\"name\":\"downwardapi-volume-demo\",\"volumeMounts\":[{\"mountPath\":\"/etc/podinfo\",\"name\":\"podinfo\"}]}],\"volumes\":[{\"downwardAPI\":{\"items\":[{\"fieldRef\":{\"fieldPath\":\"metadata.labels\"},\"path\":\"labels\"},{\"fieldRef\":{\"fieldPath\":\"metadata.annotations\"},\"path\":\"annotations\"}]},\"name\":\"podinfo\"}]}}\n"

kubernetes.io/config.seen="2022-03-12T13:06:50.766902000Z"

/etc/podinfo # cat labels

app="downwardapi-volume-demo"

As you can see, the Downward API is used in the same way as ConfigMap and Secret: it is mounted inside the container as a volume, and files holding the key: value pairs are generated in the mounted directory. The difference is that the content the Downward API can reference is already defined in the Pod's own yaml file, so nothing has to be created in advance and it can be used directly.
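An application can read these files with a few lines of code. The Python sketch below parses the key="value" lines shown in the output above. It assumes the quoted values can be decoded as JSON strings, which holds for the sample output here but is an assumption rather than a documented guarantee; parse_podinfo is a hypothetical helper.

```python
import json


def parse_podinfo(text):
    """Parse lines of the form key="value" into a dict."""
    result = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        key, quoted = line.split("=", 1)
        # The value is a double-quoted string with backslash escapes.
        result[key] = json.loads(quoted)
    return result


labels = parse_podinfo('app="downwardapi-volume-demo"\n')
print(labels["app"])
```

Inside the container, the input would come from reading /etc/podinfo/labels or /etc/podinfo/annotations.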

Summary

The three volumes ConfigMap, Secret, and Downward API exist not to save data from the container, but to deliver predefined data to the container.

Ephemeral volumes

The next thing we want to focus on is the ephemeral volume, or temporary storage. Ephemeral volumes are created and deleted along with the Pod, following the Pod’s lifecycle. The two most commonly used kinds of temporary storage in K8s are:

EmptyDir

HostPath

EmptyDir

Let’s first look at how emptyDir is used. An emptyDir is comparable to the anonymous (implicit) volume Docker creates when --volume/-v is given only a container path: the host directory is not declared explicitly. K8s creates a temporary directory on the host and mounts it at the mountPath declared by the container.

Example of use

Create emptydir-demo.yaml with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: "emptydir-nginx-pod"
  namespace: default
  labels:
    app: "emptydir-nginx-pod"
spec:
  containers:
    - name: html-generator
      image: "alpine:latest"
      command: ["sh", "-c"]
      args:
        - while true; do
            date > /usr/share/index.html;
            sleep 1;
          done
      volumeMounts:
        - name: html
          mountPath: /usr/share
    - name: nginx
      image: "nginx:latest"
      ports:
        - containerPort: 80
          name: http
      volumeMounts:
        - name: html
          # directory where the nginx container keeps index.html
          mountPath: /usr/share/nginx/html
          readOnly: true
  volumes:
    - name: html
      emptyDir: {}

Here we create a Pod named emptydir-nginx-pod, which contains two containers, html-generator and nginx. html-generator continuously generates an html file, and nginx is a web service that serves the index.html file generated by html-generator.

Specifically, html-generator keeps writing the current time to /usr/share/index.html and mounts a volume named html at its /usr/share directory, while the nginx container mounts the same volume at its /usr/share/nginx/html directory. The two containers thus share files through the html volume.

Now apply this file with the kubectl apply command:

# Create the Pod
$ kubectl apply -f emptydir-demo.yaml
pod/emptydir-nginx-pod created
# Open a shell inside the nginx container of the Pod
$ kubectl exec -it pod/emptydir-nginx-pod -c nginx -- sh
# Request the page served by nginx (the time shown is in UTC)
/ # curl 127.0.0.1
Sun Mar 13 08:40:01 UTC 2022
/ # curl 127.0.0.1
Sun Mar 13 08:40:04 UTC 2022

From the output of the curl 127.0.0.1 command inside the nginx container, we can see that the content of /usr/share/nginx/html/index.html in the nginx container is the content of /usr/share/index.html in the html-generator container.

This works because, when we declare the volume as emptyDir: {}, K8s automatically creates a temporary directory on the host and mounts it into both containers: at /usr/share/ in html-generator and at /usr/share/nginx/html/ in nginx. The two container directories therefore always show the same data.

Note that a container crash does not remove the Pod from its node, so the data in an emptyDir volume survives container crashes; it is deleted only when the Pod itself is removed from the node. In addition, emptyDir: {} uses the node's default storage medium, while setting emptyDir.medium to Memory mounts a memory-backed volume instead.
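The sharing mechanism can be modelled in Python with two toy containers whose different mount paths resolve to the same host directory. This is a conceptual sketch only: the Container class is hypothetical, and a scratch directory created with tempfile stands in for the directory K8s creates on the host.

```python
import os
import tempfile


class Container:
    """Toy container: maps mount paths inside the container to host directories."""

    def __init__(self, name, mounts):
        self.name = name
        self.mounts = mounts  # {path inside container: directory on host}

    def _host_path(self, path):
        for mount_path, host_dir in self.mounts.items():
            prefix = mount_path.rstrip("/") + "/"
            if path.startswith(prefix):
                return os.path.join(host_dir, path[len(prefix):])
        raise FileNotFoundError(path + " is not on a mounted volume")

    def write(self, path, text):
        with open(self._host_path(path), "w", encoding="utf-8") as fh:
            fh.write(text)

    def read(self, path):
        with open(self._host_path(path), encoding="utf-8") as fh:
            return fh.read()


def demo():
    with tempfile.TemporaryDirectory() as empty_dir:
        generator = Container("html-generator", {"/usr/share": empty_dir})
        nginx = Container("nginx", {"/usr/share/nginx/html": empty_dir})
        generator.write("/usr/share/index.html", "Sun Mar 13 08:40:01 UTC 2022\n")
        # The same file is visible under nginx's own mount path.
        return nginx.read("/usr/share/nginx/html/index.html")


print(demo(), end="")
```

When the scratch directory is removed at the end of demo, the data is gone, which mirrors what happens to an emptyDir when the Pod is removed from its node.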

HostPath

Unlike emptyDir, a hostPath volume mounts a file or directory from the host node’s file system into the Pod. When the Pod is deleted, the hostPath it used is not deleted, so a new Pod that mounts the same hostPath still sees the original content. But this holds only when the new Pod is scheduled to the same node as the deleted one, so strictly speaking hostPath is still temporary storage.

A typical application of the hostPath volume is to inject the host node's time zone into the container through a volume mount, so that the container uses the same time zone as the host node.

Example of use

Create hostpath-demo.yaml with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: "hostpath-volume-pod"
  namespace: default
  labels:
    app: "hostpath-volume-pod"
spec:
  containers:
    - name: hostpath-volume-container
      image: "alpine:latest"
      command: ["sleep", "3600"]
      volumeMounts:
        - name: localtime
          mountPath: /etc/localtime
  volumes:
    - name: localtime
      hostPath:
        path: /usr/share/zoneinfo/Asia/Shanghai

To achieve this, you only need to mount the host file /usr/share/zoneinfo/Asia/Shanghai onto /etc/localtime inside the container.

You can use the kubectl apply command to apply this file, and then enter the Pod container and use the date command to view the current time of the container.

# Create the Pod
$ kubectl apply -f hostpath-demo.yaml
pod/hostpath-volume-pod created
# Open a shell inside the Pod's container
$ kubectl exec -it hostpath-volume-pod -- sh
# Run the date command to print the current time
/ # date
Sun Mar 13 17:00:22 CST 2022 # Shanghai time zone

You can see that the output is Sun Mar 13 17:00:22 CST 2022, where CST stands for China Standard Time, the time zone of Shanghai and of the host node. Without the volume mount, the container defaults to the UTC time zone.
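The effect of the mounted time zone file can be reproduced with Python's datetime module. The sketch below uses a fixed UTC+8 offset named CST as a stand-in for Asia/Shanghai, so that it does not depend on time zone data being installed; render is a hypothetical helper, and the instant is chosen to match the date output above.

```python
from datetime import datetime, timedelta, timezone

# Fixed-offset stand-in for the Asia/Shanghai zone file (UTC+8, abbreviated CST).
CST = timezone(timedelta(hours=8), "CST")


def render(moment, tz):
    """Format an instant the way the date command prints it in a given zone."""
    return moment.astimezone(tz).strftime("%a %b %d %H:%M:%S %Z %Y")


instant = datetime(2022, 3, 13, 9, 0, 22, tzinfo=timezone.utc)
print(render(instant, timezone.utc))  # Sun Mar 13 09:00:22 UTC 2022
print(render(instant, CST))           # Sun Mar 13 17:00:22 CST 2022
```

The instant is the same in both lines; only the zone used to display it differs, which is all the /etc/localtime mount changes.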

Summary

This part introduced the temporary storage options of K8s and how they are used. Among them, emptyDir has a narrow range of uses, such as a temporary cache or a checkpoint for a long-running task.

It should be noted that most Pods should be unaware of the host node and should not access its file system. A DaemonSet may sometimes need to access the host node's file system, and hostPath can be used to share the host's time zone with a container, but it is rarely needed otherwise. The best practice for hostPath is to avoid it whenever possible.

Persistent volumes

The life cycle of a temporary volume is the same as that of the Pod: when the Pod is deleted, K8s automatically deletes the temporary volumes mounted on it. When the application in a Pod needs to save data to disk, and the data must survive even if the Pod is scheduled to another node, we need real persistent storage.

K8s supports many types of persistent volumes. The following are some of the volume types supported by v1.24:

awsElasticBlockStore – AWS Elastic Block Store (EBS)

azureDisk – Azure Disk

azureFile – Azure File

cephfs – CephFS volume

csi – Container Storage Interface (CSI)

fc – Fibre Channel (FC) storage

gcePersistentDisk – GCE persistent disk

glusterfs – Glusterfs volumes

iscsi – iSCSI (SCSI over IP) storage

local – the local storage device mounted on the node

nfs – Network File System (NFS) storage

portworxVolume – Portworx Volume

rbd – Rados block device (RBD) volume

vsphereVolume – vSphere VMDK volume

There is no need to panic when you see so many persistent volume types, because K8s has a unique set of ideas for persistent volumes so that developers don’t have to care about the persistent storage types behind them, that is, no matter what kind of persistent volumes developers use, their usage is different. is consistent.

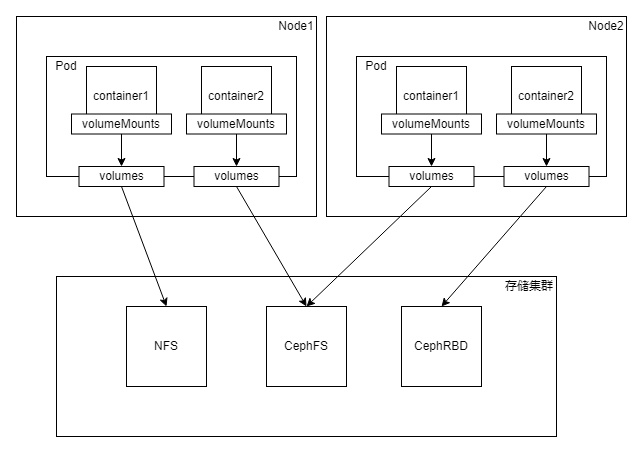

The K8s persistent volume design architecture is as follows:

Node1 and Node2 represent two worker nodes. When we create a Pod on a worker node, we specify the container mount directory through spec.containers.volumeMounts and the volume to mount through spec.volumes. We used volumes earlier to mount configuration information and temporary volumes, and persistent volumes are mounted the same way. Each entry in volumes points to a storage type in the underlying storage cluster.

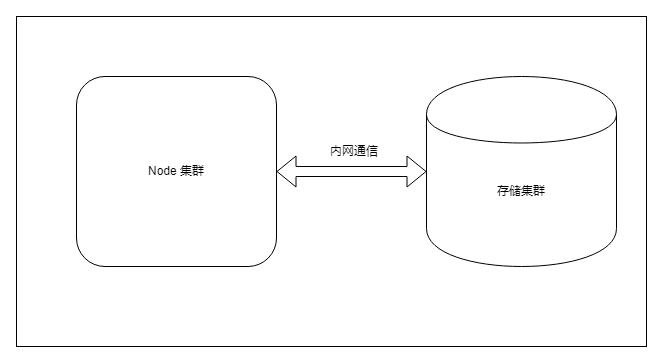

To ensure high availability, we usually build a storage cluster. Because storage is accessed from Pods, and Pods run on Nodes, it is best to build the storage cluster on the same intranet as the Node cluster so that access is faster. The storage cluster can use any persistent storage supported by K8s, such as NFS, CephFS, and Ceph RBD in the above figure.

Use NFS

A persistent volume is mounted in much the same way as a temporary volume, and we again use an Nginx service for testing. This time we use NFS storage to demonstrate K8s’ support for persistent volumes (for how to build an NFS test environment, please refer to the appendix at the end of the article). Create nfs-demo.yaml with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: "nfs-nginx-pod"
  namespace: default
  labels:
    app: "nfs-nginx-pod"
spec:
  containers:
    - name: nfs-nginx
      image: "nginx:latest"
      ports:
        - containerPort: 80
          name: http
      volumeMounts:
        - name: html-volume
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: html-volume
      nfs:
        server: 192.168.99.101 # address of the NFS server
        path: /nfs/data/nginx # the directory must already exist

This mounts the /nfs/data/nginx directory of the NFS service at /usr/share/nginx/html/, the directory where the container's index.html is located. The NFS service is specified in the spec.volumes configuration item: server is the address of the NFS server, and path is the exported path on the NFS server, which must already exist. Then apply this file with the kubectl apply command.

$ kubectl apply -f nfs-demo.yaml

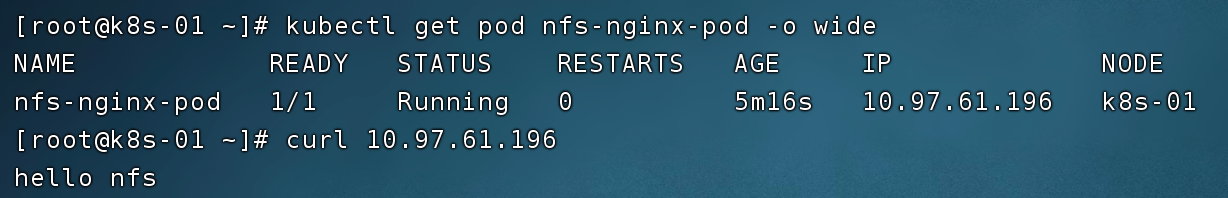

Next, let’s check the result of this Pod using NFS storage:

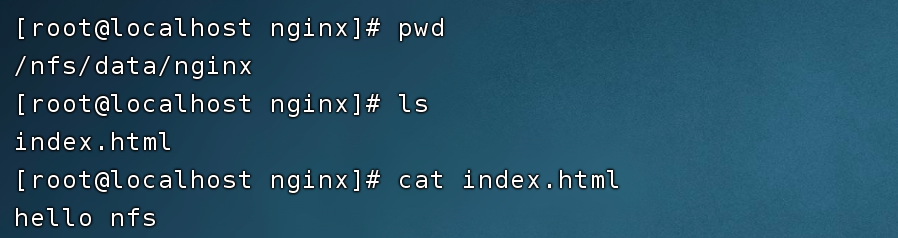

In the NFS node, we prepare an index.html file whose content is hello nfs.

Use the curl command to directly access the IP address of the Pod, and the index.html content of the Nginx service can be returned. The output of the result is hello nfs, which proves that the NFS persistent volume is mounted successfully.

Log in to the Pod container, and use the df -Th command to view the mounting information of the container directory. It can be found that the /usr/share/nginx/html/ directory of the container is mounted to the /nfs/data/nginx directory of the NFS service.

Now, when we execute kubectl delete -f nfs-demo.yaml to delete the Pod, the data on the NFS server's disk still exists. This is what makes the volume persistent.

Persistent Volume Pain Points

Persistent volumes solve the problem that data in temporary volumes is easily lost. However, using them directly in a Pod, as above, still has pain points. Most visibly, the Pod definition has to spell out storage details such as the NFS server address and path, so whoever writes the Pod must know how the storage is set up.

Therefore, to address these shortcomings, K8s abstracts three resources for persistent storage: PV, PVC, and StorageClass. They are defined as follows:

PV (PersistentVolume) describes a persistent storage volume

PVC (PersistentVolumeClaim) describes the persistent storage properties that a Pod wants to use, that is, a storage volume claim

StorageClass creates the corresponding PV according to the description in a PVC

PV and PVC map onto object-oriented ideas in programming languages: PVC is the interface, and PV is the concrete implementation.

With these three resource types, Pods can use persistent volumes through static provisioning and dynamic provisioning.

static supply

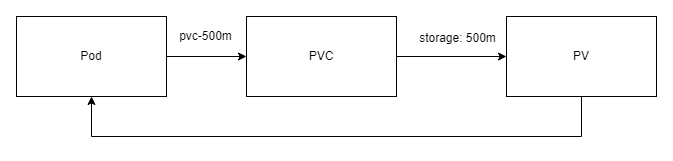

Static provisioning does not involve StorageClass, only PVC and PV. Its usage flow chart is as follows:

When using static provisioning, the Pod is no longer directly bound to the persistent storage, but is bound to the PVC, and then the PVC is bound to the PV. In this way, the containers in the Pod can use the persistent storage that is actually applied for by the PV.

Example of use

Create pv-demo.yaml with the following content:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-1g
  labels:
    type: nfs
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-storage
  nfs:
    server: 192.168.99.101
    path: /nfs/data/nginx1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-100m
  labels:
    type: nfs
spec:
  capacity:
    storage: 100Mi # Mi means mebibytes; a lowercase m suffix would mean milli
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-storage
  nfs:
    server: 192.168.99.101
    path: /nfs/data/nginx2
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-500m
  labels:
    app: pvc-500m
spec:
  storageClassName: nfs-storage
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 500Mi # Mi means mebibytes; a lowercase m suffix would mean milli
---
apiVersion: v1
kind: Pod
metadata:
  name: "pv-nginx-pod"
  namespace: default
  labels:
    app: "pv-nginx-pod"
spec:
  containers:
    - name: pv-nginx
      image: "nginx:latest"
      ports:
        - containerPort: 80
          name: http
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: html
      persistentVolumeClaim:
        claimName: pvc-500m

The yaml file defines the following content:

Two PVs: with capacities of 1 GiB and 100 MiB respectively, set through spec.capacity.storage; they give the address and path of the NFS storage service through spec.nfs.

One PVC: requests 500 MiB of storage.

One Pod: spec.volumes references the PVC named pvc-500m instead of binding directly to the NFS storage service.

Apply the file with the kubectl apply command:

$ kubectl apply -f pv-demo.yaml

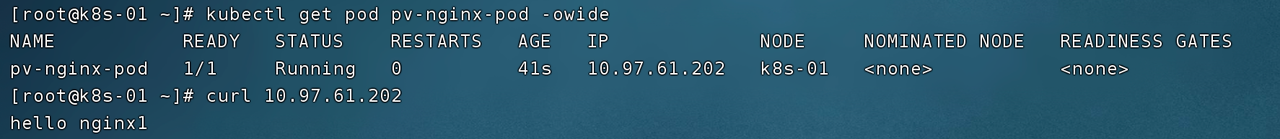

With everything created, the results can be checked as follows:

First, check the newly created Pod through the kubectl get pod command, then access the Pod’s IP address with curl; the response is hello nginx1.

Then check the created PVC through kubectl get pvc:

STATUS field: the PVC is in the Bound state, that is, it has been bound to a PV.

CAPACITY field: the PVC is bound to a 1 GiB PV. Although the PVC requests only 500 MiB, the two PVs we created are 1 GiB and 100 MiB, and K8s chooses the best fit that satisfies the request. No PV is exactly 500 MiB and 100 MiB is not enough, so the PVC is automatically bound to the 1 GiB PV.
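The selection rule can be sketched in Python as "the smallest PV that satisfies the claim". This is a simplified model, not the actual K8s binder: parse_quantity and pick_pv are hypothetical helpers, only a few common quantity suffixes are handled, and the sizes are written with the binary suffix Mi. Note that in K8s quantities a lowercase m suffix means milli, so 100m would parse as one tenth of a byte rather than 100 mebibytes.

```python
from fractions import Fraction

# Multipliers for a subset of the suffixes K8s quantities accept.
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "m": Fraction(1, 1000),
}


def parse_quantity(text):
    """Convert a quantity such as 500Mi or 1Gi into a number of bytes."""
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if text.endswith(suffix):
            return Fraction(text[: -len(suffix)]) * _SUFFIXES[suffix]
    return Fraction(text)


def pick_pv(pvs, claim):
    """Return the name of the smallest PV that satisfies the claim, or None."""
    wanted = parse_quantity(claim["storage"])
    candidates = [
        pv for pv in pvs
        if pv["storageClassName"] == claim["storageClassName"]
        and set(claim["accessModes"]) <= set(pv["accessModes"])
        and parse_quantity(pv["storage"]) >= wanted
    ]
    if not candidates:
        return None  # the PVC would stay Pending
    return min(candidates, key=lambda pv: parse_quantity(pv["storage"]))["name"]


PVS = [
    {"name": "nfs-pv-1g", "storage": "1Gi", "storageClassName": "nfs-storage",
     "accessModes": ["ReadWriteOnce"]},
    {"name": "nfs-pv-100m", "storage": "100Mi", "storageClassName": "nfs-storage",
     "accessModes": ["ReadWriteOnce"]},
]
CLAIM = {"storage": "500Mi", "storageClassName": "nfs-storage",
         "accessModes": ["ReadWriteOnce"]}

print(pick_pv(PVS, CLAIM))
```

With these inputs the claim is matched to nfs-pv-1g, and a claim larger than every PV returns None, which corresponds to a PVC left in the Pending state.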

Query the PVs through kubectl get pv, and you can see that the STATUS of the 1 GiB PV is Bound. Its CLAIM column shows the name of the PVC bound to it.

Now we log in to the NFS server and check the files in the directories that back the different PVs on the NFS storage.

It can be seen that index.html under the /nfs/data/nginx1 directory contains hello nginx1, which is the response we got from the Pod through curl above.

This completes the use of a persistent volume, so let us summarize the whole process. The Pod references a PVC in spec.volumes. The PVC is only a storage claim that describes what kind of persistent storage the Pod needs. It does not need to give the NFS service address or say which PV to bind to. The two PVs, in turn, do not say which PVC to bind to; they only state their size and the NFS storage service address. K8s then binds the PVC to a PV automatically, so that the Pod is associated with the PV and can access persistent storage.

Other notes

If you are careful, you may have noticed that static provisioning, as mentioned above, does not involve StorageClass, yet the yaml files of the PVC and PVs still set spec.storageClassName to nfs-storage. This makes the resources easier to manage: only PVCs and PVs with the same StorageClass can be bound.

The examples also set spec.accessModes. This field identifies the access mode of the persistent volume. K8s supports four access modes for persistent volumes:

RWO – ReadWriteOnce – the volume can be mounted read-write by one node

ROX – ReadOnlyMany – Volume can be mounted read-only by multiple nodes

RWX – ReadWriteMany – the volume can be mounted read-write by multiple nodes

RWOP – ReadWriteOncePod – the volume can be mounted read-write by a single Pod (K8s 1.22 and above)

Only PVCs and PVs with the same read-write mode can be bound.

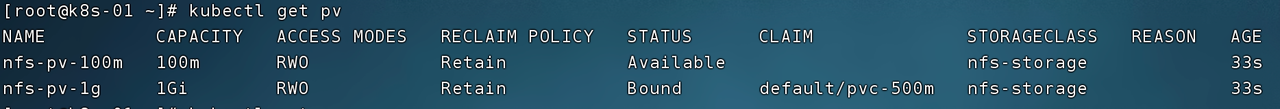

Now let’s continue the experiment, delete the Pod through the command kubectl delete pod pv-nginx-pod, and check the PVC and PV status again.

As can be seen from the figure above, the PVC and PV are still there after the Pod is deleted, which means that deleting the Pod does not affect the PVC. Whether the PV is deleted when the PVC is deleted is determined by the reclaim policy. There are three PV reclaim policies (pv.spec.persistentVolumeReclaimPolicy):

Retain – manual reclamation: after the PVC is deleted, the PV still exists and must be cleaned up manually by the administrator

Recycle – basic scrub (equivalent to rm -rf /thevolume/*); deprecated in recent versions and not recommended, dynamic provisioning is recommended instead

Delete – delete the PV as well, that is, cascade delete

Now use the command kubectl delete pvc pvc-500m to delete the PVC and check the PV status.

It can be seen that the PV still exists, and its STATUS has changed to Released. A PV in this state cannot be bound to a PVC again and must be handled manually by the administrator. This follows from the reclaim policy: the PVs above do not set one, so the default for manually created PVs, Retain, applies.

Note: a PVC that is in use by a running Pod is not removed immediately when you delete it; the deletion is postponed until no Pod uses the PVC any more.
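The three reclaim policies can be summarized as a small lookup from policy to the PV's fate once its PVC is deleted. The Python function below is only a compact restatement of the list above; the name phase_after_claim_deleted is hypothetical, and None is used here to mean that the PV object no longer exists.

```python
def phase_after_claim_deleted(reclaim_policy):
    """Return the PV phase after its PVC is deleted, or None if the PV is removed."""
    if reclaim_policy == "Retain":
        return "Released"   # kept, waits for manual cleanup by the administrator
    if reclaim_policy == "Recycle":
        return "Available"  # scrubbed and offered to new claims (deprecated)
    if reclaim_policy == "Delete":
        return None         # the PV is deleted together with the claim
    raise ValueError("unknown reclaim policy: " + reclaim_policy)


for policy in ("Retain", "Recycle", "Delete"):
    print(policy, "->", phase_after_claim_deleted(policy))
```

The Released status observed in the experiment corresponds to the Retain branch.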

Shortcomings of static provisioning

We have now walked through static provisioning. Although it is cleaner than binding the NFS service directly in the Pod, static provisioning still has shortcomings.

First, it wastes resources. In the above example, the PVC requests 500 MiB, but no PV is exactly that size, so K8s binds a 1 GiB PV to it.

There is also a more serious problem. If no PV satisfies the conditions, the PVC cannot be bound and stays in the Pending state, and the Pod cannot start. The administrator therefore has to create a large number of PVs in advance and wait for new PVCs to bind to them, or constantly watch for PVCs that no PV satisfies and create a matching PV immediately. This is obviously unacceptable.

Dynamic provisioning

Because of these shortcomings of static provisioning, K8s introduces a more convenient way to use persistent volumes: dynamic provisioning. The core component of dynamic provisioning is StorageClass (storage class). StorageClass has two main functions:

The first is resource grouping. When we specified a StorageClass in the static provisioning example above, it served to group resources for easy management.

The second is automatically creating new PVs based on the resources requested by PVCs. This is done by the provisioner storage plug-in named in the StorageClass.

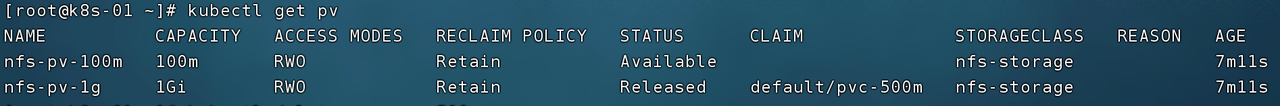

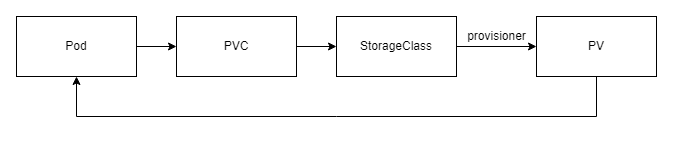

Its usage flow chart is as follows:

Compared with static provisioning, dynamic provisioning adds a StorageClass between the PVC and the PV. PVs no longer need to be created in advance: as long as the PVC names a StorageClass that has a provisioner, a PV is created automatically and bound to the PVC.

We can create a StorageClass for each type of persistent storage on offer, for example:

nfs-storage

cephfs-storage

rbd-storage

You can also mark a StorageClass as the default by adding the corresponding annotation when creating it:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
...

If spec.storageClassName is not specified when a PVC is created, the PVC uses the default StorageClass.
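For a StorageClass that already exists, the same annotation can be applied with kubectl patch. The sketch below wraps that command in a hypothetical helper, set_default_sc; the class name is supplied by the caller, and kubectl is assumed to be configured for the cluster.

```shell
# Mark an existing StorageClass as the cluster default.
# $1: StorageClass name (supplied by the caller)
set_default_sc() {
  kubectl patch storageclass "$1" -p \
    '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
}
```

Afterwards, kubectl get storageclass marks the default class with (default) next to its name.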

Example of use

We again use NFS as the persistent storage.

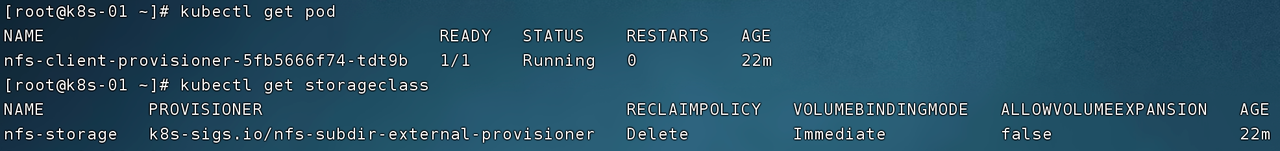

First, we need a provisioner that supports automatic PV creation; several open source implementations are available on GitHub. This example uses the storage plug-in nfs-subdir-external-provisioner. Installation is simple: apply the YAML files it provides with kubectl apply. Once the plug-in is installed, create a StorageClass as follows:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"

This StorageClass names k8s-sigs.io/nfs-subdir-external-provisioner, the provisioner we just installed. The provisioner is essentially a Pod, which you can see with kubectl get pod. A StorageClass that names a provisioner can create PVs automatically because the provisioner Pod creates them on its behalf.

After the provisioner and StorageClass are created, we can try out dynamic provisioning. First create nfs-provisioner-demo.yaml with the following content:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
spec:
  storageClassName: nfs-storage
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Mi
---
apiVersion: v1
kind: Pod
metadata:
  name: "test-nginx-pod"
  namespace: default
  labels:
    app: "test-nginx-pod"
spec:
  containers:
    - name: test-nginx
      image: "nginx:latest"
      ports:
        - containerPort: 80
          name: http
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: html
      persistentVolumeClaim:
        claimName: test-claim

Here we define only a PVC and a Pod; no PV is defined. The PVC's spec.storageClassName is set to nfs-storage, the StorageClass created above. Then create the PVC and Pod with kubectl apply:

$ kubectl apply -f nfs-provisioner-demo.yaml
persistentvolumeclaim/test-claim created
pod/test-nginx-pod created

Now check the PV, PVC, and Pod. You can see that a PV has been created automatically and that the three are bound together.

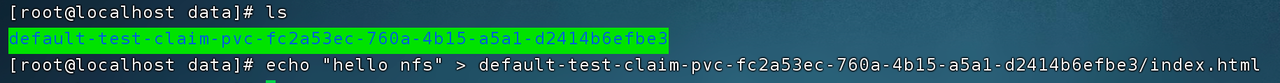

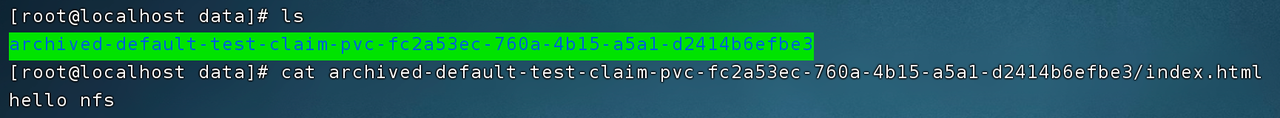
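The same check can be scripted. As a sketch, the hypothetical helper below reads the phase of a PVC and the name of the PV it is bound to; test-claim is the PVC from the manifest above, and kubectl is assumed to point at the same namespace.

```shell
# Print "<phase> <bound PV name>" for a PVC in the current namespace.
# $1: PVC name
pvc_binding() {
  kubectl get pvc "$1" -o jsonpath='{.status.phase} {.spec.volumeName}'
}
```

Once provisioning has succeeded, pvc_binding test-claim prints Bound followed by the name of the automatically created PV.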

Then log in to the NFS server and write the text hello nfs into the remotely mounted volume.

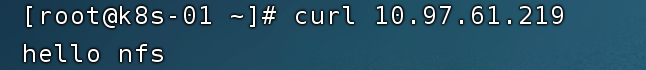

On the K8s side, you can use the curl command to verify that the mount works.
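A sketch of both steps follows. The helper names are hypothetical. write_hello runs on the NFS server and takes the directory that backs the volume as an argument, because the exact directory name is chosen by the plug-in. curl_pod runs on a machine that has kubectl access and can reach Pod IPs, such as a cluster node.

```shell
# On the NFS server: write a test page into the directory backing the volume.
# $1: directory that backs the volume
write_hello() {
  echo "hello nfs" > "$1/index.html"
}

# On the K8s side: look up the Pod IP and fetch the page served by nginx.
# $1: Pod name
curl_pod() {
  ip=$(kubectl get pod "$1" -o jsonpath='{.status.podIP}') || return 1
  curl -s "http://$ip/"
}
```

If the mount works, curl_pod test-nginx-pod returns the hello nfs text written on the server.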

If you now delete the Pod and PVC with kubectl delete -f nfs-provisioner-demo.yaml, the PV is deleted as well, because its reclaim policy is Delete. The data in the NFS volume is not lost, however: it is archived into a directory whose name starts with archived. This behavior is implemented by the storage plug-in k8s-sigs.io/nfs-subdir-external-provisioner, and it shows what a storage plug-in can do.
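On the NFS server, the archived directories can be listed with a short loop. This sketch assumes only what is stated above, namely that archived directory names start with archived; the function name list_archived and its export path argument are illustrative.

```shell
# Print every directory directly under $1 whose name starts with "archived".
# $1: exported NFS directory, for example /nfs/data
list_archived() {
  for d in "$1"/archived*; do
    if [ -d "$d" ]; then
      printf '%s\n' "$d"
    fi
  done
}
```

With the environment from the appendix, this would be called as list_archived /nfs/data.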

Having completed these steps, we can see that defining a StorageClass with a provisioner gives us not only automatic PV creation but also automatic archiving when data is deleted. This is the elegance of the K8s dynamic provisioning design, and it is why dynamic provisioning can be regarded as the best practice for persistent storage.

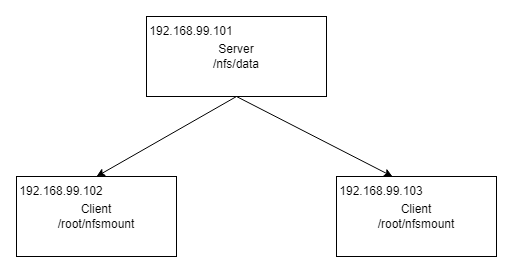

Appendix: NFS Experimental Environment Construction

NFS stands for Network File System. It is a form of distributed storage that lets different hosts share directories over a local area network.

The NFS architecture diagram shows one Server node and two Client nodes.

The steps for setting up NFS on CentOS are listed below.

Server node

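# Install the NFS utilities
yum install -y nfs-utils

# Create the NFS directory
mkdir -p /nfs/data/

# Create the exports file; * means every IP on the network may access it
echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

# Enable and start the RPC bind service and the NFS service
systemctl enable rpcbind
systemctl enable nfs-server
systemctl start rpcbind
systemctl start nfs-server

# Reload so that the configuration takes effect
exportfs -r

# Check that the configuration took effect
exportfs

# The output looks like this
# /nfs/data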

Client node

# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Install the NFS utilities
yum install -y nfs-utils

# Mount the shared directory on the NFS server at the local path /root/nfsmount
mkdir -p /root/nfsmount
mount -t nfs 192.168.99.101:/nfs/data /root/nfsmount
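To confirm that the client mount succeeded, one option is to look for the mount point in the kernel's mount table. The sketch below is an addition for illustration: is_nfs_mounted is a hypothetical helper, and it reads /proc/mounts by default, which assumes a Linux client.

```shell
# Succeed if $1 is a mount point with an NFS filesystem type (nfs, nfs4).
# $1: mount point to check
# $2: mount table to read (optional, defaults to /proc/mounts)
is_nfs_mounted() {
  awk -v path="$1" '
    $2 == path && $3 ~ /^nfs/ { found = 1 }
    END { exit !found }
  ' "${2:-/proc/mounts}"
}
```

For the client above, the call would be is_nfs_mounted /root/nfsmount, which returns a zero exit status when the share is mounted.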
