Google Research has announced MusicLM, a model that generates high-fidelity music from text descriptions. MusicLM casts conditional music generation as a hierarchical sequence-to-sequence modeling task and produces audio at a sampling rate of 24 kHz.

Whether the text description is a paragraph, a story, or just a single word, MusicLM can generate matching music. It can also adjust the style of the music according to the era, time, place, and other elements mentioned in the text.

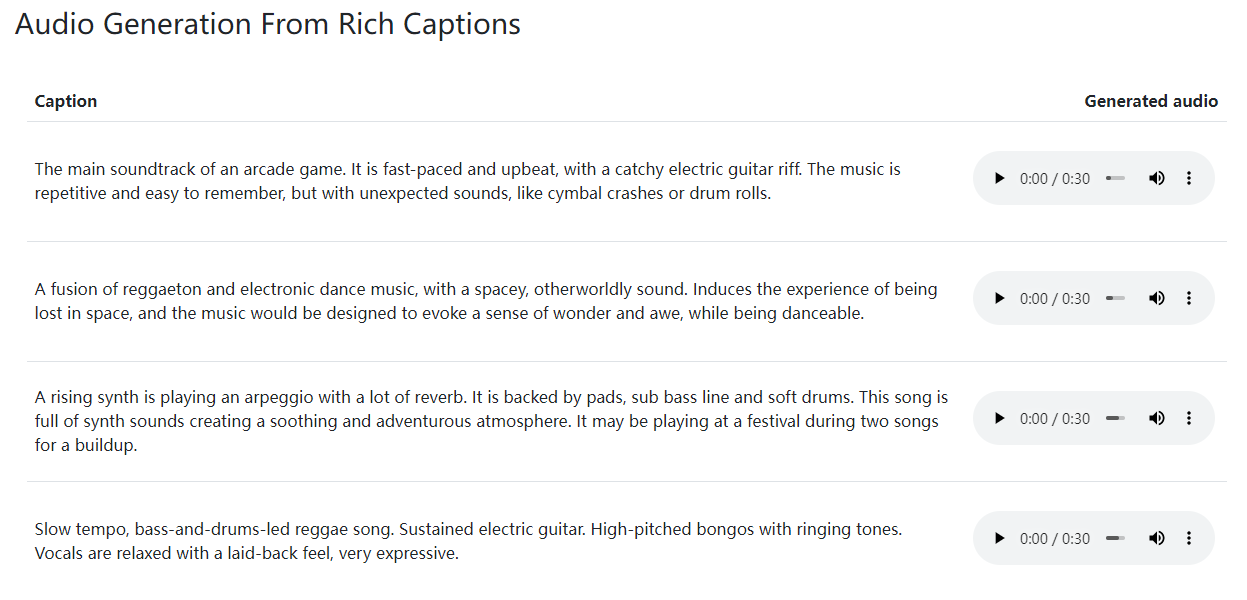

The MusicLM homepage offers many samples. Their text descriptions contain plenty of mood-setting phrases, such as “the experience of being lost in space”, “creates an atmosphere of soothing and adventure”, and “evokes a sense of wonder and awe”, alongside concrete use cases such as “soundtrack for arcade games” and “suitable for dancing”. The samples suggest that MusicLM handles these combinations of vague moods and specific scenarios with ease.

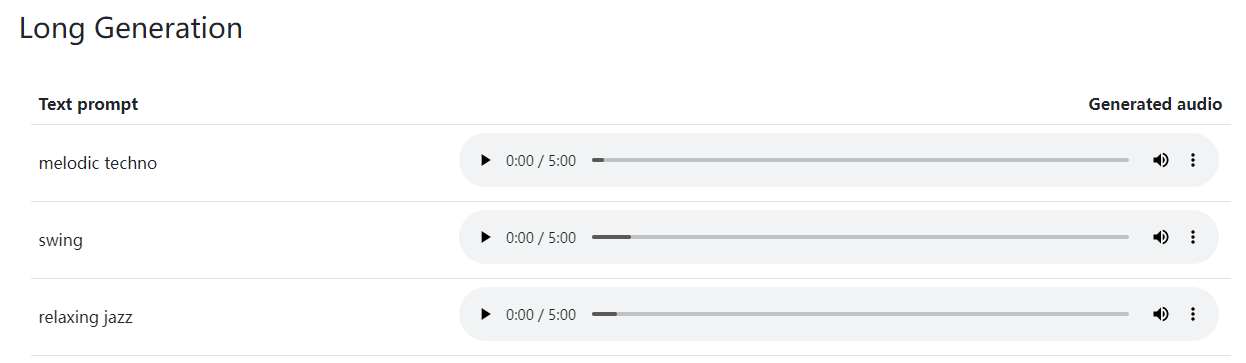

Besides long texts, MusicLM can also create music from a single word or short phrase, such as “swing”, “relaxed jazz”, or “melodic techno”:

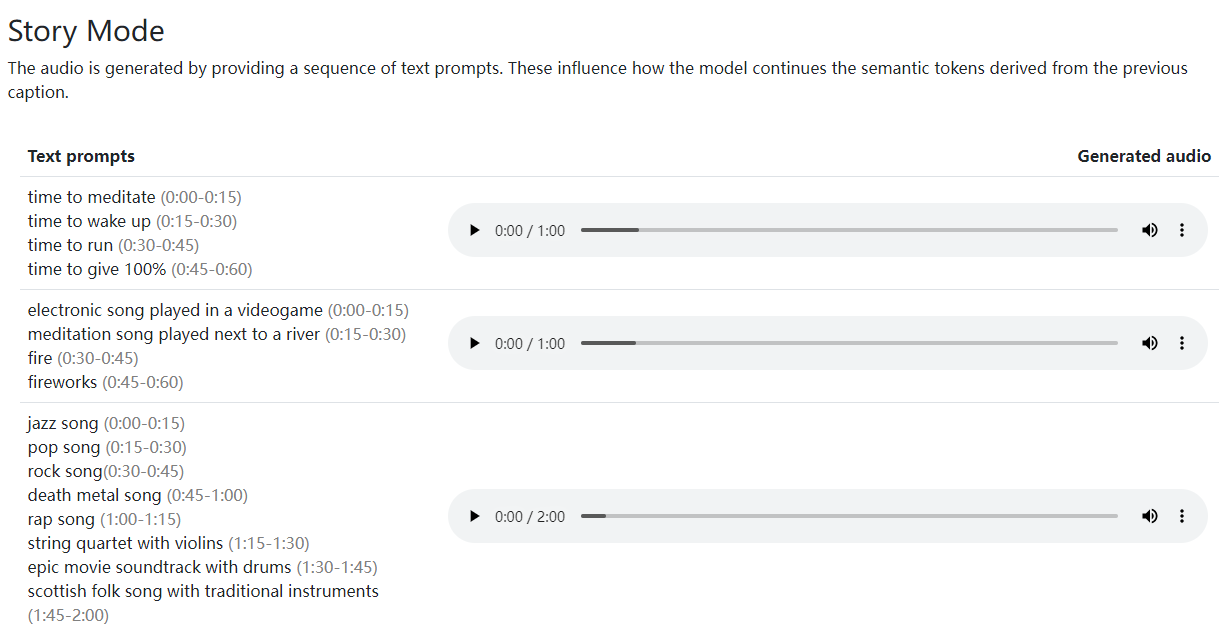

MusicLM also offers a “story mode”: you supply a sequence of text prompts with time stamps, and the model switches to a different style of music at each time stamp. The transitions are quite abrupt, though, and feel like a sudden change of art style in the middle of a piece.
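As a rough illustration of the time-stamped input that story mode implies, the prompt sequence can be thought of as a sorted schedule in which each entry stays in effect until the next time stamp. The sketch below is not MusicLM's interface, which has not been released. The names `Segment` and `active_prompt` and the 15-second spacing are assumptions made for this example, and the prompts are borrowed from the phrases quoted in this article.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """Hypothetical container for one story-mode entry."""
    start_s: float  # time stamp (seconds) at which this prompt takes over
    prompt: str     # text description for the segment


def active_prompt(schedule: list[Segment], t: float) -> str:
    """Return the prompt in effect at time t (seconds).

    Assumes `schedule` is sorted by `start_s`.
    """
    starts = [seg.start_s for seg in schedule]
    # Index of the last segment whose start is <= t.
    idx = bisect_right(starts, t) - 1
    if idx < 0:
        raise ValueError("t precedes the first time stamp")
    return schedule[idx].prompt


# Illustrative schedule; the spacing is an assumption.
schedule = [
    Segment(0.0, "relaxed jazz"),
    Segment(15.0, "swing"),
    Segment(30.0, "soundtrack for arcade games"),
]
```

The prompt changes instantly at each boundary, with no blending between neighbouring segments, which is one way to picture why the style switches in the samples sound so abrupt.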

Beyond these generation modes, you can refine the output by inserting keywords into the description, such as “hum”, “acoustic guitar”, or “fingerstyle guitar”. You can also steer the style with phrases that describe a setting or period, such as “a house in Berlin in the 90s”, “a beach in the Caribbean”, or “19th century”.

According to Google Research, MusicLM outperforms previous audio-generating AI systems, including AudioLM, which Google launched a few months ago, in both audio quality and adherence to the text description. To support the quality assessment of MusicLM, Google also released MusicCaps, a dataset of about 5.5k music-text pairs: 5,521 music examples, each labeled with a text description written by musicians.

However, Google currently has no plans to release MusicLM to the public. According to Google's explanation, there are two reasons. First, although most of the music MusicLM generates sounds natural, it often produces weird pieces that “seem too early for human civilization”. Second, about 1% of the music MusicLM generates directly copies the training data, so Google is unwilling to release the model. After all, the copyright debate around AI-generated art is raging, and the copyright lawsuits against GitHub Copilot and Stable Diffusion have presumably given Google pause as well.
