The non-profit organization LAION-AI has released OpenFlamingo, a framework for training and evaluating large multimodal models (LMMs). It is an open-source reproduction of DeepMind’s Flamingo, a multimodal model capable of processing and reasoning about images, videos, and text.

The demo page for its OpenFlamingo-9B model showcases the training results, and users can upload pictures for the model to recognize.

The editor gave it a quick try. For a simple picture, the model took 11 seconds and described the main subject of the picture quite accurately.

For another picture with a lot of content, recognition took up to about 16 seconds, but the model still only described the main subject in the center of the picture and gave no other details.

Clearly, the accuracy is not yet ideal, and the model needs further iteration.

LAION-AI stated that the goal of OpenFlamingo is to develop a multimodal system that can handle a wide range of vision-language tasks, with the ultimate aim of matching GPT-4 in processing both visual and textual input.

The first release of OpenFlamingo includes the following:

- A Python framework for training Flamingo-style LMMs (based on Lucidrains’ flamingo implementation and David Hansmair’s flamingo-mini repository).

- A large-scale multimodal dataset of interleaved image and text sequences.

- An in-context learning evaluation benchmark for vision-language tasks.

- A first version of the OpenFlamingo-9B model (based on LLaMA).
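To make "interleaved image and text sequences" and in-context evaluation more concrete, here is a minimal sketch of assembling a few-shot captioning prompt. It assumes the `<image>` and `<|endofchunk|>` marker tokens used in the OpenFlamingo repository's examples; the function name `build_few_shot_prompt` and the file names are hypothetical, and the real library's tokenization and image preprocessing are not shown.

```python
from typing import List, Tuple

# Assumed marker tokens, modeled on the OpenFlamingo examples: one marks where an
# image sits in the text, the other marks the end of each image-text chunk.
IMAGE_TOKEN = "<image>"
END_OF_CHUNK = "<|endofchunk|>"


def build_few_shot_prompt(
    demos: List[Tuple[str, str]], query_image: str, query_prefix: str
) -> Tuple[List[str], str]:
    """Interleave demo (image, caption) pairs with a final, open-ended query.

    Returns the images in the order they appear in the text together with the
    prompt string, so the i-th IMAGE_TOKEN corresponds to the i-th image.
    """
    images: List[str] = []
    parts: List[str] = []
    for image_path, caption in demos:
        images.append(image_path)
        # Each in-context example: image placeholder, its caption, end-of-chunk marker.
        parts.append(f"{IMAGE_TOKEN}{caption}{END_OF_CHUNK}")
    # The query chunk is left unterminated so the model continues the text.
    images.append(query_image)
    parts.append(f"{IMAGE_TOKEN}{query_prefix}")
    return images, "".join(parts)


if __name__ == "__main__":
    imgs, prompt = build_few_shot_prompt(
        [("cats.jpg", "An image of two cats."),
         ("sink.jpg", "An image of a bathroom sink.")],
        query_image="query.jpg",
        query_prefix="An image of",
    )
    print(imgs)    # ['cats.jpg', 'sink.jpg', 'query.jpg']
    print(prompt)  # <image>An image of two cats.<|endofchunk|><image>An image of a bathroom sink.<|endofchunk|><image>An image of
```

Keeping the image list and the text in lockstep is the key design point: the model has to know which image each placeholder refers to, and the number of examples placed before the query is what "few-shot" means in an in-context benchmark.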

The OpenFlamingo-9B model, developed by LAION-AI, was trained on the Multimodal C4 dataset. LAION-AI said it will release details of the dataset soon.

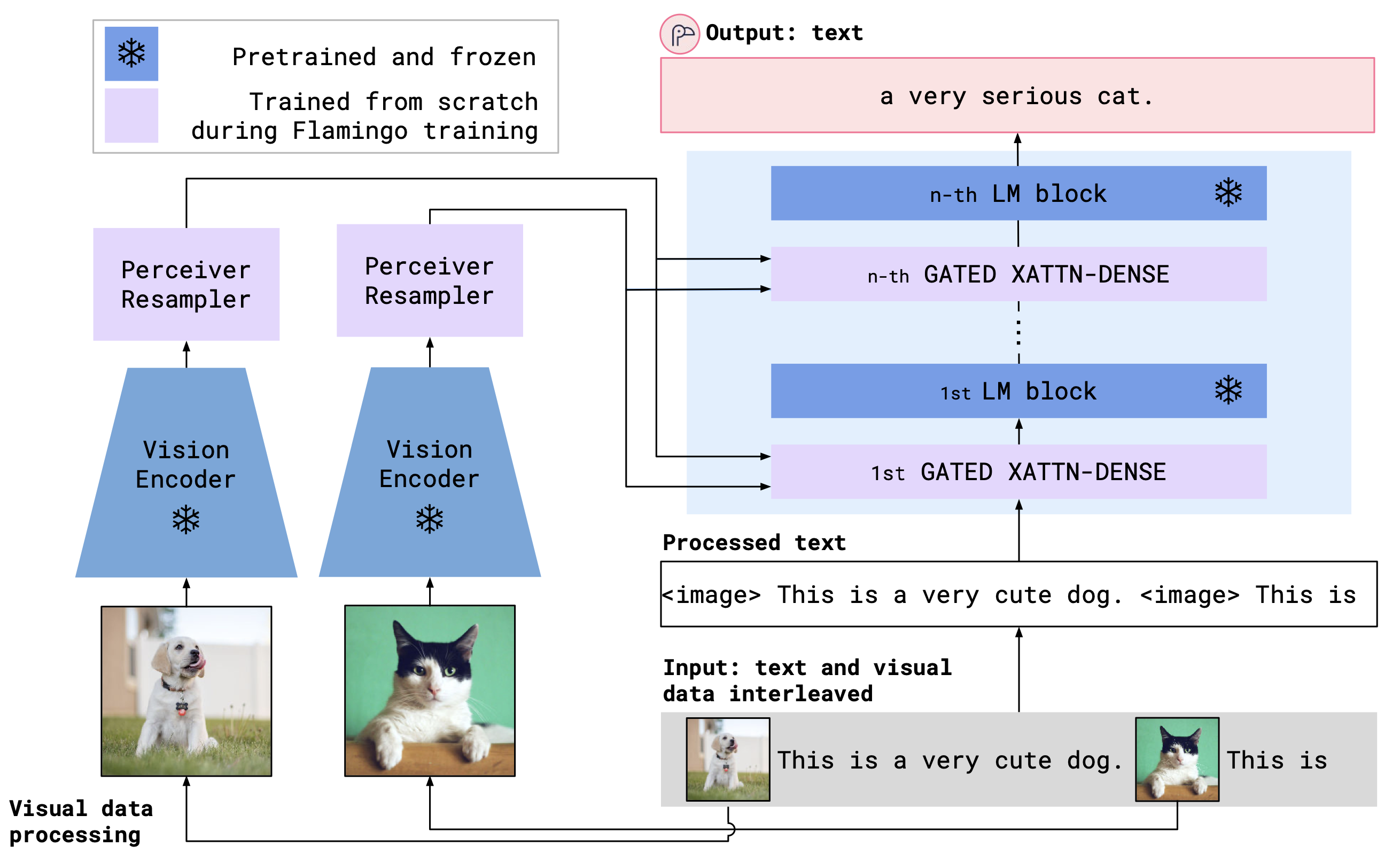

The overall architecture of OpenFlamingo is shown in the diagram. Its technical details largely follow DeepMind’s Flamingo, which was trained on a large-scale web corpus of interleaved text and images. Like Flamingo, OpenFlamingo uses cross-attention layers to fuse a pre-trained vision encoder with a pre-trained language model.
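The fusion step can be illustrated with a minimal, dependency-free sketch of a single-head, tanh-gated cross-attention block in the style described in the Flamingo paper: text hidden states act as queries, visual features act as keys and values, and a learnable gate scalar `alpha` (initialized to 0 in Flamingo) controls how much visual information is added back. This is not OpenFlamingo's actual code; the function names are hypothetical, and multi-head attention, layer normalization, the gated feed-forward layer, per-image masking, and the Perceiver Resampler are all omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x k) matrix and a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def gated_cross_attention(text: Matrix, visual: Matrix,
                          wq: Matrix, wk: Matrix, wv: Matrix,
                          alpha: float) -> Matrix:
    """Cross-attention from text tokens (queries) to visual features
    (keys/values), added to the text states through a tanh gate.

    text:   (n_text x d) hidden states from the language model
    visual: (n_vis x d) features from the vision encoder
    alpha:  gate scalar; tanh(0) = 0, so alpha = 0 returns text unchanged
    """
    q = matmul(text, wq)
    k = matmul(visual, wk)
    v = matmul(visual, wv)
    d_k = len(q[0])
    scores = matmul(q, transpose(k))
    # Scaled dot-product attention weights, one row per text token.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    attended = matmul(weights, v)
    gate = math.tanh(alpha)
    # Residual connection: the gate decides how much visual signal flows in.
    return [[x + gate * a for x, a in zip(xr, ar)] for xr, ar in zip(text, attended)]


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    text = [[1.0, 2.0], [3.0, 4.0]]
    visual = [[0.5, -0.5], [1.0, 1.0], [0.0, 2.0]]
    # With alpha = 0 the block is a no-op, so a frozen language model starts
    # out behaving exactly as it did before the new layers were inserted.
    print(gated_cross_attention(text, visual, identity, identity, identity, alpha=0.0))
    # [[1.0, 2.0], [3.0, 4.0]]
```

Starting the gate at zero is why this design suits pre-trained components: training can gradually open the gate to let visual information in without disrupting the language model at initialization.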
