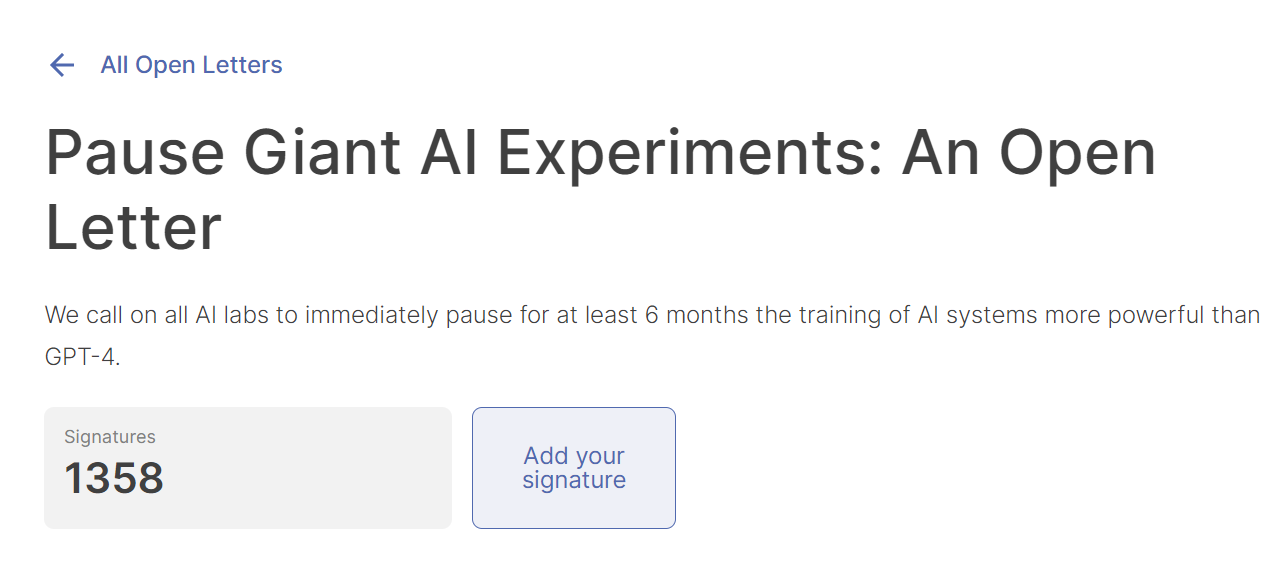

Thousands of industry executives and experts, including Elon Musk, Apple co-founder Steve Wozniak, and Turing Award winner Yoshua Bengio, have signed an open letter calling on all AI labs to immediately suspend the training of AI systems more powerful than GPT-4 for at least six months. The letter says this suspension should be public and verifiable and should include all key players. If such a moratorium cannot be implemented quickly, governments should step in and impose one.

The letter was published by the nonprofit Future of Life Institute. It elaborates on the risks that AI systems with human-competitive capabilities may pose to human society and civilization.

Numerous studies, and results from some of the top AI labs, suggest that AI systems with human-competitive intelligence could pose profound risks to society and humanity. As stated in the widely recognized Asilomar AI Principles, advanced AI could represent a profound change in the history of life on Earth and should be planned for and managed with commensurate care and resources. Unfortunately, this level of planning and management is not happening. In recent months AI labs have been locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one, not even their creators, can understand, predict, or reliably control.

Contemporary AI systems are now capable of competing with humans at general tasks. We must ask ourselves: should we allow machines to flood our information channels with propaganda and lies? Should we automate away all jobs, including the fulfilling ones? Should we develop non-human minds that may eventually outnumber, outsmart, obsolete, and replace us? Should we risk losing control of our civilization? Such decisions must never be delegated to unelected technical leaders. Powerful AI systems should be developed only when we are confident that their effects will be positive and their risks manageable. This confidence must be well founded and must increase with the size of a system's potential impact. OpenAI's recent statement on artificial general intelligence says, “At some point, it may be important to conduct an independent review before commencing training of future systems and, for state-of-the-art efforts, agree to limit the growth rate of computation used to create new models.” We agree. That point is now.

The open letter proposes that AI labs and independent experts use this moratorium to jointly develop and implement a set of shared safety protocols for advanced AI design and development, subject to rigorous review and oversight by independent external experts. “These protocols should ensure that systems that comply with them are safe beyond a reasonable doubt.”

At the same time, AI developers must work with policymakers to significantly accelerate the development of robust AI governance systems. These should at a minimum include:

- A new, capable regulatory body dedicated to AI;

- Oversight and tracking of highly capable AI systems and large pools of computing power;

- Provenance and watermarking systems to help distinguish real content from synthetic content and to track model leaks;

- A robust auditing and certification ecosystem;

- Liability for harm caused by AI;

- Strong public funding for technical AI safety research;

- Well-resourced institutions for coping with the dramatic economic and political disruptions (especially to democracy) that AI will cause.

“Let us enjoy a long AI summer instead of rushing unprepared into a fall.”

The letter does not detail the dangers revealed by GPT-4, but signatories including NYU researcher Gary Marcus have long argued that chatbots are great liars and have the potential to be superspreaders of disinformation. There are also dissenting voices. The writer Cory Doctorow has compared the AI industry to a “pump and dump” scheme, arguing that both the potential and the threats of AI systems have been greatly exaggerated.

Some media outlets also believe that this open letter is unlikely to have any impact on the current climate of AI research, because technology companies such as Google and Microsoft, in their rush to deploy new products, often set aside concerns about safety and ethics. But the letter also shows that opposition to this “ship it now and fix it later” approach is growing, and that opposition has the potential to reach the political sphere for consideration by actual lawmakers.

It is worth mentioning that Microsoft co-founder Bill Gates also recently wrote on his personal blog about the impact of ChatGPT and generative AI on education, health care, productivity, equity, and other areas, and said that GPT was one of the two most revolutionary technologies he had seen in his lifetime.

“I am equally excited about this moment. This new technology can help improve the lives of people everywhere. At the same time, the world needs to set the rules of the road so that any downsides of artificial intelligence are far outweighed by its benefits, and so that everyone can enjoy these benefits no matter where they live or how much money they have. The age of AI is full of opportunities and responsibilities.”
