Bark is a transformer-based text-to-audio model created by Suno. Bark can generate highly realistic multilingual speech as well as other audio – including music, background noise and simple sound effects. The model can also produce non-verbal communication such as laughing, sighing and crying. To support the research community, we provide access to checkpoints of pretrained models ready for inference.

Usage

from bark import SAMPLE_RATE, generate_audio, preload_models
from IPython.display import Audio

# Download and load all models
preload_models()

text_prompt = """
     Hello, my name is Suno. And, uh — and I like pizza. [laughs]
     But I also have other interests such as playing tic tac toe.
"""
audio_array = generate_audio(text_prompt)

# Play the generated audio in a notebook
Audio(audio_array, rate=SAMPLE_RATE)
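The Audio call only plays the clip inside a notebook. To keep the result, the array can be written to a WAV file. The sketch below uses only the standard-library wave and array modules. It assumes the samples are floats in the range [-1.0, 1.0] and that you pass bark's SAMPLE_RATE as sample_rate. The helper name save_wav is hypothetical and is not part of Bark.

```python
import array
import sys
import wave


def save_wav(path, samples, sample_rate):
    """Write mono float samples (assumed in [-1.0, 1.0]) as 16-bit PCM WAV.

    save_wav is a hypothetical helper, not part of the bark package.
    """
    pcm = array.array("h")  # signed 16-bit integers
    for s in samples:
        s = max(-1.0, min(1.0, float(s)))  # clip out-of-range values
        pcm.append(int(round(s * 32767)))
    if sys.byteorder == "big":
        pcm.byteswap()  # WAV sample data is little-endian
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)  # mono
        wav_file.setsampwidth(2)  # 2 bytes = 16 bits per sample
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(pcm.tobytes())
```

For example: save_wav("bark_generation.wav", audio_array, SAMPLE_RATE). The samples are clipped before scaling, so a few out-of-range values do not wrap around.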

Bark supports various languages out of the box and automatically determines the language from the input text. When prompted with code-switched text (text that mixes languages), Bark attempts to use the native accent for each language within the same voice.

# Spanish: "Good morning Miguel. Your colleague thinks your German is extremely bad."
text_prompt = """
    Buenos días Miguel. Tu colega piensa que tu alemán es extremadamente malo.
    But I suppose your english isn't terrible.
"""
audio_array = generate_audio(text_prompt)

Bark can generate all types of audio and, in principle, does not distinguish between speech and music. Sometimes Bark chooses to generate text as music, but you can guide it by adding musical notes around the lyrics.
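For lyrics that span several lines, a small helper can add the notes to each line. This sketch assumes that wrapping every non-empty line, rather than only the whole block, is acceptable. Bark does not ship such a function, and the name as_song_prompt is hypothetical.

```python
def as_song_prompt(lyrics: str, note: str = "♪") -> str:
    """Wrap each non-empty line of lyrics in musical-note markers.

    Hypothetical helper for building Bark text prompts; not part of bark.
    """
    lines = [line.strip() for line in lyrics.splitlines()]
    wrapped = [f"{note} {line} {note}" for line in lines if line]
    return "\n".join(wrapped)
```

Blank lines are dropped, so only lines that actually contain lyrics get markers.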

text_prompt = """
    ♪ In the jungle, the mighty jungle, the lion barks tonight ♪
"""
audio_array = generate_audio(text_prompt)

Bark can fully clone voices, including tone, pitch, emotion and rhythm. The model also tries to preserve music, ambient noise, etc. from the input audio. However, to reduce misuse of the technology, the development team restricted audio history prompts to a limited set of fully synthetic, Suno-provided options for each language. Specify a preset using the pattern {lang_code}_speaker_{number}.
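A preset name is just a string that follows this pattern, so it can be built and checked before being passed as history_prompt. The sketch below validates only the shape of the string: two lowercase letters, "_speaker_", then digits. It does not confirm that Suno actually ships that preset. The two-letter code assumption and the helper names are hypothetical.

```python
import re
from typing import Tuple

# Assumed shape of a preset name: two lowercase letters, "_speaker_", then
# one or more ASCII digits, e.g. "en_speaker_1". This checks form only.
_PRESET_PATTERN = re.compile(r"(?P<lang>[a-z]{2})_speaker_(?P<number>[0-9]+)")


def speaker_preset(lang_code: str, number: int) -> str:
    """Build a name following {lang_code}_speaker_{number} (hypothetical helper)."""
    name = f"{lang_code}_speaker_{number}"
    if _PRESET_PATTERN.fullmatch(name) is None:
        raise ValueError(f"not a valid preset name: {name!r}")
    return name


def parse_preset(name: str) -> Tuple[str, int]:
    """Split a preset name into (lang_code, number) (hypothetical helper)."""
    match = _PRESET_PATTERN.fullmatch(name)
    if match is None:
        raise ValueError(f"not a valid preset name: {name!r}")
    return match.group("lang"), int(match.group("number"))
```

Usage would look like generate_audio(text_prompt, history_prompt=speaker_preset("en", 1)). The code uses fullmatch rather than match so that trailing characters are rejected.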

text_prompt = """

I have a silky smooth voice, and today I will tell you about

the exercise regimen of the common sloth.

"""

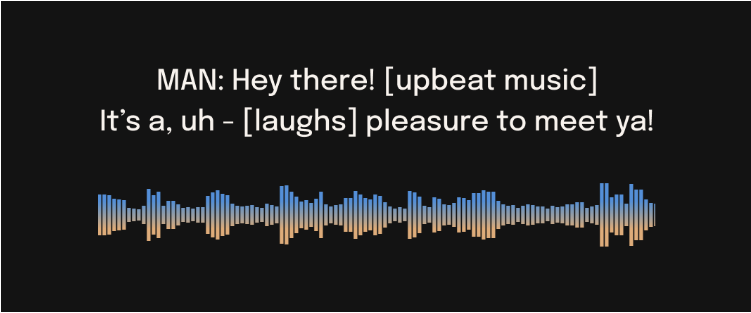

audio_array = generate_audio(text_prompt, history_prompt="en_speaker_1")You can provide specific speaker prompts such as narrator, man, woman, etc. But these cues are not always honored, especially when given conflicting audio history cues.
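A multi-speaker prompt is ordinary text, so a longer script can be assembled from (speaker, line) pairs. This is a sketch that assumes one "SPEAKER: line" turn per line with upper-case labels. The helper dialogue_prompt is hypothetical, and Bark may still ignore the labels.

```python
from typing import Iterable, Tuple


def dialogue_prompt(turns: Iterable[Tuple[str, str]]) -> str:
    """Join (speaker, line) pairs into "SPEAKER: line" text, one turn per line.

    Hypothetical helper, not part of bark. Labels are upper-cased; Bark may
    still not honor them, especially alongside a conflicting history prompt.
    """
    lines = []
    for speaker, text in turns:
        lines.append(f"{speaker.strip().upper()}: {text.strip()}")
    return "\n".join(lines)
```

The result can be passed to generate_audio like any other text prompt.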

text_prompt = """
    WOMAN: I would like an oatmilk latte please.
    MAN: Wow, that's expensive!
"""
audio_array = generate_audio(text_prompt)

Bark has been tested and works on both CPU and GPU (PyTorch 2.0+, CUDA 11.7 and CUDA 12.0). It runs transformer models with more than 100M parameters. On modern GPUs with PyTorch nightly, Bark can generate audio in roughly real time. On older GPUs, the default Colab runtime or CPU, inference can be 10-100 times slower.
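"Roughly real time" can be made concrete with a real-time factor. This is wall-clock generation time divided by the duration of the audio produced, where duration is the number of samples divided by the sample rate. A factor near 1.0 means real time. A factor of 10-100 matches the slowdown described for older hardware. This is a sketch: the helper names are hypothetical, and 24000 Hz is used only as an example rate (use bark's SAMPLE_RATE in practice).

```python
import time


def real_time_factor(elapsed_seconds: float, num_samples: int, sample_rate: int) -> float:
    """Generation time divided by audio duration (1.0 = real time, >1.0 = slower)."""
    if num_samples <= 0 or sample_rate <= 0:
        raise ValueError("num_samples and sample_rate must be positive")
    audio_seconds = num_samples / sample_rate
    return elapsed_seconds / audio_seconds


def timed(fn, *args, **kwargs):
    """Run fn(*args, **kwargs) and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


# Worked example: 120000 samples at an example rate of 24000 Hz is 5 s of audio.
# Taking 50 s to generate it gives 50 / 5 = 10.0, i.e. 10 times slower than real time.
print(real_time_factor(50.0, 120_000, 24_000))  # prints 10.0
```

With Bark this might look like: audio_array, elapsed = timed(generate_audio, text_prompt), followed by real_time_factor(elapsed, len(audio_array), SAMPLE_RATE).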

If you don't have new hardware available, or if you want to try a larger version of the model, you can also sign up here for early access to the model playground.
