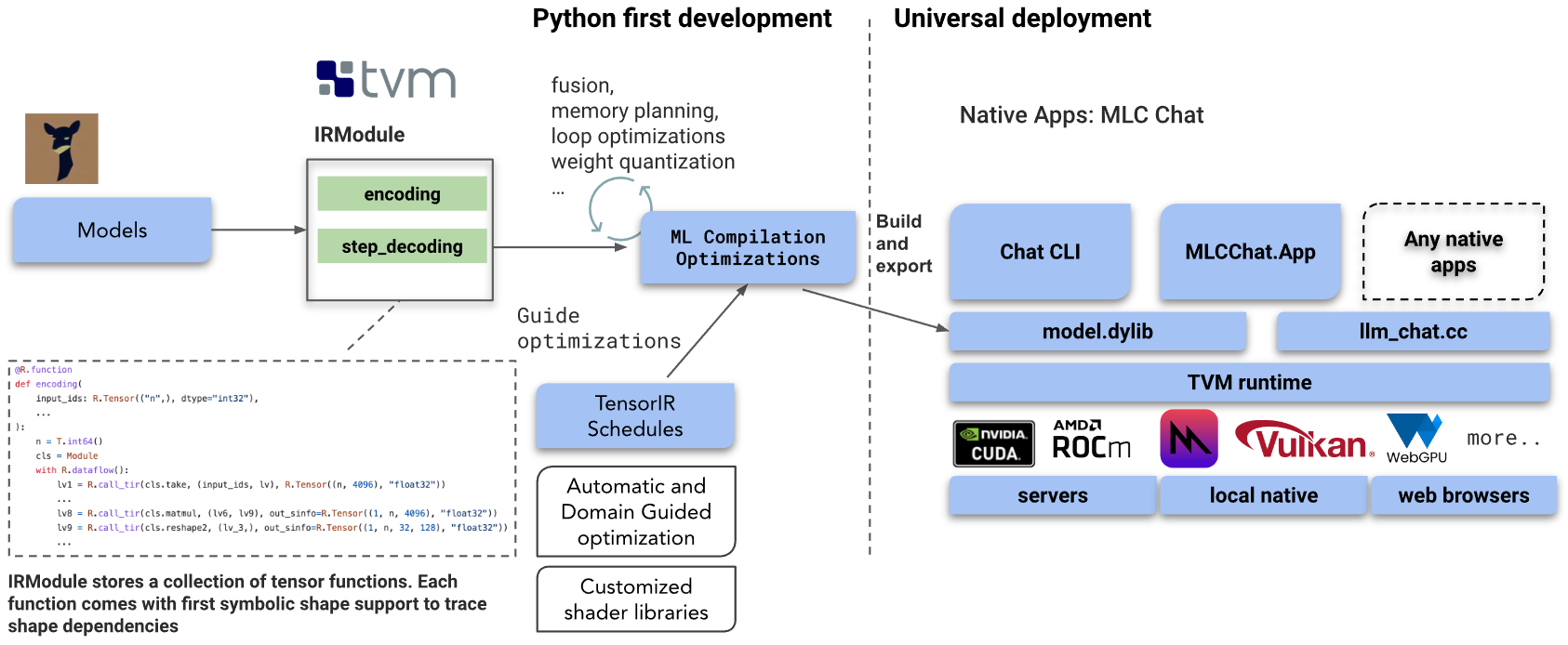

MLC LLM is a general solution that allows any language model to be deployed locally on various hardware backends and native applications.

MLC LLM also provides an efficient framework that lets users further optimize model performance for their own use cases. It is designed to let everyone develop, optimize, and deploy AI models locally on personal devices without server support, accelerated by the consumer-grade GPUs found in phones and laptops.

Platforms supported by MLC LLM include:

- iPhone;
- Metal GPUs on Intel/ARM MacBooks;
- AMD and NVIDIA GPUs via Vulkan on Windows and Linux;
- NVIDIA GPUs via CUDA on Windows and Linux;
- WebGPU in the browser (through the MLC LLM companion project Web LLM).

Overall workflow of MLC LLM:
