PaLM 2 is the next generation of large language models from Google. It excels at advanced reasoning tasks, including code and mathematics, classification and question answering, translation and multilingual proficiency, and natural language generation.

Google describes PaLM 2 as a state-of-the-art language model that outperforms its previous LLMs, including PaLM. Currently, PaLM 2 is the basis for Med-PaLM 2 and Sec-PaLM, and it powers Google's generative AI features and tools such as Bard and the PaLM API.

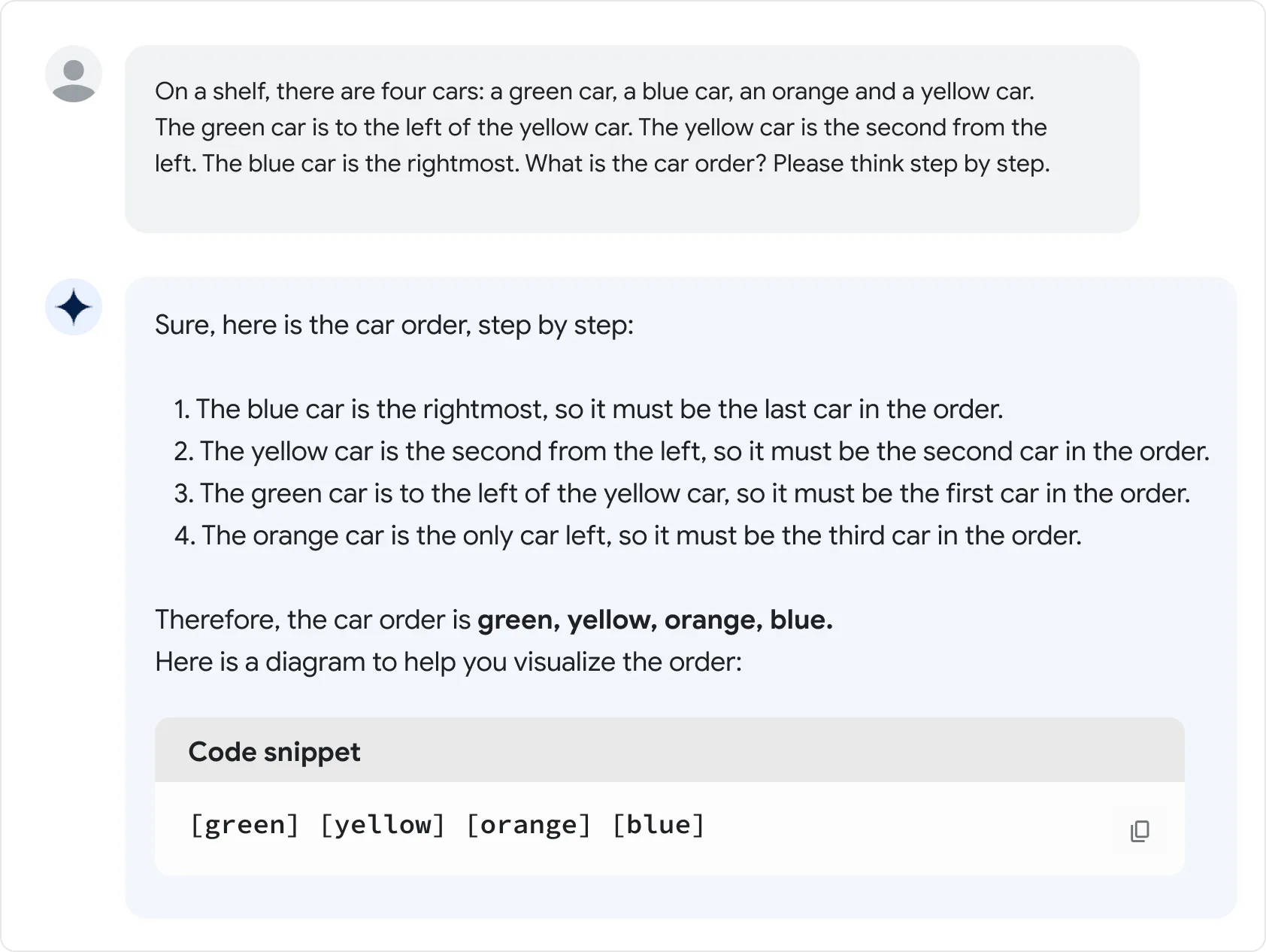

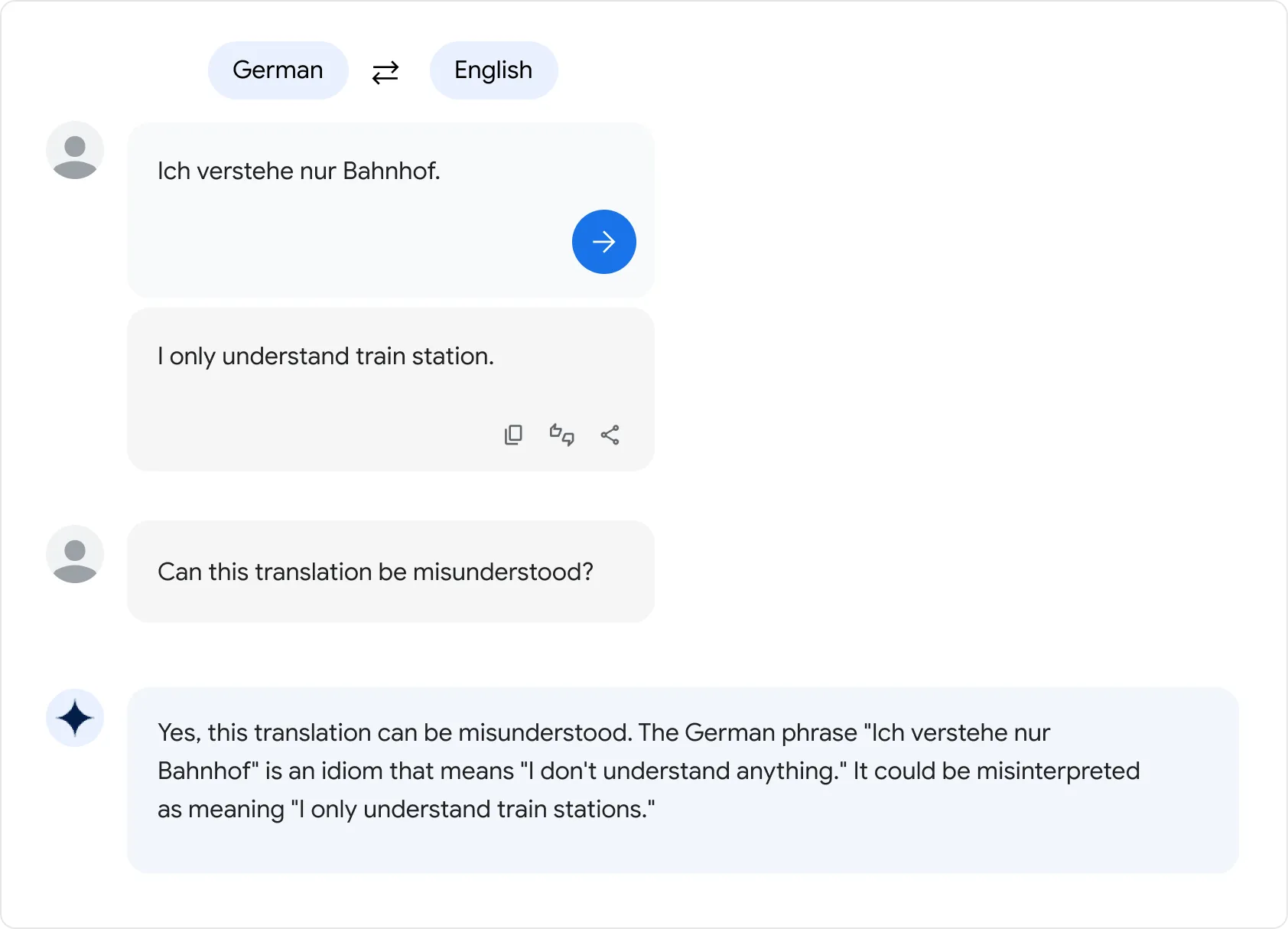

Reasoning: PaLM 2 can decompose complex tasks into simpler subtasks and is better at understanding the nuances of human language than previous LLMs such as PaLM. For example, PaLM 2 is good at understanding riddles and idioms, which requires grasping the ambiguous and figurative meaning of words rather than only their literal meaning.

Multilingual translation: PaLM 2 was trained on a corpus covering more than 100 languages, which makes it strong at multilingual tasks and able to handle more nuanced phrasing than previous models.

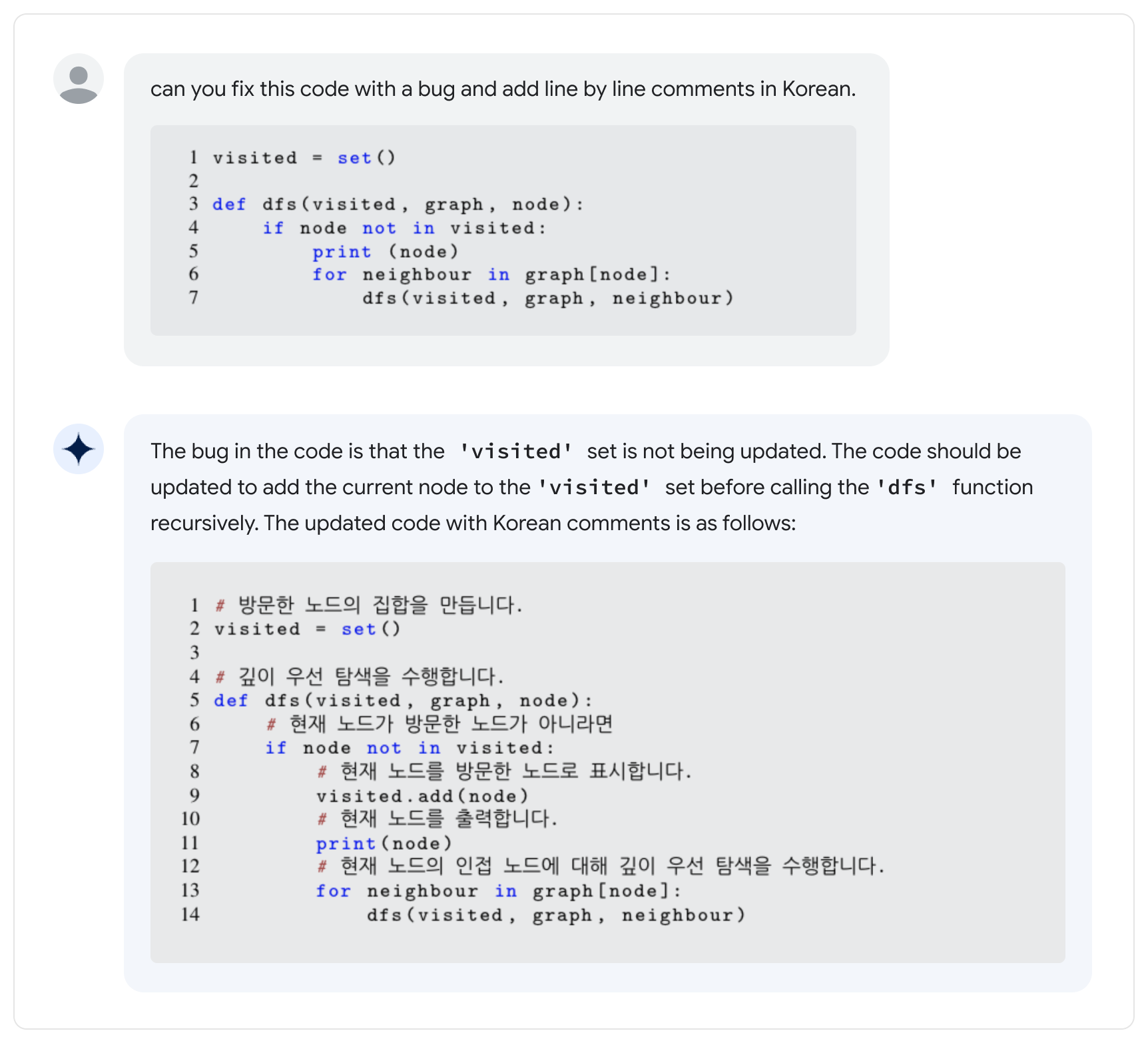

Coding: PaLM 2 can also understand, generate, and debug code, and it was pre-trained on more than 20 programming languages. This means it excels at popular programming languages like Python and JavaScript, but it can also generate specialized code in languages like Prolog, Fortran, and Verilog. Combined with its natural language capabilities, this can help teams collaborate across languages.

PaLM 2 is good at tasks like high-level reasoning, translation, and code generation because of the way it is built. It improves upon its predecessor, PaLM, by unifying three distinct research advances in large language models:

- Compute-optimal scaling: The basic idea of compute-optimal scaling is to scale model size and training dataset size in proportion to each other. This technique makes PaLM 2 smaller than PaLM but more efficient, with better overall performance, including faster inference, fewer parameters to serve, and a lower serving cost.

- Improved dataset mixing: Previous LLMs, such as PaLM, used pre-training datasets that were mainly English text. PaLM 2 improves its corpus with more languages and a diverse pre-training mix that includes hundreds of human and programming languages, mathematical equations, scientific papers, and web pages.

- Updated model architecture and objectives: PaLM 2 has an improved architecture and is trained on a variety of different tasks, all of which help PaLM 2 learn different aspects of language.
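
The proportional scaling described in the first point can be illustrated with a small sketch. It is not Google's actual recipe: it assumes the widely used approximation that training cost is about `6 * N * D` FLOPs for `N` parameters and `D` training tokens, and it uses a hypothetical fixed ratio of 20 tokens per parameter purely for illustration. The function name `compute_optimal_split` is likewise invented for this example.

```python
import math


def compute_optimal_split(flops_budget, tokens_per_param=20.0):
    """Split a training compute budget between model size and data size.

    Assumptions (not taken from the PaLM 2 report):
      * training cost C ~= 6 * N * D FLOPs
      * tokens and parameters grow in a fixed ratio D = tokens_per_param * N
    Solving C = 6 * N * (tokens_per_param * N) for N gives the formula below.
    """
    n_params = math.sqrt(flops_budget / (6.0 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


if __name__ == "__main__":
    # Each step multiplies the compute budget by 4.
    for budget in (1e21, 4e21, 1.6e22):
        n, d = compute_optimal_split(budget)
        print(f"{budget:.1e} FLOPs -> {n:.2e} params, {d:.2e} tokens")
```

Under these assumptions, multiplying the compute budget by four doubles both the parameter count and the token count, which is what scaling the two "proportionally" means in practice. The alternative of spending extra compute only on a larger model would leave that model undertrained for its size.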
