Guided reading

Deep learning has been widely implemented in practical business scenarios for natural language processing and other fields, and optimization of its inference performance has become an important part of the deployment process. Improvement of inference performance: On the one hand, the ability to deploy hardware can be fully utilized to reduce user response time while saving costs; In order to improve business accuracy indicators.

In this paper, the inference performance optimization work is carried out for the deep learning model in the address standardization service.passhigh performance operatorquantization, compilation optimization and other optimization methods, inAccuracy index is not reducedOn the premise of the AI model, theModelend-to-endInference speedHighestObtained 4.11 timesimprovement.

1. Model inference performance optimization methodology

Model inference performance optimization is one of the important aspects of AI service deployment. On the one hand, it can improve the efficiency of model inference and fully release the performance of hardware. On the other hand, under the premise of keeping the inference delay unchanged, it can make the business use a more complex model, thereby improving the accuracy index. However, inference performance optimization will encounter some difficulties in practical scenarios.

1.1 Difficulties in Natural Language Processing Scenario Optimization

Typical natural language processing (Natural Language Processing, NLP)task,Recurrent Neural Network (RNN) and BERT[7](Bidirectional Encoder Representations from Transformers.) are two types of model structures with higher usage rates. In order to facilitate the realization of the elastic expansion and contraction mechanism and the high cost performance of online service deployment,Natural language processing tasks are often deployed in e.g.x86 CPU platforms such as Intel® Xeon® processors. However, with the complexity of business scenarios, the inference computing performance requirements of services are getting higher and higher.Taking the above RNN and BERT models as examples, the performance challenges of deploying them on the CPU platform are as follows:

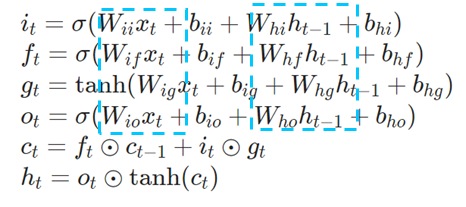

Recurrent Neural Networks are a class of sequential (sequence) data is input, recursion is performed in the evolution direction of the sequence and all nodes (recurrent units) are connected in a chain. Common RNNs in practical use include LSTM, GRU, and some derived variants. In the calculation process, as shown in the figure below, each output of the subsequent stage in the RNN structure depends on the corresponding input and output of the previous stage. Therefore, RNN can complete sequence-type tasks and has been widely used in NLP and even computer vision in recent years. Compared with BERT, RNN requires less computation and the model parameters are shared, but its computation timing dependency makes parallel computation of sequences impossible.

Schematic diagram of RNN structure

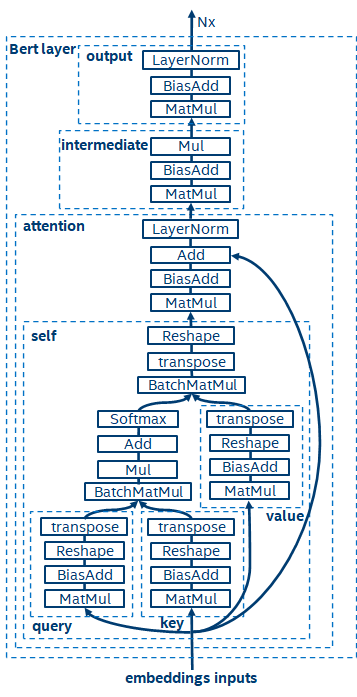

BERT[7]It is proved that unsupervised pre-training can be done on large datasets with a deep network structure, and then the model can be fine-tuned for specific tasks. It not only improves the accuracy performance for these specific tasks, but also simplifies the training process. The model structure of BERT is simple and easy to expand. By simply deepening and widening the network, better accuracy can be obtained than the RNN structure. On the other hand, the accuracy improvement comes at the cost of larger computational overhead, and there are a large number of matrix multiplication operations in the BERT model, which is a huge challenge for the CPU.

BERT model structure diagram

1.2 Model inference optimization strategy

Based on the analysis of the above inference performance challenges, we believe that the optimization of model inference from the software stack level mainly includes the following strategies:

- Model compression: including quantization, sparsity, pruning, etc.

- High-performance operators for specific scenarios

- AI compiler optimization

quantify

Model quantization refers to the process of approximating floating-point activation values or weights (usually represented by 32-bit floating-point numbers) as low-bit integers (16-bit or 8-bit), and then completing the calculation under the low-bit representation.In general, model quantization canCompressed model parametersthereby reducing model storage overhead; and by reducing memory access and effectively utilizing low-bit computing instructions (such as Intel® Deep Learning Boost Vector Neural Network Instructions, VNNI),getInference speedimprovement.

given floating point valuewe can map it to the low-bit value by the following formula:

inandis obtained through a quantization algorithm. Based on this, taking the Gemm operation as an example, it is assumed that there is a floating-point calculation process:

We can complete the corresponding calculation process in the low-bit domain:

high performance operator

In the deep learning framework, in order to maintain generality and take into account various processes (such as training), there is redundancy in the inference overhead of operators. When the model structure is determined, the inference process of the operator is only a subset of the original full process. Therefore, when the model structure is determined, we can implement high-performance inference operators and replace the general operators in the original model, thereby improving the inference speed.

The key to implementing high-performance operators on CPUs lies in reducing memory accesses and using more efficient instruction sets. In the calculation process of the original operator, on the one hand, there are a large number of intermediate variables, and these variables will perform a large number of read and write operations on the memory, thereby slowing down the speed of reasoning. In response to this situation, we can modify its calculation logic to reduce the overhead of intermediate variables; on the other hand, we can directly call the vectorized instruction set for some calculation steps inside the operator to accelerate it, such as Intel® Xeon® processing Efficient AVX512 instruction set on the device.

AI compiler optimization

With the development of the deep learning field, the structure of the model and the hardware deployed show a trend of diversified evolution. When deploying the model to each hardware platform, we usually call the runtime launched by each hardware manufacturer. In actual business scenarios, this may encounter some challenges, such as:

- The iteration speed of model structure and operator type is higher than that of the manufacturer’s runtime, so that some models cannot be quickly deployed based on the manufacturer’s runtime. At this time, you need to rely on the manufacturer to update, or use mechanisms such as plugins to implement missing operators.

- A business may contain multiple models, which may be trained by multiple deep learning frameworks, and the models may need to be deployed to multiple hardware platforms. At this time, it is necessary to convert these models with different formats to the format required by each hardware platform, and at the same time, problems such as changes in model accuracy and performance caused by different implementations of each inference framework must be considered, especially those such as quantization are highly sensitive to numerical differences. Methods.

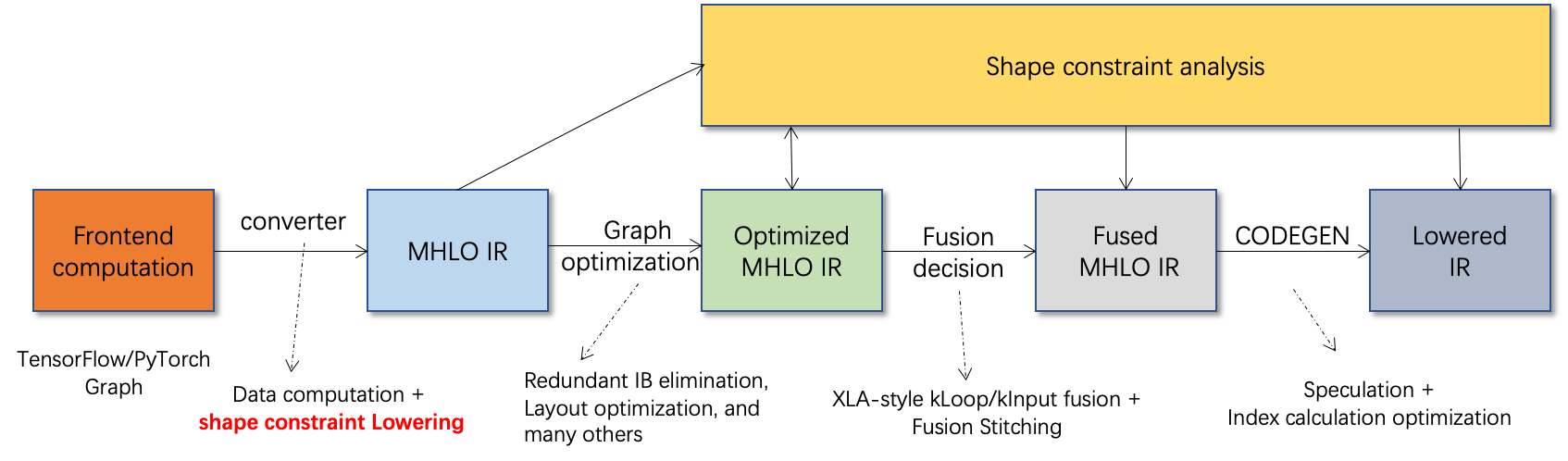

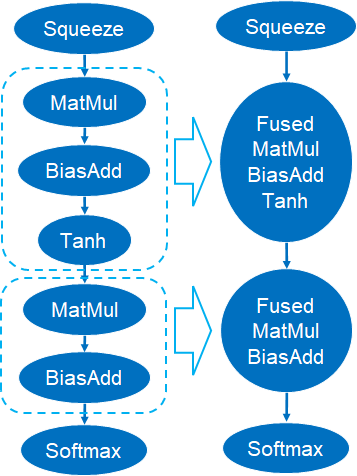

The AI compiler is proposed to solve the above problems, and it abstracts multiple levels to solve some of the above problems. First, it accepts the model calculation graph of each front-end framework as input, and generates a unified intermediate representation through various Converter transformations. Subsequently, graph optimization passes such as operator fusion and loop unrolling are applied to the intermediate representation to improve inference performance. Finally, the AI compiler will perform codegen for a specific hardware platform based on the optimized computational graph to generate executable code. In this process, optimization strategies such as stitch and shape constraint will be introduced.The AI Compiler has very goodRobustness, adaptability, ease of use, and the ability to reap significant optimization benefits.

In this article, the Alibaba Cloud machine learning platform PAI team jointlyIntel Data Center Software Team, Intel AI and Analytics Team,The NLP address standardization team of DAMO Academy has cooperated to implement a high-performance reasoning optimization solution to address the inference performance challenge of address standardization services.

2. Introduction to Address Standardization

Public security and government affairs, e-commerce logistics, energy (water, electricity and gas), operators, new retail, finance, medical and other industries often involve a large amount of address data in the process of business development, and these data often do not form a standard structure specification, there are missing addresses, Multiple issues in one place. With the upgrade of digitalization, the problem of non-standard urban addresses has become more prominent.

Address application existing problems

address normalization[2](Address Purification)It is a high-performance and high-accuracy standard address algorithm service precipitated by the NLP team of Alibaba Dharma Academy relying on Alibaba Cloud’s massive address corpus and its super-strong NLP algorithm strength. Address standardization products provide high-performance address algorithms from the perspective of standardizing address data and establishing a unified standard address library.

Advantages of address standardization

The address algorithm service can automatically standardize the processing of address data, and can effectively solve the problems of multiple addresses in one place, address identification, address authenticity identification, etc. Government agencies and developers provide address data cleaning and address standardization capabilities, so that address data can better support business. Address standardization products have the following characteristics:

- High accuracy rate: With a massive address corpus and super-strong NLP algorithm technology, and continuous optimization and iteration, the address algorithm has a high accuracy rate

- Super performance: accumulated rich experience in project construction, can stably carry massive data

- Comprehensive services: provide more than 20 kinds of address services to meet the needs of different business scenarios

- Flexible deployment: support public cloud, hybrid cloud, and privatization deployment.

The optimized module belongs to the address standardizationsearch module. Address search refers to the information related to the address text entered by the user, based on the address database and search engine, searches and associates the address text entered by the user, and returns relevant Point of Interest (POI) information. The address search function not only improves user data processing experience, but also serves as the basis for downstream services of multiple addresses, such as longitude and latitude query, door address standardization, and address normalization, etc., so it plays a key role in the entire address service system.

Schematic diagram of address service search system

Specifically, the optimized model is based onMulti-task geography pre-trained language model baseproducedMulti-task vector recall modelandRefinement model.

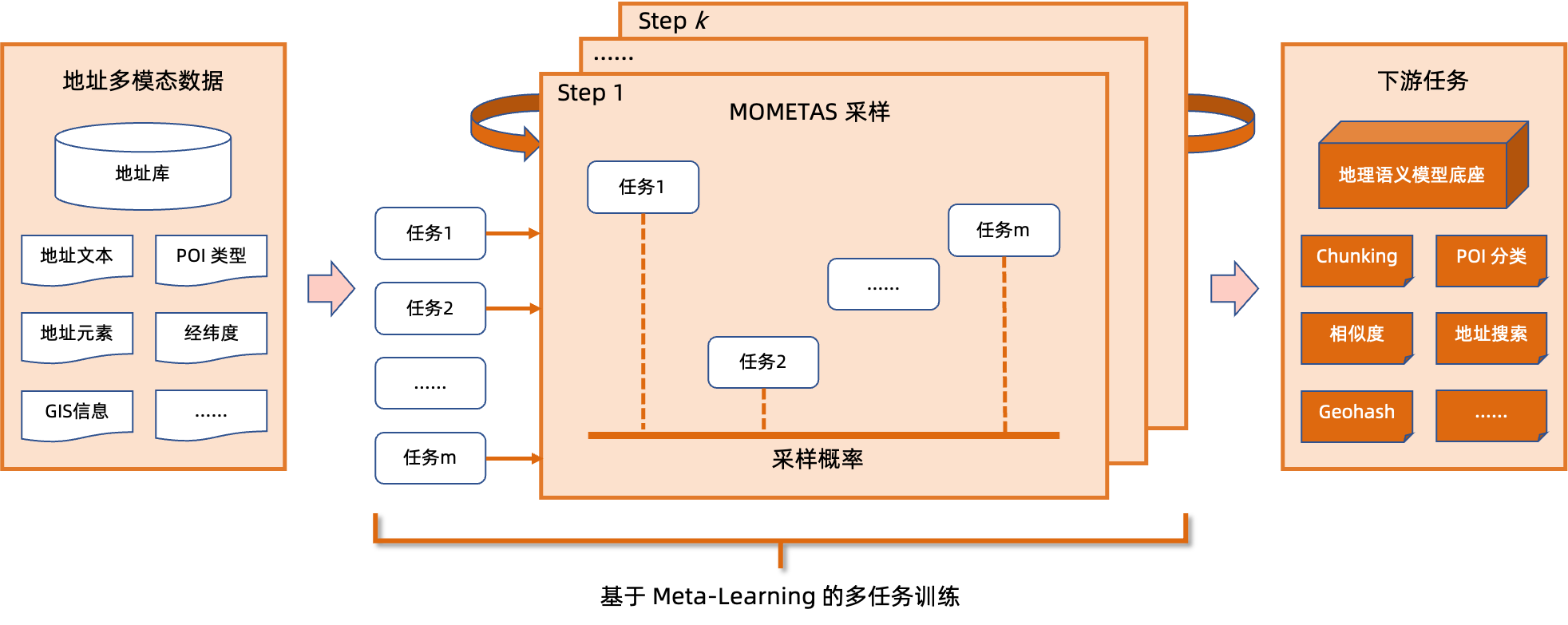

Multi-task geography pre-trained language model baseBased on the Masked Language Model (MLM) task, the classification of relevant points of interest and the identification of address elements (province, city, district, POI, etc.) are combined, and through Meta Learning, adaptive The sampling probability of multiple tasks is adjusted to incorporate general address knowledge into the language model.

Schematic diagram of the base of the multi-task address pre-training model

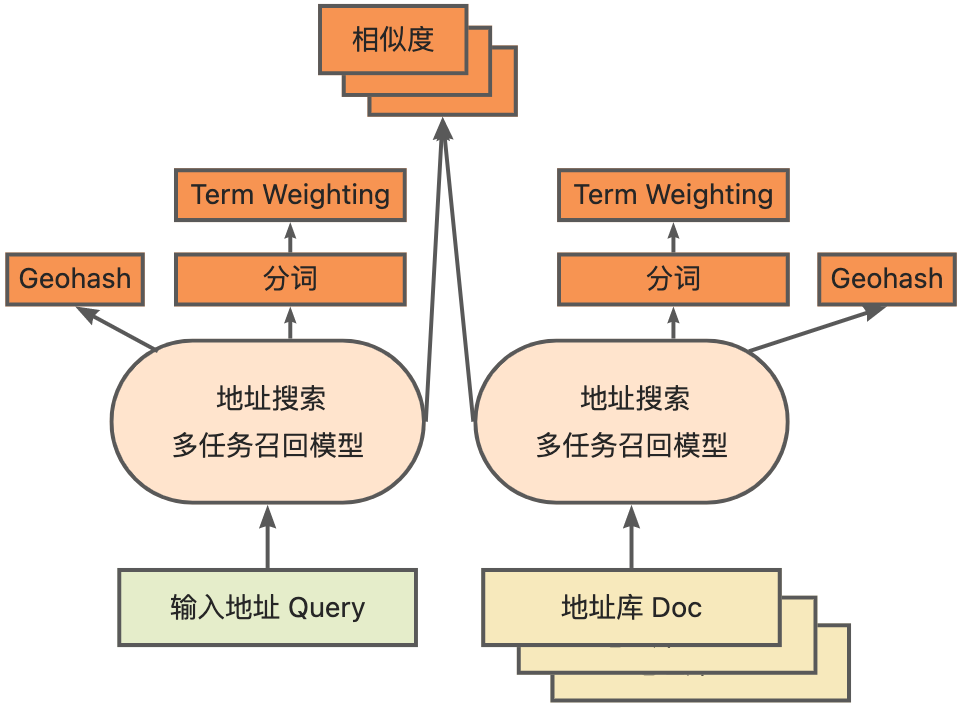

Multi-task vector recall modelBased on the above base training, it includes four tasks: twin tower similarity, Geohash (address encoding) prediction, word segmentation and Term Weighting (word weight).

Schematic diagram of multi-task vector recall model

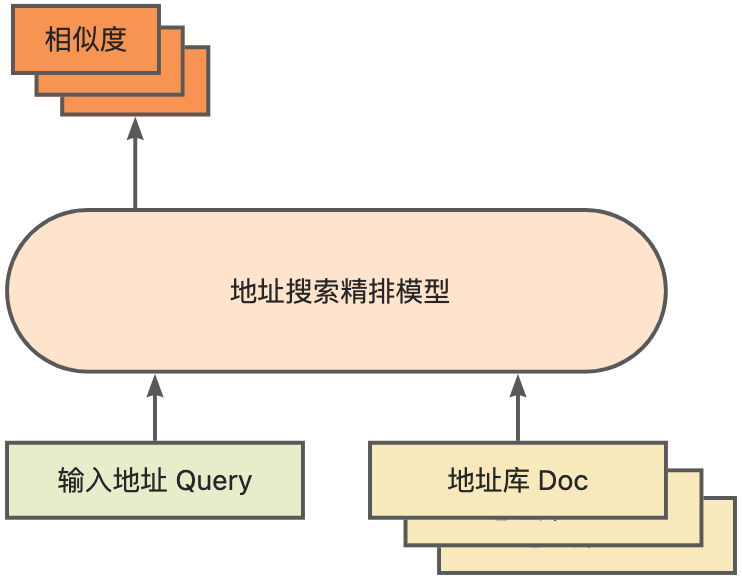

As the core module for calculating address similarity matching,Refinement modelOn the basis of the above base, massive click data and labeled data are introduced for training and training.[3]and through the model distillation technology, the efficiency of the model is improved[4]. The final reordering is done with the address library documents applied to the recall of the recall model.The 4-layer single model trained based on the above process can be used in the CCKS2021 Chinese NLP address correlation task[5]obtained better results than the 12-layer baseline model (seePerformance showpart).

Schematic diagram of the fine-arrangement model

3. Model inference optimization solution

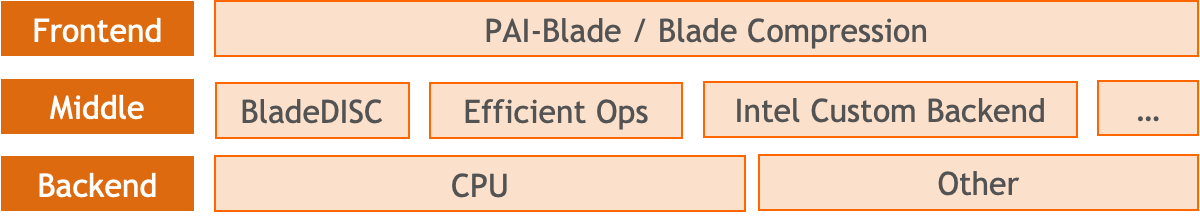

The Blade product launched by the PAI team of Alibaba Cloud’s machine learning platform supports all the optimization solutions mentioned above, provides a unified user interface, and has multiple software backends, such as high-performance operators, Intel Custom Backend, BladeDISC, and more.

Blade model inference optimization architecture diagram

3.1 Blade

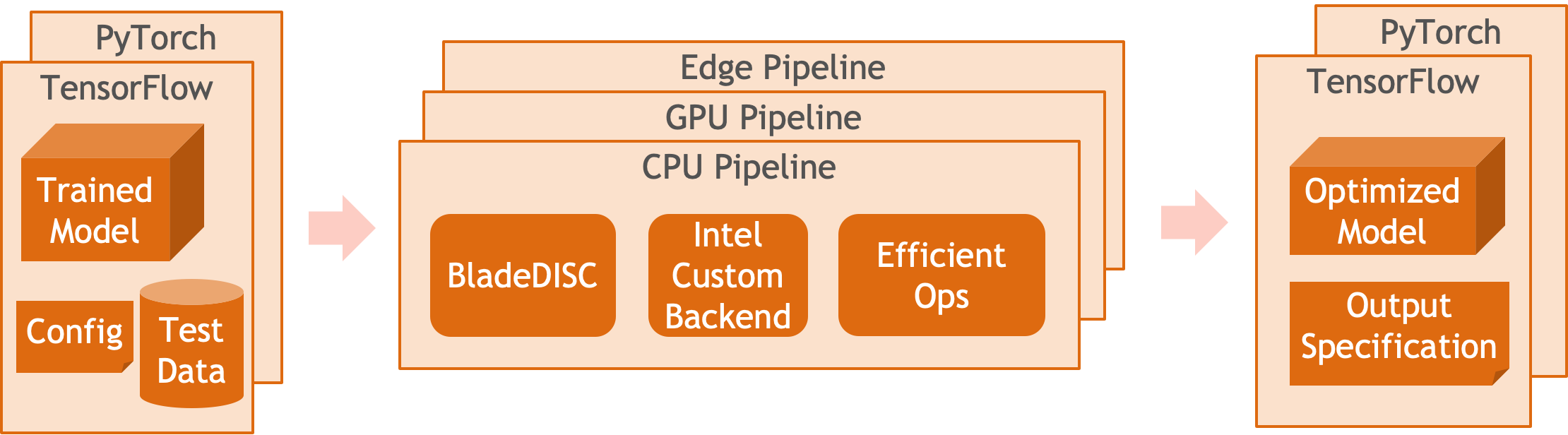

BladeIs the Alibaba Cloud machine learning PAI team (Platform of Artificial Intelligence) introduced a general inference optimization tool, which can be jointly optimized by the model system to make the model achieve the optimal inference performance. It organically integrates computational graph optimization, vendor optimization libraries such as Intel® oneDNN, BladeDISC compilation optimization, Blade high-performance operator library, Costom Backend, Blade mixed precision and other optimization methods. At the same time, the concise usage method lowers the threshold for model optimization and improves user experience and production efficiency.

PAI-Blade supports multiple input formats, including Tensorflow pb, PyTorch torchscript, etc. For the model to be optimized, PAI-BladeIt will be analyzed, and then a variety of possible optimization methods will be applied, and the most obvious acceleration effect will be selected from the various optimization results as the final optimization result.

Blade optimization diagram

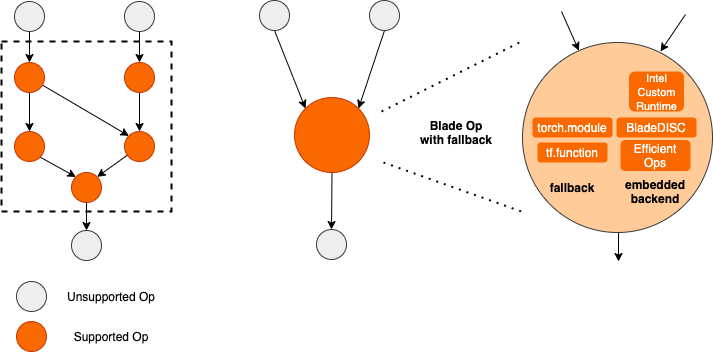

In order to obtain the maximum optimization effect on the premise of ensuring the success rate of deployment, PAI-Blade adopts the “circle graph” method for optimization, namely:

- Convert the part of the sub-computation graph to be optimized that can be supported by the inference backend/high-performance operator to the corresponding optimized sub-graph;

- Subgraphs that cannot be optimized fallback to the corresponding native framework (TF/Torch) for execution.

Blade circle diagram

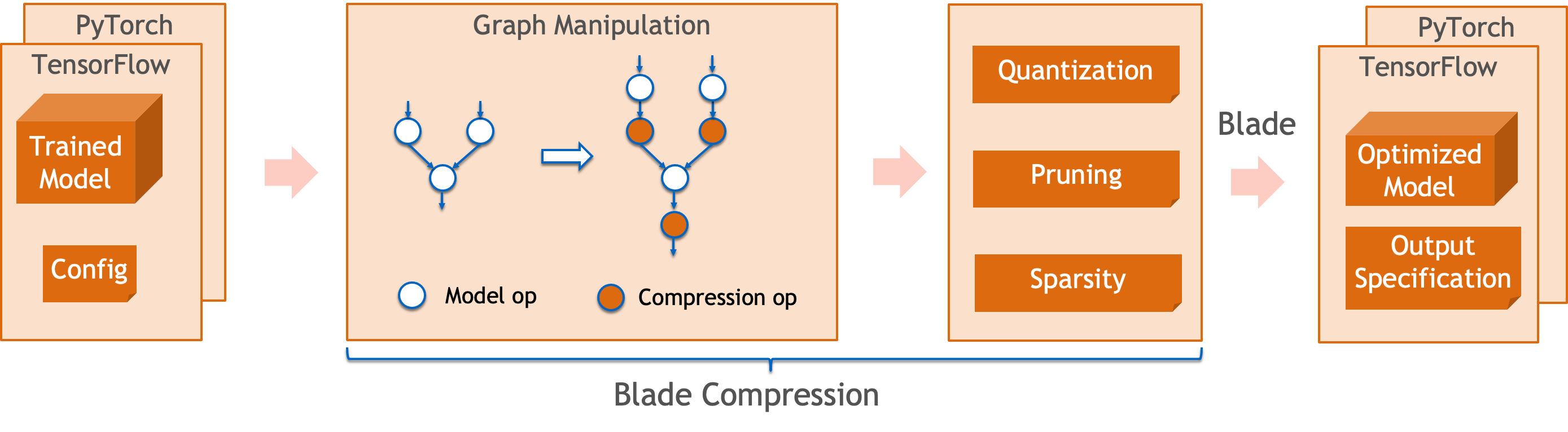

Blade CompressionIt is a model compression-oriented toolkit launched by Blade, which aims to assist developers in efficient model compression and optimization. It includes a variety of model compression functions, including quantization, pruning, sparsification, and more. The compressed model can be easily further optimized by Blade to obtain the ultimate optimization of model system federation.

In terms of quantization, Blade Compression:

- providedConcise use interfaceby calling a few simple APIs, you can complete the steps of quantization map change, calibration (calibration), quantization training (Quantization-aware Training, QAT), exporting quantization model and other steps.

- providedMultiple backend supportthrough the configuration of the config file, the quantification process for different devices and different backends can be completed.

- Integrate various algorithms developed by the PAI-Blade team in the actual production business to obtainHigher quantization accuracy.

At the same time, we provide a wealth of atomic capability APIs to facilitate customized development for specific situations.

Blade Compression schematic

BladeDISCIt is a machine learning scenario launched by the PAI team of Alibaba Cloud’s machine learning platform.Dynamic Shape Deep Learning Compiler, is one of the backends of the Blade. It supports mainstream front-end frameworks (TensorFlow, PyTorch) and back-end hardware (CPU, GPU), as well as inference and training optimization.

BladeDISC Architecture Diagram

3.2 Intel® Xeon®-basedhigh performance operator

The sub-networks in the neural network model usually have long-term versatility and universality, such as Linear Layer and Recurrent Layers in PyTorch, which are the basic modules of model construction and are responsible for specific functions. model, and these modules are also the target of the AI compiler’s key optimization. Accordingly, in order to obtain the basic modules with the best performance, so as to achieve the best performance model, Intel has carried out multi-level optimization on these basic modules for the X86 architecture, including enabling efficient AVX512 instructions, operator internal calculation scheduling, operator Fusion, cache optimization, parallel optimization, and more.

In the address standardization service, the Recurrent Neural Network (RNN) model often appears, and the module that most affects the performance of the RNN model is the module such as LSTM or GRU. This chapter takes LSTM as an example, which is presented when the input of variable length and multiple batches is used. , how to achieve the ultimate performance optimization of LSTM.

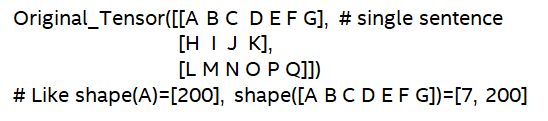

Usually, in order to meet the needs and requests of different users, cloud services that pursue high performance and low cost will batch different user requests to maximize the utilization of computing resources.As shown in the figure below, there are a total of 3 sentences that have been embedded, and the content and the length of the input are not the same.

raw input data

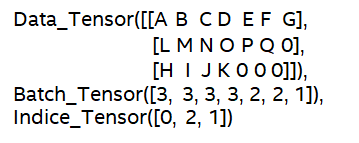

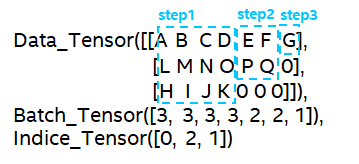

In order to make the LSTM calculation more efficient, it is necessary to use PyTorch’s pack_padded_sequence() function to padding and sort the Batched input. As shown in the figure below, a padded data tensor, a tensor describing the batch size of the data tensor, and a description data tensor The original ordinal tensor.

LSTM input data

So far, the input of LSTM has been prepared. The calculation process of LSTM is shown in the following figure. The input tensor is subjected to segmented batch calculation, and the zero-value calculation is skipped.

Computational steps of the LSTM for the input

The more in-depth calculation optimization of LSTM is shown in Figure 17 below. The matrix multiplication part in the formula is calculated and fused between the formulas. As shown in the figure below, the original 4 matrix multiplications are converted into 1 matrix multiplication, and the AVX512 instruction is used for numerical calculation. , and multi-threaded parallel optimization to achieve efficient LSTM operators. Among them, the numerical calculation refers to the elementwise element operation of matrix multiplication and post-order. For the matrix multiplication part, this scheme uses the oneDNN library for calculation, and the library has an efficient AVX512 GEMM implementation. For elementwise elementwise operation, this scheme The AVX512 instruction set is used for operator fusion, which improves the hit rate of data in the cache.

LSTM Computational Fusion[8]

3.3 Inference backend Custom Backend

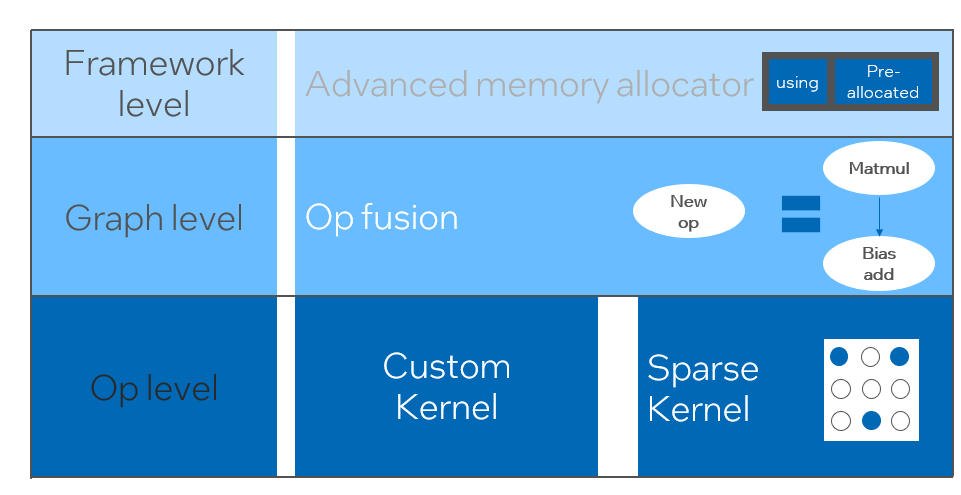

Intel custom backend[9]As the software backend of Blade, it strongly accelerates model quantization and sparse inference performance, mainlyThere are three levels of optimization.First, use the Primitive Cache strategy to optimize the memory, secondly, perform graph fusion optimization, and finally, at the operator level, realize the inclusion of sparsewith quantificationEfficient operator library including operators.

Intel Custom Backend Architecture Diagram

low precision quantization

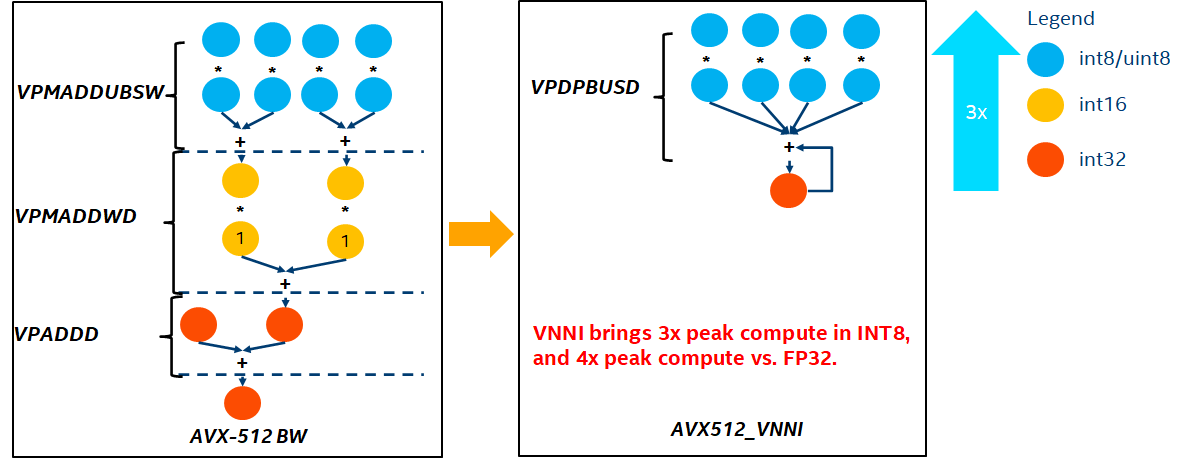

High-speed operators such as sparse and quantization, benefit fromIntel® DL BoostAccelerated instruction set, such as VNNI instruction set.

Introduction to VNNI Instructions

The above picture shows the VNNI instruction, 8bits can be accelerated by three AVX512 BW instructions, VPMADDUBSW first multiplies and adds 2 pairs of arrays composed of 8bits, Get 16bits data, VPMADDWD adds up adjacent data to get 32bits data, and finally VPADDD adds a constant, these three functions can form an AVX512_VNNI,This instruction can be used to speed up matrix multiplication in inference.

graph fusion

In addition, graph fusion is also provided in Custom Backend. For example, after matrix multiplication, the intermediate state temporary Tensor is not output, but the latter instruction is directly executed, that is, the post op of the latter item is fused with the former operator, thus reducing the data. Transfer to reduce the running time. The following figure is an example. After the operators in the red box are merged, additional data transfer can be eliminated and a new operator can be formed.

graph fusion

memory optimization

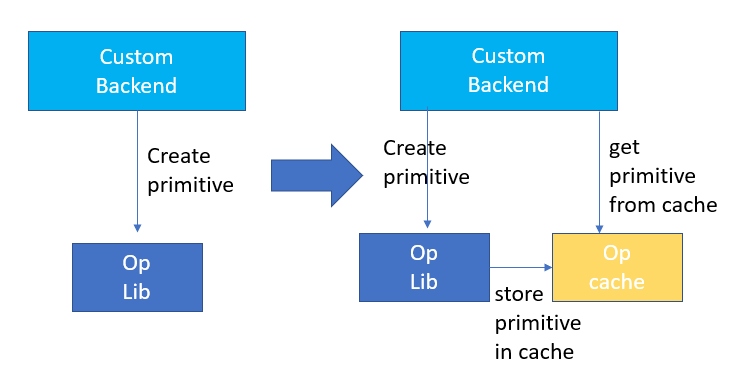

Memory allocation and release will communicate with the operating system, resulting in increased runtime delay. In order to reduce this part of the overhead, the design of Primitive Cache is added to Custom Backend. Primitive Cache is used to cache the Primitive that has been created, making Primitive Cache. Cannot be recycled by the system, reducing the creation overhead of the next call.

At the same time, a cache mechanism is established for operators that take a long time to speed up the operation of the operators, as shown in the following figure:

Primitive Cache

Quantization function As mentioned before, after the model size is reduced, the overhead of computation and access is greatly reduced, so the performance is greatly improved.

4. Overall performance display

We select two typical model structures in the address search service to verify the effect of the above optimization scheme. The test environment looks like this:

- Server model:Alibaba Cloud ecs.g7.large, 2 vCPU

- Test CPU model: Intel® Xeon® Platinum 8369B CPU @ 2.70GHz

- Test the number of CPU cores:1 vCPU

- PyTorch version: 1.9.0+cpu

- onnx version: 1.11.0

- onnxruntime version: 1.11.1

4.1 ESIM

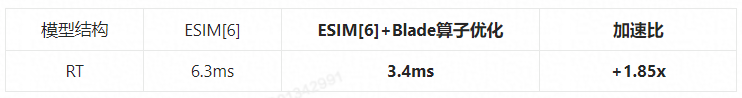

ESIM[6]YesAn enhanced version of LSTM designed for natural language inference, its inference overhead mainly comes from the LSTM structure in the model. Blade utilizationIntel Data Center Software TeamdevelopingHigh-performance general-purpose LSTM operatorSpeed it up, replace PyTorch The default LSTM in module (Baseline).The ESIM tested in this test contains two LSTM structuresthe performance before and after single-operator optimization is shown in the table:

Inference performance before and after LSTM single-operator optimization

Before and after optimization, the end-to-end inference speed of ESIM is shown in the table.Accuracy remains the same.

Inference performance before and after ESIM model optimization

4.2 BERT

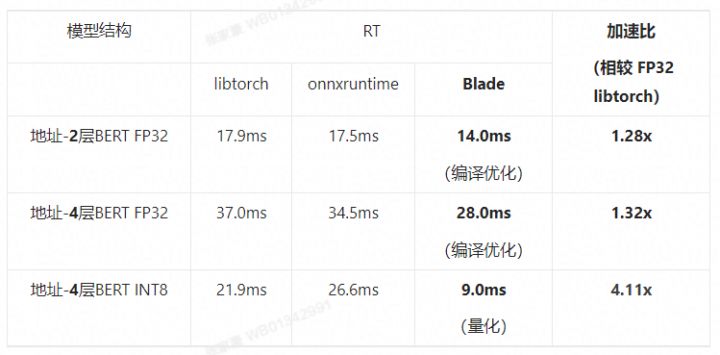

BERT[7]In recent years inNatural Language Processing (NLP) , computer vision (CV) and other fields are widely adopted. Blade has compiled and optimized (FP32), quantized (INT8) and other means for this structure.

In the speed test, the shape of the test data is fixed at 10×53, and the speed performance of various backends and various optimization methods is shown in the table below. It can be seen that the model inference speed after blade compilation optimization or INT8 quantization is better than libtorch and onnxruntime, where the backend of inference is Intel Custom Backend & BladeDisc. It is worth noting that the speed of the 4-layer BERT after quantitative acceleration is 1.5 times that of the 2-layer BERT, which means that the business can use a larger model and obtain better business accuracy while increasing the speed.

Address BERT Inference Performance Demonstration

In terms of accuracy, we are based on the CCKS2021 Chinese NLP address correlation task[5]Demonstrate the relevant model performance, as shown in the following table. The macro F1 accuracy of the 4-layer BERT developed by the DAMO Academy address team is higher than the standard 12-layer BERT-base. Blade compilation optimization can achieve lossless accuracy, and the accuracy of the real quantized model after Blade Compression quantization training is slightly higher than the original floating-point model.

Address BERT Correlation Accuracy Results

references

[1] https://help.aliyun.com/document_detail/205129.html

[2] https://www.aliyun.com/product/addresspurification/addrp

[3] Augmented SBERT: Data Augmentation Method for Improving Bi-Encoders for Pairwise Sentence Scoring Tasks (Thakur et al., NAACL 2021)

[4] Rethink Training of BERT Rerankers in Multi-stage Retrieval Pipeline (Gao et al., ECIR 2021)

[5] https://tianchi.aliyun.com/competition/entrance/531901/introduction

[6] Enhanced LSTM for natural language inference[J] (Chen Q, Zhu X, Ling Z, et al., ACL 2017)

[7] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (Devlin J, Chang MW, Lee K, et al., ACL 2019)

[8] https://pytorch.org/docs/stable/generated/torch.nn.LSTM.html

[9] https://github.com/intel/neural-compressor/commits/inc_with_engine

#Address #Standardization #Service #Deep #Learning #Model #Reasoning #Optimization #Practice #Alibaba #Cloud #Big #Data #Technology #Personal #Space #News Fast Delivery

Address Standardization Service AI Deep Learning Model Reasoning Optimization Practice – Alibaba Cloud Big Data AI Technology Personal Space – News Fast Delivery