vivo Internet Server Team - Wei Qianzi, Li Haoxuan

JNI has always played an indispensable role in Java, yet it is rarely used in day-to-day project development. After a lot of hard work, we finally applied JNI in a real project. This article briefly introduces JNI, its basic principles, and a performance analysis. By sharing our hands-on process, it walks readers through applying JNI in practice.

1. Background

In computation-intensive scenarios, Java has to spend extra effort optimizing away the overhead caused by GC. For low-level instruction optimization, C++, a language that sits close to the hardware, has clear advantages and a wealth of industry experience behind it. So, as a Java programmer of many years, can I run C++ code in a Java service? The answer is yes.

JNI (Java Native Interface) is the solution designed for exactly this scenario. Although JNI enables deep performance optimization, its cumbersome development process is a real headache for newcomers. This article walks through JNI development step by step and shares the results of our optimization and our thoughts on it.

Before we start, consider three questions:

Why choose to use JNI technology?

How to apply JNI technology in a Maven project?

Is JNI really useful?

2. About JNI: Why choose it?

2.1 Basic Concepts of JNI

JNI stands for Java Native Interface. Readers who like to read the JDK source code will notice that some method declarations in the JDK carry the native modifier and have no Java implementation. Their implementations are written in non-Java languages, which is JNI at work.
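A quick way to see this is to ask reflection whether a JDK method carries the native modifier. The sketch below (the class and helper names are our own, not from the project) checks System.currentTimeMillis(), which the JDK declares native, against String.length(), which is ordinary Java code:

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

public class NativeMethodCheck {

    // Returns true if the named public method of the given class is declared native.
    static boolean isNative(Class<?> type, String name, Class<?>... params) {
        try {
            Method m = type.getMethod(name, params);
            return Modifier.isNative(m.getModifiers());
        } catch (NoSuchMethodException e) {
            throw new IllegalArgumentException("no public method " + name + " on " + type.getName(), e);
        }
    }

    public static void main(String[] args) {
        System.out.println(isNative(System.class, "currentTimeMillis")); // true: implemented in native code
        System.out.println(isNative(String.class, "length"));            // false: plain Java
    }
}
```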

Native methods date back to JDK 1.0, and JNI in its current form was introduced in JDK 1.1. The official definition of JNI is:

Java Native Interface (JNI) is a standard programming interface for writing Java native methods and embedding the Java virtual machine into native applications. The primary goal is binary compatibility of native method libraries across all Java virtual machine implementations on a given platform.


JNI was originally designed as a set of interface conventions guaranteeing the binary compatibility of native libraries across JVM implementations. Because Java was born so early, JNI is mostly used to call C/C++ code and system libraries, and its support for other languages is relatively limited. Over time, JNI has drawn more and more attention from developers, and tools such as Android's NDK and the open-source JNA (Java Native Access) library build on it to make the technology easier to use.

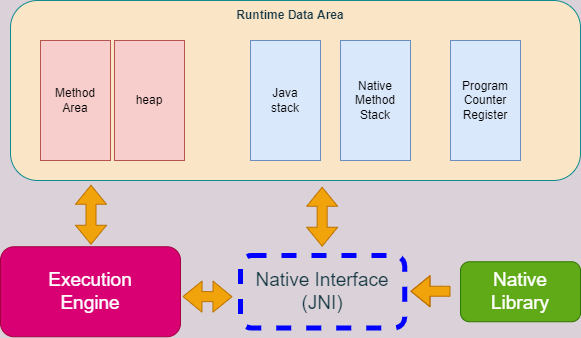

Let's look at the JNI-related modules in the JVM, as shown in Figure 1:

Figure 1 – JVM memory and engine execution relationship

In the JVM's memory layout, the Native Interface is an important link between the execution engine and the runtime data areas. Through JNI, native methods are managed in the native method stack, and the native method library is loaded when the execution engine runs them.

JNI is like breaking the JVM's shackles: native code has the same power as the JVM itself. It can use the processor's registers directly and access the JVM's runtime data, which also means it can cause trouble such as a heap memory overflow or two 🙂

2.2 Functions of JNI

JNI is powerful, so what can it do? The official documentation suggests using it in situations like these:

The standard Java class library does not support platform-dependent features required by applications.

You already have a library written in another language and want to make it accessible to Java code through JNI.

You want to implement a small amount of time-consuming code in a lower-level language such as assembly.

Beyond these, there are further uses, such as:

Keeping code from being decompiled;

Using system libraries or existing native libraries;

Using a faster language for heavy computation;

Frequently operating on images or local files;

Calling system driver interfaces.

There are surely other scenarios as well; JNI clearly has a lot of application potential.

3. JNI combat: the whole process and the pits we stepped in

Our business has a computation-intensive scenario in which we load data files locally for model inference. After several rounds of optimizing the Java version, the team saw no major progress: inference took a long time, and performance jittered while the model was loading. Our investigation suggested that to further speed up computation and file loading, we could write a C++ library with JNI and call it through Java native methods, which we expected to bring a real improvement.

However, the team had no hands-on experience with JNI, so whether performance would actually improve was still an open question. In the spirit of a newborn calf that does not fear the tiger, I summoned the courage to volunteer for this optimization task. Below I share the process of putting JNI into practice and the problems I ran into, in the hope of giving you some ideas.

3.1 Scene preparation

Rather than starting with Hello World, we go straight to our real scenario and decide which part of the logic to implement in C++.

The scenario is as follows:

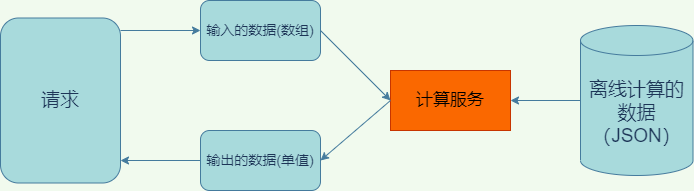

Figure 2 Actual combat scene

In the computing service, we convert offline computed data into a map structure, look up a set of input keys in the map, and apply the algorithm's formula to compute a value. Analyzing the JVM stack information and flame graphs showed that the bottleneck lay mainly in a large number of logistic regression operations and in GC. Because the large Map structure is cached, it occupies a lot of heap memory, so GC Mark-and-Sweep takes a long time. We therefore decided to turn two operations, file loading and logistic regression, into native methods.

The native methods are declared as follows:
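For a concrete picture of the Java baseline, the sketch below shows one plausible shape of this computation: a cached Map of boxed feature weights and a logistic regression score over the looked-up keys. The class LrScorer, the bias term, and treating missing keys as zero are illustrative assumptions, not the project's actual formula. Every cached entry is a boxed Long/Float pair on the heap, which is exactly the kind of large, long-lived object graph that makes GC marking expensive.

```java
import java.util.HashMap;
import java.util.Map;

public class LrScorer {

    private final Map<Long, Float> weights; // feature key -> weight, boxed as in a typical Java cache
    private final float bias;

    LrScorer(Map<Long, Float> weights, float bias) {
        this.weights = weights;
        this.bias = bias;
    }

    // Logistic regression: sigmoid(bias + sum of weights of the given keys); unknown keys contribute 0.
    float compute(long[] keys) {
        double z = bias;
        for (long key : keys) {
            Float w = weights.get(key);
            if (w != null) {
                z += w;
            }
        }
        return (float) (1.0 / (1.0 + Math.exp(-z)));
    }

    public static void main(String[] args) {
        Map<Long, Float> w = new HashMap<>();
        w.put(1L, 0.5f);
        w.put(2L, -0.5f);
        System.out.println(new LrScorer(w, 0f).compute(new long[] {1, 2, 3})); // 0.5
    }
}
```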

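```java
// Loads and initializes the model in C++ and returns the address of the C++ object
public static native long loadModel(String path);
// Frees the C++ object behind the given address
public static native void close(long ptr);
// Runs the computation for the given keys on the model behind the given address
public static native float compute(long ptr, long[] keys);
```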

So why do we pass pointers and design a close method?

Compatibility with the existing implementation: although the entire computation runs in the C++ runtime, object lifecycle is managed in Java. So we return a pointer to the loaded and initialized model object and simply pass that pointer on every evaluation;

Correct memory release: relying on Java's own GC and the existing model-manager code, we explicitly call the close method when a model is unloaded, releasing the memory managed by the C++ runtime and preventing memory leaks.

Of course, this design only applies when the lib needs to keep data cached in memory between calls. If the native method is used purely for computation, none of this is needed.
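The pointer-plus-close design puts the Java side in charge of the C++ object's lifetime. The sketch below illustrates that ownership pattern under stated assumptions: the ModelBackend interface is a hypothetical stand-in for the three static native methods, and FakeBackend is a pure-Java fake that hands out fake "pointers", so the example runs without any native library. The point it shows is that close() should release the native object exactly once, even if it is called twice.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicLong;

public class HandleLifecycleDemo {

    // Hypothetical stand-in for the three static native methods of ModelComputer.
    interface ModelBackend {
        long loadModel(String path);
        void close(long ptr);
        float compute(long ptr, long[] keys);
    }

    // Pure-Java fake: hands out fake "pointers" and tracks which ones are still allocated.
    static final class FakeBackend implements ModelBackend {
        final Map<Long, String> live = new HashMap<>();
        private long next = 1; // 0 is never handed out, so it can mean "no model"

        public synchronized long loadModel(String path) {
            long ptr = next++;
            live.put(ptr, path);
            return ptr;
        }

        public synchronized void close(long ptr) {
            if (live.remove(ptr) == null) {
                throw new IllegalStateException("double free of " + ptr);
            }
        }

        public synchronized float compute(long ptr, long[] keys) {
            if (!live.containsKey(ptr)) {
                throw new IllegalStateException("use after free of " + ptr);
            }
            return keys.length; // placeholder for real inference
        }
    }

    // Java-side owner of one native model object.
    static final class ModelHandle implements AutoCloseable {
        private final ModelBackend backend;
        private final AtomicLong ptr; // 0 once released

        ModelHandle(ModelBackend backend, String path) {
            this.backend = backend;
            this.ptr = new AtomicLong(backend.loadModel(path));
        }

        float compute(long[] keys) {
            long p = ptr.get();
            if (p == 0) {
                throw new IllegalStateException("model already closed");
            }
            // Note: this does not stop another thread from closing the model mid-call;
            // that would need reference counting or a read-write lock.
            return backend.compute(p, keys);
        }

        @Override
        public void close() {
            // getAndSet makes close() idempotent: only the first caller frees the native object.
            long p = ptr.getAndSet(0);
            if (p != 0) {
                backend.close(p);
            }
        }
    }

    public static void main(String[] args) {
        FakeBackend backend = new FakeBackend();
        try (ModelHandle model = new ModelHandle(backend, "/data/model.bin")) {
            System.out.println(model.compute(new long[] {1, 2, 3})); // 3.0
        }
        System.out.println(backend.live.isEmpty()); // true: the fake "native" object was freed
    }
}
```

In a real service, the model manager would call close() on the old handle when it swaps in a new model; the comment in compute() marks where concurrent callers would need extra protection.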

3.2 Environment Construction

Below is a brief introduction to the environment and project structure we used. We do not go into much detail here; if you have questions, see the references at the end of the article or search online.

We use a simple Maven project, compiled and deployed in a Docker ubuntu-20.04 container with GCC, Bazel, Maven, OpenJDK 8, and so on installed. If you develop on Windows, you can install the corresponding tools and compile the library into a .dll file instead; the effect is the same.

The Maven project directory is laid out as follows:

```
/src                # main directory
  /main
    /cpp            # C++ source directory
    /java           # Java source directory
    /resources      # resource directory holding the compiled lib
  /test
    /java
pom.xml             # Maven pom
```

3.3 The actual combat process

With everything ready, let's get straight to the code:

```java
package com.vivo.demo.model;

import java.io.*;

public class ModelComputer implements Closeable {
    static {
        loadPath("export_jni_lib");
    }

    // Address of the C++ model object; null once the model has been closed
    private Long ptr;

    public ModelComputer(String path) {
        ptr = loadModel(path);
    }

    public static void loadPath(String name) {
        // Pick the platform-specific library suffix
        String osName = System.getProperty("os.name").toLowerCase();
        String fileName = name + (osName.contains("windows") ? ".dll" : ".so");

        // During development the library sits in src/main/resources; java.io.File accepts '/' on every OS
        File file = new File(new File(System.getProperty("user.dir"), "src/main/resources"), fileName);
        if (file.isFile()) {
            // System.load() needs the absolute path, including the file extension
            System.load(file.getAbsolutePath());
            return;
        }

        // When deployed, the library is packed inside the jar: copy it to a temp file, then load that
        String prefix = fileName.substring(0, fileName.lastIndexOf('.'));
        String suffix = fileName.substring(fileName.lastIndexOf('.'));
        try (InputStream in = ModelComputer.class.getResourceAsStream("/" + fileName)) {
            if (in == null) {
                throw new FileNotFoundException("native library not found on classpath: " + fileName);
            }
            File tmp = File.createTempFile(prefix, suffix);
            tmp.deleteOnExit();
            try (OutputStream out = new FileOutputStream(tmp)) {
                byte[] buff = new byte[1024];
                int len;
                while ((len = in.read(buff)) != -1) {
                    out.write(buff, 0, len);
                }
            }
            System.load(tmp.getAbsolutePath());
        } catch (IOException e) {
            throw new RuntimeException("failed to load native library " + fileName, e);
        }
    }

    public static native long loadModel(String path);

    public static native void close(long ptr);

    public static native float compute(long ptr, long[] keys);

    @Override
    public void close() {
        Long tmp = ptr;
        ptr = null;
        if (tmp != null) { // a second close() would otherwise unbox null and throw NullPointerException
            close(tmp);
        }
    }

    public float compute(long[] keys) {
        if (ptr == null) {
            throw new IllegalStateException("model already closed");
        }
        return compute(ptr, keys);
    }
}
```

Stepping on pit 1: java.lang.UnsatisfiedLinkError exception during startup

The lib file is packed inside the jar, but System.load() can only load a real file on disk. The fix is to read the library out of the jar through an InputStream, write it to a temporary file, and load that file's absolute path; the loadPath method above is one example of this solution. Note that System.load() must be given the full path of the file, including its extension, whereas System.loadLibrary() takes only the bare library name, without a prefix or suffix, and searches the directories listed in java.library.path.
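To see what System.loadLibrary() would actually look for, the JDK's System.mapLibraryName() turns a bare library name into the platform-specific file name. This small sketch (the class and method names are ours; export_jni_lib is the name used in loadPath) prints that file name and the java.library.path directories that would be searched. On Linux the name becomes libexport_jni_lib.so and on Windows export_jni_lib.dll, so a library meant for loadLibrary() on Linux must carry the lib prefix.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

public class LibraryNameDemo {

    // File name that System.loadLibrary(name) searches for on the current platform
    static String platformFileName(String name) {
        return System.mapLibraryName(name);
    }

    // Directories listed in the java.library.path property, skipping empty entries
    static List<String> searchPath() {
        List<String> dirs = new ArrayList<>();
        for (String dir : System.getProperty("java.library.path", "").split(File.pathSeparator)) {
            if (!dir.isEmpty()) {
                dirs.add(dir);
            }
        }
        return dirs;
    }

    public static void main(String[] args) {
        String fileName = platformFileName("export_jni_lib");
        System.out.println("System.loadLibrary(\"export_jni_lib\") looks for " + fileName);
        for (String dir : searchPath()) {
            System.out.println("  " + new File(dir, fileName).getAbsolutePath());
        }
    }
}
```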

After compiling the Java code above, the corresponding C++ header can be generated with the javah tool; the export_jni.h header in the cpp directory is produced this way:

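javah -jni -encoding utf-8 -classpath CLASSES_DIR -o ../cpp/export_jni.h com.vivo.demo.model.ModelComputer

Here CLASSES_DIR stands for the directory containing the compiled ModelComputer.class (for a Maven build, typically target/classes); the fully qualified class name comes last, after the options.

Opening the generated header shows the following declarations: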

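```cpp
#include <jni.h>

extern "C" {

/* Method: loadModel    Signature: (Ljava/lang/String;)J */
JNIEXPORT jlong JNICALL Java_com_vivo_demo_model_ModelComputer_loadModel(JNIEnv *, jclass, jstring);

/* Method: close        Signature: (J)V */
JNIEXPORT void JNICALL Java_com_vivo_demo_model_ModelComputer_close(JNIEnv *, jclass, jlong);

/* Method: compute      Signature: (J[J)F */
JNIEXPORT jfloat JNICALL Java_com_vivo_demo_model_ModelComputer_compute(JNIEnv *, jclass, jlong, jlongArray);

}
```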

Stepping on pit 2: Javah fails to run

If generation fails, you can write the header by hand following the JNI format shown above; as long as the format is correct, the result is the same. Note also that javah was removed in JDK 10; on newer JDKs, javac -h followed by an output directory generates the header while compiling. jni.h lives in the JDK's include directory and defines the JNI types, return codes, exceptions, the JavaVM structure, and a number of functions (type conversion, field access, JVM information, and so on). jni.h in turn depends on jni_md.h, found in the platform subdirectory (include/linux or include/win32), which defines jbyte, jint, and jlong, because their definitions differ from platform to platform. Both directories must be on the compiler's include path.

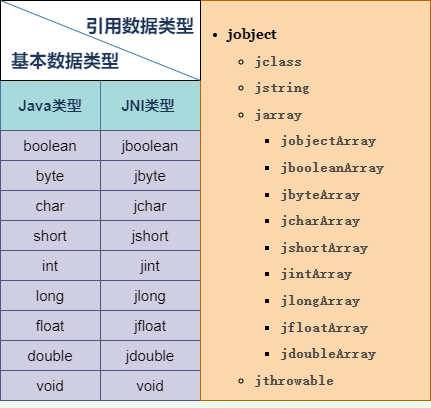

Let's look at how common JNI data types correspond to Java types:

Figure 3 JNI common data types

As shown in Figure 3, JNI defines primitive and reference data types that carry data between Java and C++. JNIEnv is an interface pointer to thread-local data. In general, data conversion between Java and C++ goes through the functions in JNIEnv, and through it C++ can access Java's heap memory.

Primitive types are passed by value and can simply be cast; apart from the names, they are essentially the same as Java's primitive types.

For reference types, JNI defines jobject, which means Java can pass any object to C++ by reference. Arrays and strings are reference types too. If C++ accesses them by reference, it has to reach into Java's heap through the functions in JNIEnv; although we need not care about the details, this costs more than manipulating data through plain C++ pointers. JNI therefore provides functions that copy array and string contents into native memory (a buffer), which speeds up access and reduces interference with the GC. The downside is that these buffers must be obtained and released through the functions JNI provides.
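The correspondence in Figure 3 can also be written down as code. The sketch below is our own helper, modeled on the type mapping javah applies, and returns the JNI C type used for a Java parameter or return type. For compute(long, long[]) returning float it yields jfloat, jlong, and jlongArray, matching the generated header.

```java
import java.util.HashMap;
import java.util.Map;

public class JniTypes {

    // Java primitive type -> JNI C type, per the JNI specification
    private static final Map<Class<?>, String> PRIMITIVES = new HashMap<>();

    static {
        PRIMITIVES.put(boolean.class, "jboolean"); // unsigned 8 bits
        PRIMITIVES.put(byte.class, "jbyte");       // signed 8 bits
        PRIMITIVES.put(char.class, "jchar");       // unsigned 16 bits
        PRIMITIVES.put(short.class, "jshort");     // signed 16 bits
        PRIMITIVES.put(int.class, "jint");         // signed 32 bits
        PRIMITIVES.put(long.class, "jlong");       // signed 64 bits
        PRIMITIVES.put(float.class, "jfloat");     // 32-bit IEEE 754
        PRIMITIVES.put(double.class, "jdouble");   // 64-bit IEEE 754
        PRIMITIVES.put(void.class, "void");
    }

    // JNI C type used for a native method parameter or return value of Java type t
    static String cType(Class<?> t) {
        if (t.isPrimitive()) {
            return PRIMITIVES.get(t);
        }
        if (t == String.class) {
            return "jstring";
        }
        if (Throwable.class.isAssignableFrom(t)) {
            return "jthrowable";
        }
        if (t == Class.class) {
            return "jclass";
        }
        if (t.isArray()) {
            Class<?> c = t.getComponentType();
            // e.g. long[] -> jlongArray; arrays of objects or nested arrays -> jobjectArray
            return c.isPrimitive() ? "j" + c.getName() + "Array" : "jobjectArray";
        }
        return "jobject";
    }

    public static void main(String[] args) {
        // compute(long ptr, long[] keys) returning float, as declared in ModelComputer
        System.out.println(cType(float.class) + " (" + cType(long.class) + ", " + cType(long[].class) + ")");
        // prints: jfloat (jlong, jlongArray)
    }
}
```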

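```
// Three groups of functions are available, where <Type> is a primitive type such as Int or Long.
// The last two groups come in Get/Release pairs; Release tells the JVM the native code is done with the buffer.

// Copies a range of elements into (Get) or from (Set) a caller-supplied C buffer.
// The buffer can live on the C stack, so this suits small arrays; Set writes the buffer back into the Java array.
Get<Type>ArrayRegion
Set<Type>ArrayRegion

// Returns a pointer to the array elements for C++ to use; the JVM may hand out a copy.
Get<Type>ArrayElements
Release<Type>ArrayElements

// More likely to return a direct pointer into the JVM heap, otherwise a copy as with Get<Type>ArrayElements.
// Between Get and Release, native code must not call other JNI functions or block.
GetPrimitiveArrayCritical
ReleasePrimitiveArrayCritical
```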

These three groups of functions cover the basics of JNI data conversion. If C++ does not need to create or modify Java objects, writing the C++ code is no different from ordinary C++ development. Below is the C++ implementation of the functions declared in export_jni.h.

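```cpp
#include "export_jni.h"
// The header declaring vivo::Computer is project-specific and not shown here.

extern "C" {

JNIEXPORT jlong JNICALL Java_com_vivo_demo_model_ModelComputer_loadModel(JNIEnv* env, jclass clazz, jstring path) {
    const char* cpath = env->GetStringUTFChars(path, nullptr);
    if (cpath == nullptr) {
        return 0;  // an OutOfMemoryError is already pending in the JVM
    }
    vivo::Computer* ptr = new vivo::Computer();
    ptr->init_model(cpath);
    env->ReleaseStringUTFChars(path, cpath);
    // Return the address as a jlong: a plain long is only 32 bits on 64-bit Windows
    return reinterpret_cast<jlong>(ptr);
}

JNIEXPORT void JNICALL Java_com_vivo_demo_model_ModelComputer_close(JNIEnv* env, jclass clazz, jlong ptr) {
    // Free the C++ object created in loadModel
    delete reinterpret_cast<vivo::Computer*>(ptr);
}

JNIEXPORT jfloat JNICALL Java_com_vivo_demo_model_ModelComputer_compute(JNIEnv* env, jclass clazz, jlong ptr, jlongArray array) {
    jlong* idx_ptr = env->GetLongArrayElements(array, nullptr);
    if (idx_ptr == nullptr) {
        return 0.0f;  // an OutOfMemoryError is already pending in the JVM
    }
    vivo::Computer* computer = reinterpret_cast<vivo::Computer*>(ptr);
    // compute() takes long*; on 64-bit Linux jlong is defined as long
    float result = computer->compute(reinterpret_cast<long*>(idx_ptr));
    // The keys are only read, so JNI_ABORT frees the buffer without copying it back
    env->ReleaseLongArrayElements(array, idx_ptr, JNI_ABORT);
    return result;
}

}
```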

After compiling the C++ code, put the lib file in the designated location in the resources directory. For convenience, you can write a shell script that does all of this in one step.

Stepping on pit 3: java.lang.UnsatisfiedLinkError when the server starts

This exception again. One cause and its fix were covered earlier, but in practice it still shows up often, for example:

The runtime environment does not match (a library compiled on Linux cannot be loaded on Windows);

The bitness of the JVM does not match the bitness of the lib (for example, one is 32-bit and the other 64-bit);

The C++ function name does not match the name JNI expects;

The generated lib file does not contain the corresponding function.

Each of these can be solved by carefully analyzing the exception log, and there are tools that help.
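A mismatched C++ function name, one of the causes listed above, is easy to rule out by computing the name the JVM expects. The JNI specification builds it from Java_, the fully qualified class name, an underscore, and the method name, escaping _ as _1, ; as _2, [ as _3, and any other character that is not an ASCII letter or digit as _0 followed by four lowercase hex digits. The sketch below is our own helper and covers only this short name (overloaded native methods additionally get a long name that appends two underscores and the mangled argument signature); it reproduces the names javah generated for ModelComputer.

```java
public class JniNames {

    // Escapes one name component according to the JNI short-name mangling rules.
    static String mangle(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            if (c == '.' || c == '/') {
                sb.append('_');                 // package separator
            } else if (c == '_') {
                sb.append("_1");
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if (c < 128 && Character.isLetterOrDigit(c)) {
                sb.append(c);                   // ASCII letters and digits pass through
            } else {
                sb.append(String.format("_0%04x", (int) c)); // e.g. '$' of a nested class -> _00024
            }
        }
        return sb.toString();
    }

    // Name of the C function the JVM looks up for a non-overloaded native method.
    static String shortName(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }

    public static void main(String[] args) {
        System.out.println(shortName("com.vivo.demo.model.ModelComputer", "loadModel"));
        // prints: Java_com_vivo_demo_model_ModelComputer_loadModel
    }
}
```

Comparing this output with the symbols that dumpbin or objdump report for the library quickly shows whether the name is the problem.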

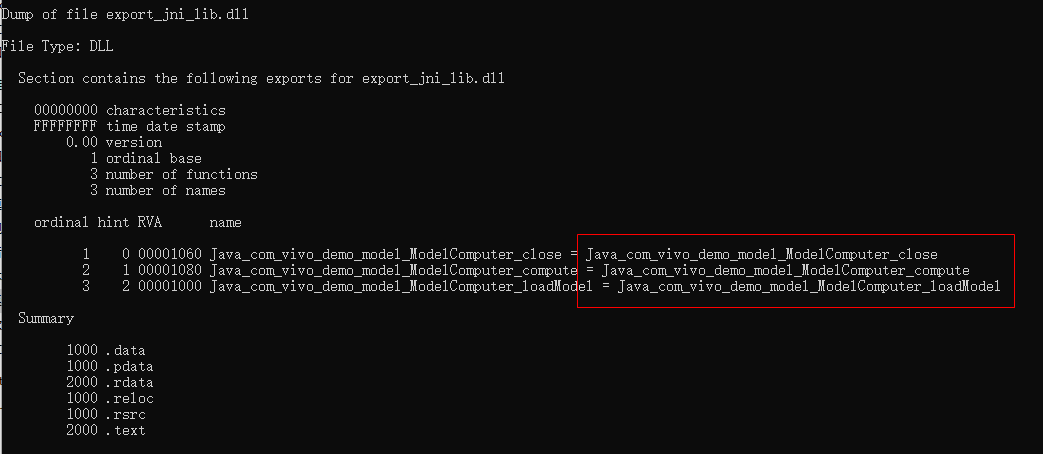

Using dumpbin/objdump to inspect the lib and resolve UnsatisfiedLinkError faster

Each operating system provides its own tools for checking which functions a library contains.

On Windows, you can use dumpbin or Dependency Walker to check whether the C++ functions you wrote actually exist in the lib. The dumpbin command is:

dumpbin /EXPORTS xxx.dll

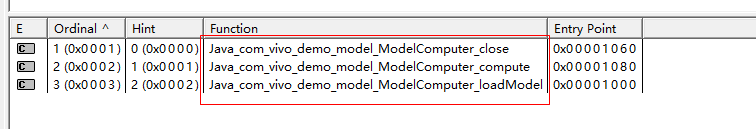

Figure 4 dumpbin to view the dll file

With Dependency Walker, simply open the dll file to see the same information.

Figure 5 Dependency Walker viewing the dll file

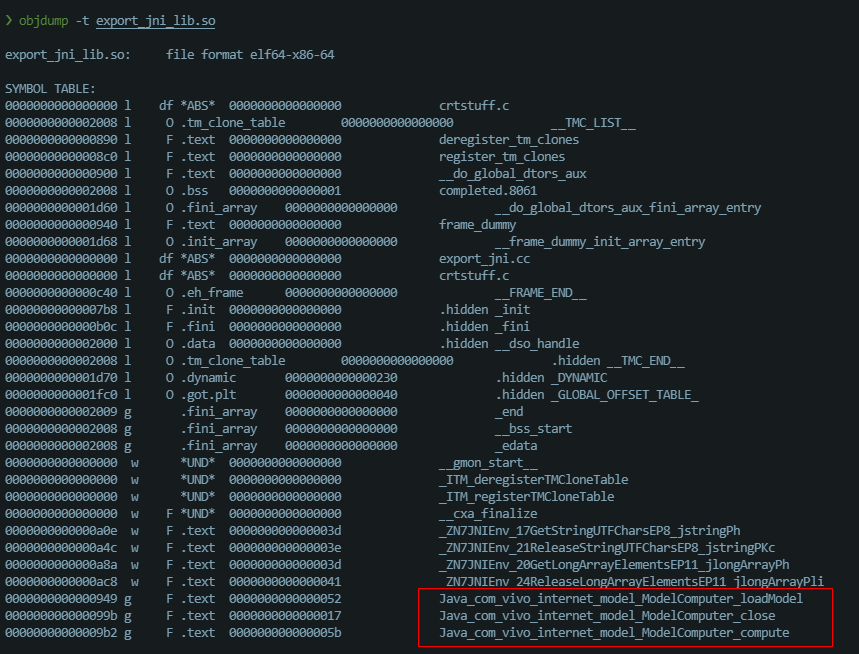

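On Linux, you can use objdump to inspect the information in a .so file. The command is:

objdump -t xxx.so

If the library has been stripped, objdump -T xxx.so lists the dynamic symbol table instead, which holds the exported functions the JVM actually resolves against.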

Figure 6 objdump to view the so file

3.4 Performance Analysis

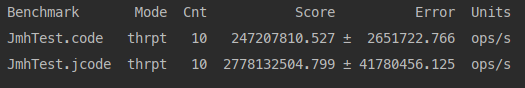

Earlier research told us that calling a native method from Java carries extra overhead, so we ran a simple JMH test to measure it. Figure 7 compares calling an empty JNI method with calling an empty Java method:

Figure 7 – Comparison of empty function calls (data from personal machine JMH test, for reference only)

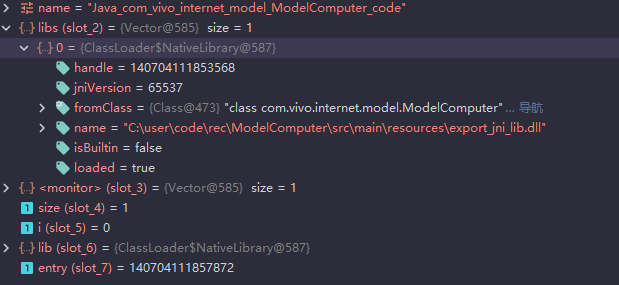

Here, JmhTest.code calls an empty native method and JmhTest.jcode calls an empty Java method. Calling the Java method directly is about ten times faster than calling the native method. A quick analysis of the call stack shows that a native call takes a more involved path than a plain Java call and goes through the findNative method of ClassLoader:

```java
// Excerpt from java.lang.ClassLoader (JDK 8)
// Invoked in the VM class linking code.
static long findNative(ClassLoader loader, String name) {
    Vector<NativeLibrary> libs =
        loader != null ? loader.nativeLibraries : systemNativeLibraries;
    synchronized (libs) {
        int size = libs.size();
        for (int i = 0; i < size; i++) {
            NativeLibrary lib = libs.elementAt(i);
            long entry = lib.find(name);
            if (entry != 0)
                return entry;
        }
    }
    return 0;
}
```

The stack information is as follows:

Figure 8 Calling native stack information

The find method is itself native, so no further frames show up in the stack, but it is clear that calling a function in the lib through find requires at least one round of lookup to locate the corresponding C++ function before it can run and return its result. Looking back at Figure 1, such a call connects the native method stack, the virtual machine stack, NativeLibrary, and the execution engine through the Native Interface, so the logic is necessarily more involved and the call takes longer. Note that HotSpot performs this lookup only the first time a native method is invoked and then caches the resolved address; the recurring per-call cost comes mainly from the Java-to-native transition itself, and the JIT cannot inline native methods.

After all this work, let's not forget our goal: faster computation and faster loading. After the optimizations above, we ran a full-link stress test in the stress-testing environment and found that, despite the extra overhead of native calls, full-link performance still improved significantly.
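In HotSpot, this lookup is lazy: the JVM searches the loaded libraries for a native method's C function the first time the method is invoked (unless the library registered it up front with RegisterNatives). That is also why a missing or misnamed C function surfaces as UnsatisfiedLinkError at the first call rather than when the class loads. The minimal sketch below uses a hypothetical native method missing() that no library implements, so it demonstrates this without any native code:

```java
public class LazyLinkDemo {

    // Declared native, but no loaded library exports a matching C function.
    static native void missing();

    // Returns true if calling the unresolved native method fails with UnsatisfiedLinkError.
    static boolean firstCallFails() {
        try {
            missing(); // the JVM looks up the C function only now, on the first call
            return false;
        } catch (UnsatisfiedLinkError e) {
            System.out.println("UnsatisfiedLinkError: " + e.getMessage());
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("class loaded and initialized without error");
        System.out.println(firstCallFails());
    }
}
```

The exact error message differs between JDK versions, so the sketch prints it rather than relying on its wording.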

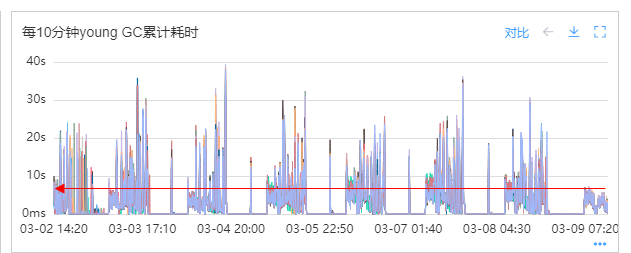

Our service reduces the core computing time of model inference by 80%, the time required for loading and parsing model files by 60% (minutes to seconds), and the average GC time by 30%. The benefits are very obvious.

Figure 9 Young GC time comparison

4. Thinking and summary: the benefits of JNI

Successfully applying JNI in these specific scenarios broadened our thinking about optimization: when repeated optimization attempts in Java stop making progress, JNI is well worth a try.

Back to the original question: is JNI really useful? My answer is that it is not that easy to use. For an engineer with little C++ experience, just building the environment and compiling takes a lot of time, and the subsequent code maintenance and C++ tuning are real headaches. Still, I highly recommend understanding this technology and its applications, and thinking about how it could improve your service's performance.

Maybe one day, JNI will come in handy for you!

References:


This article is shared from the WeChat public account – vivo Internet Technology (vivoVMIC).


This article participates in the “OSC Yuanchuang Program”, and you are welcome to join and share with us.


JNI combat in intensive computing scenarios – vivo Internet Technology – News Fast Delivery