The official release of Java 19 brings a feature that Java developers have wanted for a long time: virtual threads. Long before Java had them, Go's coroutines (goroutines) were already popular and widely regarded as one of Go's greatest strengths in concurrent programming. As Go adoption has grown rapidly in China, coroutines have come to be seen as an essential feature of any top-tier language, and Java 19 virtual threads fill that gap. This article introduces Java 19 virtual threads and compares them with Go coroutines.

Key points of this article:

- Java threading model

- Performance comparison between platform threads and virtual threads

- Java virtual thread vs Go coroutine

- How to use virtual threads

Java threading model

Java threads and virtual threads

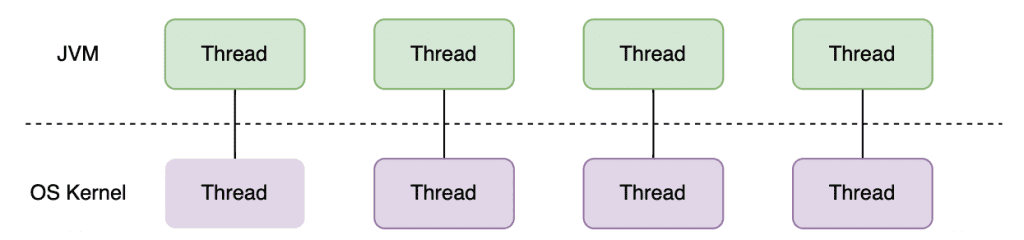

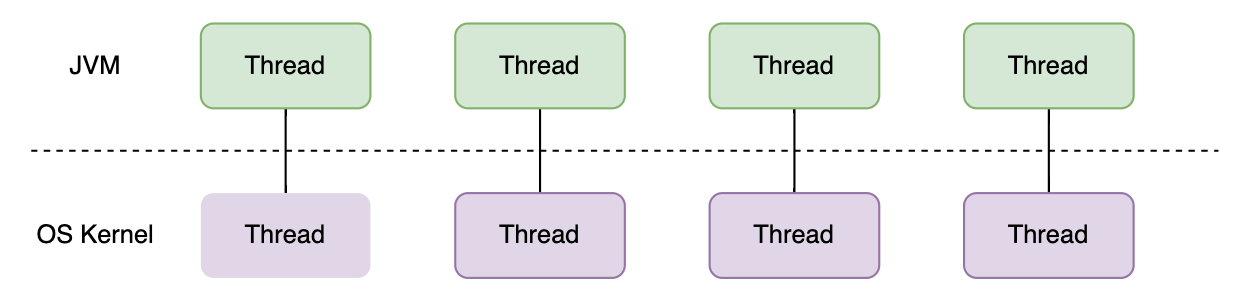

The Java threads we commonly use map one-to-one to operating system kernel threads, and the kernel's thread scheduler is responsible for scheduling them. To improve application performance we tend to add more and more Java threads, but the more threads there are, the more resources the system spends on thread context switching.

For decades, we have relied on this multithreading model to solve concurrency problems in Java. To increase system throughput we keep increasing the number of threads, but OS threads are expensive and their number is limited. Even when we use thread pools to get the most out of each thread, threads often become the bottleneck before CPU, network, or memory resources are exhausted, so the application cannot make full use of the hardware.

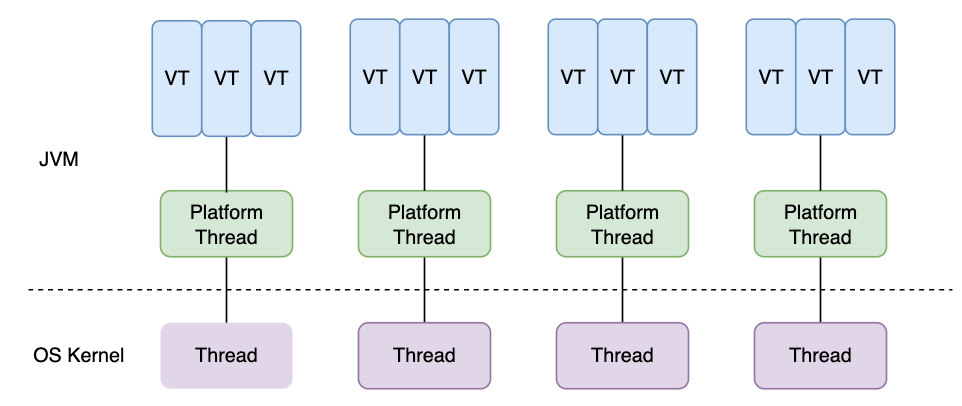

To solve this problem, Java 19 introduces virtual threads. In Java 19, the threads we used before are called platform threads, and they still map one-to-one to kernel threads. A large number (M) of virtual threads run on a smaller number (N) of platform threads (M:N scheduling). The JVM schedules multiple virtual threads onto a given platform thread, and a platform thread executes only one virtual thread at a time.
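To make the M:N idea concrete, here is a minimal sketch. It assumes JDK 21 or later, where virtual threads are a final feature (on JDK 19 the --enable-preview flag is needed). The class name ManyVirtualThreads and the helper runAll are made up for illustration. It starts far more virtual threads than a typical machine has cores and waits for all of them to finish.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

public class ManyVirtualThreads {

    // Starts `count` virtual threads that each sleep briefly,
    // then returns how many of them ran to completion.
    static int runAll(int count) throws InterruptedException {
        AtomicInteger finished = new AtomicInteger();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            threads.add(Thread.startVirtualThread(() -> {
                try {
                    Thread.sleep(Duration.ofMillis(10));
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                finished.incrementAndGet();
            }));
        }
        for (Thread t : threads) {
            t.join();
        }
        return finished.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runAll(10_000) + " virtual threads finished");
    }
}
```

Trying the same thing with 10,000 platform threads would require 10,000 kernel threads, which is the cost the M:N model is meant to avoid.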

Create a Java virtual thread

New thread-related APIs

Thread.ofVirtual() and Thread.ofPlatform() are new APIs for creating virtual threads and platform threads:

// Print the thread ID (works for both virtual and platform threads); Thread.getId() is deprecated as of JDK 19

Runnable runnable = () -> System.out.println(Thread.currentThread().threadId());

// Create a virtual thread

Thread testVT = Thread.ofVirtual().name("testVT").unstarted(runnable);

testVT.start();

// Create a platform thread

Thread testPT = Thread.ofPlatform().name("testPT").unstarted(runnable);

testPT.start();

Use Thread.startVirtualThread(Runnable) to create and start a virtual thread in one step:

// Print the thread ID (works for both virtual and platform threads)

Runnable runnable = () -> System.out.println(Thread.currentThread().threadId());

Thread thread = Thread.startVirtualThread(runnable);

Thread.isVirtual() reports whether a thread is a virtual thread:

// Print whether the current thread is a virtual thread

Runnable runnable = () -> System.out.println(Thread.currentThread().isVirtual());

Thread thread = Thread.startVirtualThread(runnable);

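Thread.join and Thread.sleep wait for a virtual thread to finish and put a virtual thread to sleep:

// Thread.sleep returns nothing and throws a checked exception, so it must be wrapped inside the Runnable

Runnable runnable = () -> { try { Thread.sleep(10); } catch (InterruptedException e) { throw new RuntimeException(e); } };

Thread thread = Thread.startVirtualThread(runnable);

// Wait for the virtual thread to finish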

thread.join();

Executors.newVirtualThreadPerTaskExecutor() creates an ExecutorService that starts a new virtual thread for each task:

try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {

executor.submit(() -> System.out.println("hello"));

}

This makes it straightforward to migrate existing code that uses thread pools and ExecutorService.
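As a rough illustration of that migration path, the sketch below runs the same ExecutorService-based method first on a fixed pool and then on a virtual-thread-per-task executor. The class ExecutorMigration and the method sumOfSquares are hypothetical names, and the code assumes JDK 21 or later (or JDK 19 with --enable-preview).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ExecutorMigration {

    // Code written against ExecutorService does not care which kind of thread runs the tasks.
    static int sumOfSquares(ExecutorService executor, int n)
            throws InterruptedException, ExecutionException {
        List<Future<Integer>> futures = new ArrayList<>();
        for (int i = 1; i <= n; i++) {
            final int value = i;
            futures.add(executor.submit(() -> value * value));
        }
        int total = 0;
        for (Future<Integer> future : futures) {
            total += future.get();
        }
        return total;
    }

    public static void main(String[] args) throws Exception {
        // Before: a classic platform-thread pool
        try (ExecutorService pooled = Executors.newFixedThreadPool(4)) {
            System.out.println(sumOfSquares(pooled, 10)); // 385
        }
        // After: one virtual thread per task; only the factory call changes
        try (ExecutorService virtual = Executors.newVirtualThreadPerTaskExecutor()) {
            System.out.println(sumOfSquares(virtual, 10)); // 385
        }
    }
}
```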

Note:

Because virtual threads are a preview feature in Java 19, the code in this article must be compiled and run as follows:

- compile the program with javac --release 19 --enable-preview Main.java and run it with java --enable-preview Main;

- or run the program directly with java --source 19 --enable-preview Main.java.

Performance comparison between platform threads and virtual threads

Since virtual threads are meant to solve the problems of platform threads, let's test the performance of both directly.

The test is simple: run 10,000 tasks in parallel, each sleeping for one second, and compare the total execution time and the number of system threads used.

To monitor the number of system threads used by the test, write the following code:

ScheduledExecutorService scheduledExecutorService = Executors.newScheduledThreadPool(1);

scheduledExecutorService.scheduleAtFixedRate(() -> {

ThreadMXBean threadBean = ManagementFactory.getThreadMXBean();

ThreadInfo[] threadInfo = threadBean.dumpAllThreads(false, false);

System.out.println(threadInfo.length + " os thread");

}, 1, 1, TimeUnit.SECONDS);

The scheduled thread pool obtains and prints the number of system threads every second, which makes the thread count easy to observe.

public static void main(String[] args) {

// Record the number of system threads

ScheduledExecutorService scheduledExecutorService = Executors.newScheduledThreadPool(1);

scheduledExecutorService.scheduleAtFixedRate(() -> {

ThreadMXBean threadBean = ManagementFactory.getThreadMXBean();

ThreadInfo[] threadInfo = threadBean.dumpAllThreads(false, false);

System.out.println(threadInfo.length + " os thread");

}, 1, 1, TimeUnit.SECONDS);

long l = System.currentTimeMillis();

try(var executor = Executors.newCachedThreadPool()) {

IntStream.range(0, 10000).forEach(i -> {

executor.submit(() -> {

Thread.sleep(Duration.ofSeconds(1));

System.out.println(i);

return i;

});

});

}

System.out.printf("Elapsed: %d ms", System.currentTimeMillis() - l);

}

First we use Executors.newCachedThreadPool() to execute the 10,000 tasks. Because the maximum number of threads of newCachedThreadPool is Integer.MAX_VALUE, in theory at least several thousand system threads will be created.

The output is as follows (redundant output omitted):

//output

1

7142

3914 os thread

Exception in thread "main" java.lang.OutOfMemoryError: unable to create native thread: possibly out of memory or process/resource limits reached

at java.base/java.lang.Thread.start0(Native Method)

at java.base/java.lang.Thread.start(Thread.java:1560)

at java.base/java.lang.System$2.start(System.java:2526)

As the output shows, at most 3914 system threads were created. Creating further threads then failed with an exception, and the program terminated. Improving system performance with a huge number of system threads is unrealistic, because threads are expensive and resources are limited.

Now we use a fixed-size thread pool of 200 threads to avoid requesting too many system threads:

public static void main(String[] args) {

// Record the number of system threads

ScheduledExecutorService scheduledExecutorService = Executors.newScheduledThreadPool(1);

scheduledExecutorService.scheduleAtFixedRate(() -> {

ThreadMXBean threadBean = ManagementFactory.getThreadMXBean();

ThreadInfo[] threadInfo = threadBean.dumpAllThreads(false, false);

System.out.println(threadInfo.length + " os thread");

}, 1, 1, TimeUnit.SECONDS);

long l = System.currentTimeMillis();

try(var executor = Executors.newFixedThreadPool(200)) {

IntStream.range(0, 10000).forEach(i -> {

executor.submit(() -> {

Thread.sleep(Duration.ofSeconds(1));

System.out.println(i);

return i;

});

});

}

System.out.printf("Elapsed: %dms\n", System.currentTimeMillis() - l);

}

The output is as follows:

//output

1

9987

9998

207 os thread

Elapsed: 50436ms

With the fixed-size thread pool, the failure caused by creating too many system threads no longer occurs. The tasks complete normally, at most 207 system threads are created, and the run takes 50436 ms in total, which matches the expected 10,000 / 200 × 1 s = 50 s.

Let’s take a look at the results of using virtual threads:

public static void main(String[] args) {

ScheduledExecutorService scheduledExecutorService = Executors.newScheduledThreadPool(1);

scheduledExecutorService.scheduleAtFixedRate(() -> {

ThreadMXBean threadBean = ManagementFactory.getThreadMXBean();

ThreadInfo[] threadInfo = threadBean.dumpAllThreads(false, false);

System.out.println(threadInfo.length + " os thread");

}, 10, 10, TimeUnit.MILLISECONDS);

long l = System.currentTimeMillis();

try(var executor = Executors.newVirtualThreadPerTaskExecutor()) {

IntStream.range(0, 10000).forEach(i -> {

executor.submit(() -> {

Thread.sleep(Duration.ofSeconds(1));

System.out.println(i);

return i;

});

});

}

System.out.printf("Elapsed: %dms\n", System.currentTimeMillis() - l);

}

Apart from a shorter monitoring interval, the code using virtual threads differs from the fixed-size version in only one place: Executors.newFixedThreadPool(200) is replaced with Executors.newVirtualThreadPerTaskExecutor().

The output is as follows:

//output

1

9890

15 os thread

Elapsed: 1582ms

The output shows that the total execution time is 1582 ms and that at most 15 system threads are used. The conclusion is clear: for this workload, virtual threads finish much sooner than platform threads and use far fewer system threads.

If we replace the task in the test program with a one-second computation (for example, sorting a huge array) instead of a one-second sleep, increasing the number of virtual threads or platform threads far beyond the number of processor cores yields no significant performance gain. Virtual threads are not faster threads; they run code no faster than platform threads. Virtual threads exist to provide higher throughput, not speed (lower latency).

Using virtual threads can significantly improve program throughput if your application has the following two characteristics:

- The program has a high number of concurrent tasks.

- The workload is I/O-bound rather than CPU-bound.

Virtual threads help improve throughput for server-side applications, which typically handle many concurrent tasks that spend much of their time waiting on I/O.

Java vs Go

Comparison of usage

Go coroutines vs Java virtual threads

Define a say() method whose body loops five times, sleeping for 100 ms and then printing its argument, and run this method in a coroutine.

Go implementation:

package main

import (

"fmt"

"time"

)

func say(s string) {

for i := 0; i < 5; i++ {

time.Sleep(100 * time.Millisecond)

fmt.Println(s)

}

}

func main() {

go say("world")

say("hello")

}

Java implementation:

public final class VirtualThreads {

static void say(String s) {

try {

for (int i = 0; i < 5; i++) {

Thread.sleep(Duration.ofMillis(100));

System.out.println(s);

}

} catch (InterruptedException e) {

throw new RuntimeException(e);

}

}

public static void main(String[] args) throws InterruptedException {

var worldThread = Thread.startVirtualThread(

() -> say("world")

);

say("hello");

// Wait for the virtual thread to finish

worldThread.join();

}

}

As you can see, the code in the two languages is very similar. Overall, the Java virtual thread version is slightly more verbose, while Go creates a coroutine with a single keyword.

Go channel vs Java blocking queue

In Go, coroutines and channels go hand in hand. The following example uses coroutines to compute the sum of the elements of an array (divide and conquer):

Go implementation:

package main

import "fmt"

func sum(s []int, c chan int) {

sum := 0

for _, v := range s {

sum += v

}

c <- sum // send sum to c

}

func main() {

s := []int{7, 2, 8, -9, 4, 0}

c := make(chan int)

go sum(s[:len(s)/2], c)

go sum(s[len(s)/2:], c)

x, y := <-c, <-c // receive from c

fmt.Println(x, y, x+y)

}

Java implementation:

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.BlockingQueue;

import java.util.concurrent.Executors;

public class main4 {

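// A Runnable lambda cannot throw checked exceptions, so InterruptedException is handled here

static void sum(int[] s, int start, int end, BlockingQueue<Integer> queue) {

int sum = 0;

for (int i = start; i < end; i++) {

sum += s[i];

}

try {

queue.put(sum);

} catch (InterruptedException e) {

throw new RuntimeException(e);

}

}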

public static void main(String[] args) throws InterruptedException {

int[] s = {7, 2, 8, -9, 4, 0};

var queue = new ArrayBlockingQueue<Integer>(1);

Thread.startVirtualThread(() -> {

sum(s, 0, s.length / 2, queue);

});

Thread.startVirtualThread(() -> {

sum(s, s.length / 2, s.length, queue);

});

int x = queue.take();

int y = queue.take();

System.out.printf("%d %d %d\n", x, y, x + y);

}

}

Java has no array slicing, so the array plus start and end indices are used instead. Java has no channels either, but a BlockingQueue, which behaves similarly, can serve the same purpose.
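The Go example uses an unbuffered channel (make(chan int)). If that rendezvous behavior matters, java.util.concurrent.SynchronousQueue is arguably a closer analogue than a one-element ArrayBlockingQueue, because it has no capacity and put() waits until another thread calls take(). The sketch below is one possible variant under that assumption. The names ChannelSum, sumHalves, partialSum, and send are made up for illustration, and JDK 21 or later is assumed.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.SynchronousQueue;

public class ChannelSum {

    static int partialSum(int[] s, int start, int end) {
        int sum = 0;
        for (int i = start; i < end; i++) {
            sum += s[i];
        }
        return sum;
    }

    // Hands a value to the receiver; blocks until the receiver takes it.
    static void send(BlockingQueue<Integer> channel, int value) {
        try {
            channel.put(value);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Returns {x, y, x + y}; which half arrives first is not deterministic.
    static int[] sumHalves(int[] s) throws InterruptedException {
        BlockingQueue<Integer> channel = new SynchronousQueue<>();
        Thread first = Thread.startVirtualThread(
                () -> send(channel, partialSum(s, 0, s.length / 2)));
        Thread second = Thread.startVirtualThread(
                () -> send(channel, partialSum(s, s.length / 2, s.length)));
        int x = channel.take();
        int y = channel.take();
        first.join();
        second.join();
        return new int[] {x, y, x + y};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] result = sumHalves(new int[] {7, 2, 8, -9, 4, 0});
        System.out.printf("%d %d %d%n", result[0], result[1], result[2]);
    }
}
```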

Comparison of coroutine implementation principles

Go GMP model

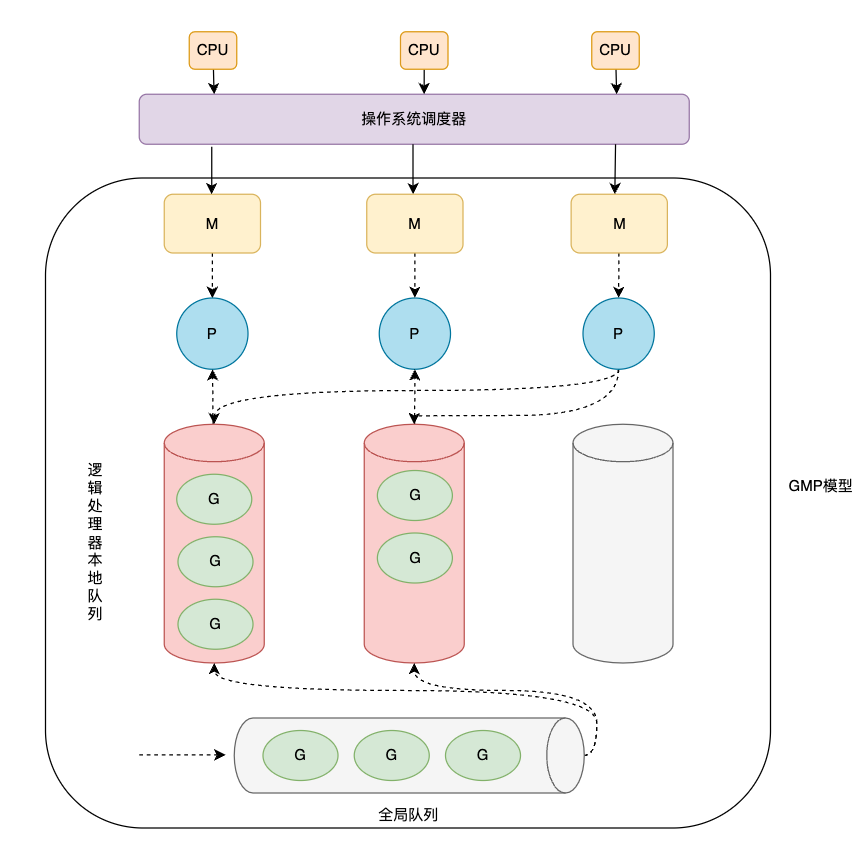

Go uses a two-level threading model in which coroutines and kernel threads are mapped M:N, just like Java virtual threads. A goroutine is ultimately executed by an OS thread, but an intermediary is needed to provide the execution context. This is the GMP model.

G: goroutine. Similar to a process control block, it stores the stack, state, ID, function, and other information. A G can be scheduled only when it is bound to a P.

M: machine, a system thread. It can schedule Gs only after binding to a valid P.

P: logical processor, which holds the queues of Gs. To a G, P is the CPU core; to an M, P is the context.

sched: the scheduler, which stores the GRQ (global run queue), the idle M queue, the idle P queue, locks, and other information.

Run queues

The Go scheduler has two different run queues:

- GRQ, the global run queue, holds Gs that have not yet been assigned to a P. (Before Go 1.1 there was only the GRQ; the LRQ was added to reduce the lock waiting caused by locking the global queue.)

- LRQ, the local run queue. Each P has one LRQ, which manages the Gs assigned to that P. When the LRQ has no G left to execute, Gs are taken from the GRQ.

Hand-off mechanism

When a G performs a blocking operation, GMP keeps the blocked M from holding up the other Gs in the LRQ by scheduling an idle M to run them:

- G1 runs on M1, and P's LRQ holds 3 other Gs;

- G1 makes a synchronous call, blocking M1;

- The scheduler detaches M1 from P. M1 now runs only G1 and has no P;

- P is bound to an idle M2, which picks other Gs from the LRQ to run;

- G1 finishes the blocking operation and is moved back to the LRQ. M1 is placed in the idle queue for later use.

Work-stealing mechanism

To make the most of the hardware, GMP uses work stealing so that an idle M executes other Gs that are waiting to run:

- There are two Ps, P1 and P2.

- When all Gs of P1 have been executed and its LRQ is empty, P1 starts looking for work.

- In the first case, P1 gets a G from the GRQ.

- In the second case, P1 gets no G from the GRQ, so it steals a G from P2's LRQ.

The hand-off mechanism keeps a blocked M from stalling other Gs, and work stealing keeps an M from sitting idle.
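The lookup order described above (own LRQ, then GRQ, then another P's LRQ) can be sketched as a toy model. This is written in Java to stay consistent with the rest of the article and is not the Go runtime's actual algorithm, which has more steps and steals several Gs at a time. All names here (RunQueueSketch, P, findRunnable, demo) are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

public class RunQueueSketch {

    /** A logical processor with its own local run queue (LRQ). Gs are just names here. */
    static final class P {
        final Deque<String> lrq = new ArrayDeque<>();
    }

    /** Picks the next G for p: its own LRQ first, then the GRQ, then another P's LRQ. */
    static String findRunnable(P p, Deque<String> grq, List<P> allPs) {
        if (!p.lrq.isEmpty()) {
            return p.lrq.pollFirst();
        }
        if (!grq.isEmpty()) {
            return grq.pollFirst();
        }
        for (P victim : allPs) {
            if (victim != p && !victim.lrq.isEmpty()) {
                // Toy simplification: steal a single G from the end of the victim's queue
                return victim.lrq.pollLast();
            }
        }
        return null; // nothing to run, so the M would go idle
    }

    /** Runs the scenario from the text: P1 drains its LRQ, then the GRQ, then steals from P2. */
    static List<String> demo() {
        P p1 = new P();
        P p2 = new P();
        Deque<String> grq = new ArrayDeque<>();
        p1.lrq.add("G1");
        p2.lrq.add("G2");
        p2.lrq.add("G3");
        grq.add("G4");
        List<P> all = List.of(p1, p2);

        List<String> picked = new ArrayList<>();
        for (int i = 0; i < 3; i++) {
            picked.add(findRunnable(p1, grq, all));
        }
        return picked;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // [G1, G4, G3]
    }
}
```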

Java Virtual Thread Scheduling

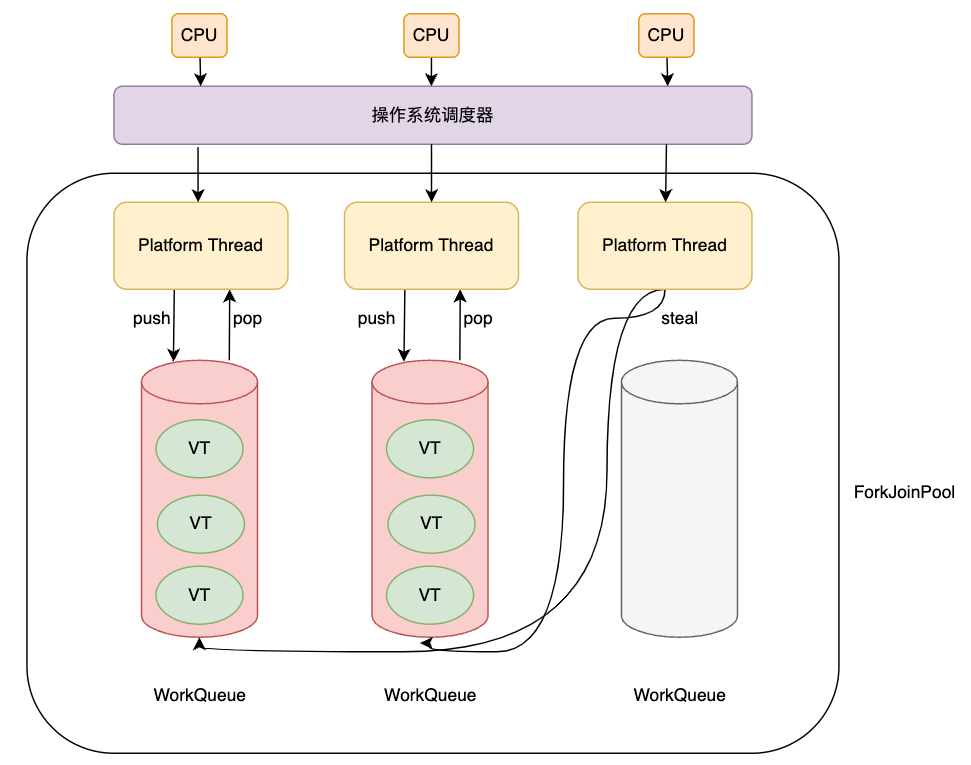

Platform threads are implemented on top of operating system threads, and the JDK relies on the operating system's thread scheduler to schedule them. For virtual threads, the JDK has its own scheduler. Instead of assigning virtual threads directly to system threads, the JDK's scheduler assigns virtual threads to platform threads (this is the M:N scheduling mentioned earlier), and the platform threads are in turn scheduled by the operating system as usual.

The JDK's virtual thread scheduler is a work-stealing ForkJoinPool. The scheduler's parallelism is the number of platform threads available for scheduling virtual threads. By default it equals the number of available CPU cores, and it can be adjusted with the system property jdk.virtualThreadScheduler.parallelism. Note that this ForkJoinPool is distinct from ForkJoinPool.commonPool(), which is used to implement parallel streams and operates in LIFO mode, whereas the virtual thread scheduler operates in FIFO mode.

ForkJoinPool works differently from a typical thread pool ExecutorService. A typical ExecutorService has a single waiting queue that stores its tasks, and its threads take tasks from that queue and process them. In a ForkJoinPool, each thread has its own waiting queue. When a task run by a thread spawns another task, the new task is added to that thread's queue. This is what happens when we run a parallel stream and a large task is split into two smaller tasks.

To prevent thread starvation, ForkJoinPool also implements another pattern called task stealing: when a thread has no more tasks in its own waiting queue, it can steal tasks from another thread's queue. This is similar to the work-stealing mechanism in the Go GMP model.
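The per-thread queues and task stealing are easiest to see with a plain fork/join task. The sketch below is a standard RecursiveTask sum and is independent of virtual threads. The names ForkJoinSum, SumTask, and parallelSum, the threshold of 1,000 elements, and the pool size of 4 are arbitrary choices for illustration.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

public class ForkJoinSum {

    static final class SumTask extends RecursiveTask<Long> {
        private static final int THRESHOLD = 1_000;
        private final int[] data;
        private final int from;
        private final int to;

        SumTask(int[] data, int from, int to) {
            this.data = data;
            this.from = from;
            this.to = to;
        }

        @Override
        protected Long compute() {
            if (to - from <= THRESHOLD) {
                long sum = 0;
                for (int i = from; i < to; i++) {
                    sum += data[i];
                }
                return sum;
            }
            int mid = (from + to) >>> 1;
            SumTask left = new SumTask(data, from, mid);
            SumTask right = new SumTask(data, mid, to);
            // fork() pushes the subtask onto the current worker's own queue,
            // where an idle worker may steal it
            left.fork();
            long rightSum = right.compute();
            return left.join() + rightSum;
        }
    }

    static long parallelSum(int[] data) {
        ForkJoinPool pool = new ForkJoinPool(4);
        try {
            return pool.invoke(new SumTask(data, 0, data.length));
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int[] data = new int[100_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(parallelSum(data)); // 5000050000
    }
}
```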

Execution of virtual threads

Typically, when a virtual thread performs I/O or another blocking operation in the JDK (such as BlockingQueue.take()), it is unmounted from the platform thread. When the blocking operation is ready to complete (for example, bytes have been received on a network connection), the scheduler mounts the virtual thread on a platform thread to resume execution.

The vast majority of blocking operations in the JDK unmount the virtual thread, freeing the platform thread to do other work. However, a few blocking operations do not unmount the virtual thread and therefore block the platform thread, because of limitations at the operating system level (for example, many file system operations) or at the JDK level (for example, Object.wait()). These blocking operations compensate for the blocked platform thread by temporarily increasing the number of platform threads, so the number of platform threads in the scheduler's ForkJoinPool may temporarily exceed the number of available CPU cores. The maximum number of platform threads available to the scheduler can be adjusted with the system property jdk.virtualThreadScheduler.maxPoolSize. This compensation mechanism is similar to the hand-off mechanism in the Go GMP model.

A virtual thread is pinned to the platform thread on which it runs, and cannot be unmounted during a blocking operation, in the following two cases:

- when it executes code inside a synchronized block or method;

- when it executes a native method or a foreign function.

Pinning does not affect the correctness of a program, but it may reduce the concurrency and throughput of the system. If a virtual thread performs a blocking operation such as I/O or BlockingQueue.take() while it is pinned, the platform thread running it is blocked for the duration of the operation. (If the virtual thread is not pinned, it is unmounted from the platform thread when it performs such a blocking operation.)

How virtual threads are unmounted

We create 5 unstarted virtual threads with a Stream. Each thread prints the current thread, sleeps for 10 milliseconds, and then prints the current thread again. We then start the virtual threads and call join() so that everything appears on the console:

public static void main(String[] args) throws Exception {

var threads = IntStream.range(0, 5).mapToObj(index -> Thread.ofVirtual().unstarted(() -> {

System.out.println(Thread.currentThread());

try {

Thread.sleep(10);

} catch (InterruptedException e) {

throw new RuntimeException(e);

}

System.out.println(Thread.currentThread());

})).toList();

threads.forEach(Thread::start);

for (Thread thread : threads) {

thread.join();

}

}

//output

src [main] ~/Downloads/jdk-19.jdk/Contents/Home/bin/java --enable-preview main7

VirtualThread[#23]/runnable@ForkJoinPool-1-worker-3

VirtualThread[#22]/runnable@ForkJoinPool-1-worker-2

VirtualThread[#21]/runnable@ForkJoinPool-1-worker-1

VirtualThread[#25]/runnable@ForkJoinPool-1-worker-5

VirtualThread[#24]/runnable@ForkJoinPool-1-worker-4

VirtualThread[#25]/runnable@ForkJoinPool-1-worker-3

VirtualThread[#24]/runnable@ForkJoinPool-1-worker-2

VirtualThread[#21]/runnable@ForkJoinPool-1-worker-4

VirtualThread[#22]/runnable@ForkJoinPool-1-worker-2

VirtualThread[#23]/runnable@ForkJoinPool-1-worker-3

From the console output we can see that VirtualThread[#21] first runs on worker 1 of the ForkJoinPool and, after returning from sleep, continues on worker 4.

Why does a virtual thread jump from one platform thread to another after sleeping?

Reading the source code of the sleep method, we find that it was rewritten in Java 19 and now contains a check for virtual threads:

public static void sleep(long millis) throws InterruptedException {

if (millis < 0) {

throw new IllegalArgumentException("timeout value is negative");

}

if (currentThread() instanceof VirtualThread vthread) {

long nanos = MILLISECONDS.toNanos(millis);

vthread.sleepNanos(nanos);

return;

}

if (ThreadSleepEvent.isTurnedOn()) {

ThreadSleepEvent event = new ThreadSleepEvent();

try {

event.time = MILLISECONDS.toNanos(millis);

event.begin();

sleep0(millis);

} finally {

event.commit();

}

} else {

sleep0(millis);

}

}

Tracing the code further, we find that when a virtual thread sleeps, the method actually called is Continuation.yield:

@ChangesCurrentThread

private boolean yieldContinuation() {

boolean notifyJvmti = notifyJvmtiEvents;

// unmount

if (notifyJvmti) notifyJvmtiUnmountBegin(false);

unmount();

try {

return Continuation.yield(VTHREAD_SCOPE);

} finally {

// re-mount

mount();

if (notifyJvmti) notifyJvmtiMountEnd(false);

}

}

In other words, Continuation.yield moves the stack of the current virtual thread from the platform thread's stack to the Java heap. The stack of another ready virtual thread is then copied from the heap onto the platform thread's stack so that it can continue executing. Blocking operations such as I/O or BlockingQueue.take() cause a virtual thread switch in the same way as sleep. Switching virtual threads still has a cost, but it is much lighter than a context switch between platform threads.

Other considerations

Virtual threads and asynchronous programming

Reactive programming solves the problem of platform threads blocking while they wait for other systems to respond. An asynchronous API does not block waiting for a response; instead, the result is delivered through a callback. When a response arrives, a thread from the thread pool, possibly a different one, is assigned to process it. As a result, handling a single asynchronous request may involve multiple threads.

Asynchronous programming can reduce the response latency of the system, but because of hardware limits the number of platform threads is still bounded, so system throughput remains a bottleneck. Another problem is that asynchronous programs execute across different threads, which makes them hard to debug and profile.

Virtual threads improve code quality (code becomes easier to write, debug, and profile) with only minor syntax changes, while keeping the main advantage of reactive programming: greatly improved system throughput.

Do not pool virtual threads

Because virtual threads are very lightweight and each virtual thread is intended to run only a single task during its lifetime, there is no need to pool virtual threads.
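One reason code pools threads is to cap how many tasks hit a scarce resource at once. Without a pool, a java.util.concurrent.Semaphore is one way to keep that cap. The sketch below assumes JDK 21 or later. LimitWithSemaphore and runTasks are hypothetical names, and the 5 ms sleep merely stands in for a call to the limited resource.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

public class LimitWithSemaphore {

    // Runs `tasks` virtual threads but lets at most `maxConcurrent` of them into the
    // guarded section at once. Returns the highest concurrency actually observed.
    static int runTasks(int tasks, int maxConcurrent) {
        Semaphore permits = new Semaphore(maxConcurrent);
        AtomicInteger inFlight = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();

        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    permits.acquire();
                    try {
                        int now = inFlight.incrementAndGet();
                        peak.accumulateAndGet(now, Math::max);
                        Thread.sleep(5); // stands in for a call to the limited resource
                    } finally {
                        inFlight.decrementAndGet();
                        permits.release();
                    }
                    return null;
                });
            }
        } // close() waits for all submitted tasks to finish

        return peak.get();
    }

    public static void main(String[] args) {
        System.out.println("peak concurrency: " + runTasks(1_000, 10));
    }
}
```

Each task still gets its own virtual thread; only entry to the guarded section is limited.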

ThreadLocal with virtual threads

public class main5 {

private static ThreadLocal<String> stringThreadLocal = new ThreadLocal<>();

public static void getThreadLocal(String val) {

stringThreadLocal.set(val);

System.out.println(stringThreadLocal.get());

}

public static void main(String[] args) throws InterruptedException {

Thread testVT1 = Thread.ofVirtual().name("testVT1").unstarted(() ->main5.getThreadLocal("testVT1 local var"));

Thread testVT2 = Thread.ofVirtual().name("testVT2").unstarted(() ->main5.getThreadLocal("testVT2 local var"));

testVT1.start();

testVT2.start();

System.out.println(stringThreadLocal.get());

stringThreadLocal.set("main local var");

System.out.println(stringThreadLocal.get());

testVT1.join();

testVT2.join();

}

}

//output

null

main local var

testVT1 local var

testVT2 local var

Virtual threads support ThreadLocal in the same way as platform threads. A platform thread cannot see the values set by a virtual thread, and a virtual thread cannot see the values set by a platform thread; the platform thread that carries a virtual thread is transparent to it. However, because an application can create millions of virtual threads, think twice before using ThreadLocal in them. With one million virtual threads that each set a thread-local value, there are one million copies of that value and of the data it references, which can put heavy pressure on memory.

Replacing synchronized with ReentrantLock

Because synchronized pins the virtual thread to its platform thread, a blocking operation inside it does not unmount the virtual thread, which hurts the throughput of the program. Such code should therefore use ReentrantLock instead of synchronized:

before:

public synchronized void m() {

try {

// ... access resource

} finally {

//

}

}

after:

private final ReentrantLock lock = new ReentrantLock();

public void m() {

lock.lock(); // blocks until the lock is acquired

try {

// ... access resource

} finally {

lock.unlock();

}

}
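For a complete, runnable version of the "after" pattern, here is a small sketch in which many virtual threads update a shared counter and perform a blocking call while holding a ReentrantLock. LockedCounter and runIncrements are made-up names, the 1 ms sleep stands in for real blocking work, and JDK 21 or later is assumed.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantLock;

public class LockedCounter {

    private final ReentrantLock lock = new ReentrantLock();
    private int count;

    void increment() throws InterruptedException {
        lock.lock();
        try {
            Thread.sleep(1); // a blocking call made while holding the lock
            count++;
        } finally {
            lock.unlock();
        }
    }

    int get() {
        lock.lock();
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }

    // Increments one counter from n virtual threads and returns the final value.
    static int runIncrements(int n) {
        LockedCounter counter = new LockedCounter();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < n; i++) {
                executor.submit(() -> {
                    counter.increment();
                    return null;
                });
            }
        } // close() waits for all tasks
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runIncrements(100)); // 100
    }
}
```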

How to migrate

- Replace thread pools directly with Executors.newVirtualThreadPerTaskExecutor(). If your project uses CompletableFuture, you can likewise replace the thread pool that executes its asynchronous tasks with Executors.newVirtualThreadPerTaskExecutor().

- Remove pooling. Virtual threads are very lightweight and do not need to be pooled.

- Change synchronized to ReentrantLock to reduce the pinning of virtual threads to platform threads.
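For the CompletableFuture case, the change can be as small as the executor argument. The following sketch assumes JDK 21 or later. CompletableFutureMigration, fetch, and combine are illustrative names, and fetch simply sleeps to imitate a blocking remote call.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class CompletableFutureMigration {

    // Imitates a blocking call to another system.
    static String fetch(String name) {
        try {
            Thread.sleep(50);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "result-of-" + name;
    }

    static String combine() {
        // Previously this might have been a fixed or cached thread pool
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            CompletableFuture<String> a = CompletableFuture.supplyAsync(() -> fetch("a"), executor);
            CompletableFuture<String> b = CompletableFuture.supplyAsync(() -> fetch("b"), executor);
            return a.thenCombine(b, (x, y) -> x + "," + y).join();
        }
    }

    public static void main(String[] args) {
        System.out.println(combine()); // result-of-a,result-of-b
    }
}
```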

Summary

This article covered the Java threading model and the usage, principles, and applicable scenarios of Java virtual threads. It also compared them with the popular Go coroutines and found similarities between the two implementations. I hope it helps you understand Java virtual threads. Virtual threads are a preview feature in Java 19 and are likely to become an official feature in Java 21, so there is a lot to look forward to. My knowledge is limited, so corrections and criticism are welcome.

References

https://openjdk.org/jeps/425

https://howtodoinjava.com/java/multi-threading/virtual-threads/

https://mccue.dev/pages/5-2-22-go-concurrency-in-java

Official account: DailyHappy, a back-end coder and maker of "dark cuisine".
