NVIDIA, Arm, and Intel co-authored a white paper, “FP8 Formats for Deep Learning”, which describes an 8-bit floating-point (FP8) specification. It provides a common interchange format intended to accelerate AI development by optimizing memory usage, and it is suitable for both AI training and inference.

There are two variants of this FP8 specification, E5M2 and E4M3.

Compatibility and flexibility

FP8 minimizes deviations from the existing IEEE 754 floating-point formats. This strikes a good balance between hardware and software: existing implementations can be reused, adoption is accelerated, and developer productivity improves.

E5M2 uses 5 bits for the exponent and 2 bits for the mantissa (plus a sign bit) and is a truncated IEEE FP16 format. E4M3 uses a 4-bit exponent and a 3-bit mantissa (plus a sign bit). It is intended for cases where more precision is needed at the expense of some numerical range, and it makes some adjustments to the IEEE conventions to extend the range it can represent.
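To make the two layouts concrete, here is a minimal Python sketch that decodes a single FP8 byte under either format. It assumes the exponent biases (15 for E5M2, 7 for E4M3) and special-value rules described in the white paper. E5M2 keeps IEEE 754's infinities and NaNs. E4M3 has no infinities and reserves only the all-ones pattern S.1111.111 for NaN, which is the adjustment that frees up extra range. The names `decode_fp8` and `max_finite` are hypothetical helpers for illustration, not part of any library.

```python
import math


def decode_fp8(byte: int, fmt: str) -> float:
    """Decode one FP8 byte (0..255) as E5M2 or E4M3 (assumed white-paper rules)."""
    if fmt == "E5M2":
        exp_bits, man_bits, bias = 5, 2, 15
    elif fmt == "E4M3":
        exp_bits, man_bits, bias = 4, 3, 7
    else:
        raise ValueError(f"unknown format {fmt!r}")

    sign = -1.0 if byte & 0x80 else 1.0
    exp = (byte >> man_bits) & ((1 << exp_bits) - 1)
    man = byte & ((1 << man_bits) - 1)
    exp_all_ones = (1 << exp_bits) - 1

    if fmt == "E5M2" and exp == exp_all_ones:
        # IEEE-style: all-ones exponent is Inf (mantissa 0) or NaN (mantissa != 0)
        return sign * math.inf if man == 0 else math.nan
    if fmt == "E4M3" and exp == exp_all_ones and man == (1 << man_bits) - 1:
        # E4M3 has no infinities; only S.1111.111 encodes NaN
        return math.nan
    if exp == 0:
        # Subnormal: no implicit leading 1, exponent fixed at 1 - bias
        return sign * (man / (1 << man_bits)) * 2.0 ** (1 - bias)
    # Normal: implicit leading 1
    return sign * (1 + man / (1 << man_bits)) * 2.0 ** (exp - bias)


def max_finite(fmt: str) -> float:
    """Largest finite value found by enumerating all 256 encodings."""
    values = (decode_fp8(b, fmt) for b in range(256))
    return max(v for v in values if math.isfinite(v))


for fmt in ("E4M3", "E5M2"):
    print(fmt, "max finite:", max_finite(fmt))
```

Enumerating all 256 encodings this way gives a largest finite value of 448 for E4M3 versus 57344 for E5M2, which illustrates the range-for-precision trade-off between the two variants.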

Because it uses only eight bits, the new format saves computation cycles. It can be used for both AI training and inference without recasting between precisions. Furthermore, by minimizing deviations from existing floating-point formats, it leaves the greatest latitude for future AI innovation while still adhering to current conventions.
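One practical consequence of E5M2 being a truncated FP16 format is that it has the same exponent width and bias as IEEE FP16. An FP16 value can therefore be converted to E5M2 by keeping the top 8 bits of its encoding and rounding away the low 8. It can be converted back by appending 8 zero bits. The sketch below assumes round-half-to-even and starts from values already representable in FP16, since going from a wider type through FP16 first can double-round. The helper names are hypothetical and this is not presented as any library's API.

```python
import math
import struct


def fp16_bits(x: float) -> int:
    """Return the IEEE 754 binary16 bit pattern of x (struct rounds to nearest-even)."""
    return struct.unpack("<H", struct.pack("<e", x))[0]


def fp16_to_e5m2(h: int) -> int:
    """Round a binary16 bit pattern to E5M2 by keeping its top 8 bits.

    The dropped low byte is rounded half-to-even. A carry out of the mantissa
    bumps the exponent, which is the correct result (and can overflow to Inf).
    """
    top, low = h >> 8, h & 0xFF
    if ((h >> 10) & 0x1F) == 0x1F:
        # Inf or NaN: Inf passes through; force a mantissa bit so NaN stays NaN
        return top | (0x02 if h & 0x3FF else 0)
    if low > 0x80 or (low == 0x80 and top & 1):
        top += 1
    return top


def e5m2_to_float(b: int) -> float:
    """Widen E5M2 back to float: appending 8 zero bits gives a valid binary16."""
    return struct.unpack("<e", struct.pack("<H", b << 8))[0]


def round_to_e5m2(x: float) -> float:
    """Round an FP16-representable value to the nearest E5M2 value."""
    return e5m2_to_float(fp16_to_e5m2(fp16_bits(x)))


for v in (1.0, 1.125, 1.375, -2.5, 57344.0, 65504.0):
    print(v, "->", round_to_e5m2(v))
```

For example, 1.375 lies halfway between the neighboring E5M2 values 1.25 and 1.5 and rounds to the one with the even mantissa, 1.5. The FP16 maximum, 65504, overflows to infinity because the largest finite E5M2 value is 57344.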

High-accuracy training and inference

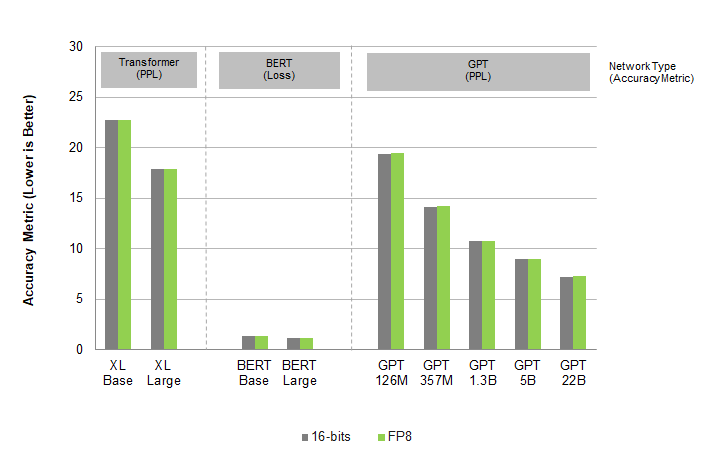

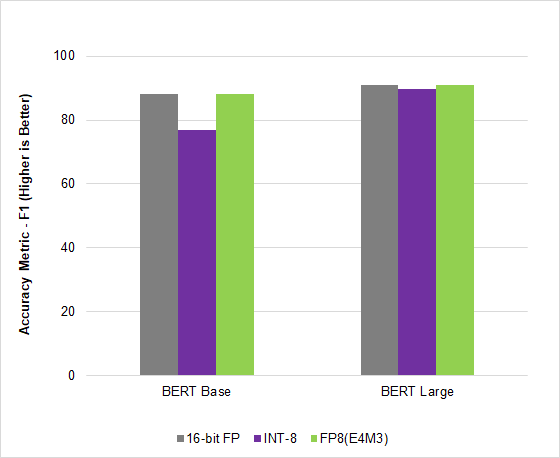

Testing of the FP8 format shows accuracy comparable to 16-bit accuracy across a wide range of use cases, architectures, and networks. Results for Transformer, Computer Vision, and GAN networks all show that FP8 training accuracy is similar to 16-bit accuracy, while providing significant speedup.

The following figure shows the language model AI training test:

The following figure shows the language model AI inference test:

In MLPerf Inference v2.1, a benchmark widely used across the AI industry, NVIDIA Hopper used this new FP8 format to deliver a 4.5x speedup on the BERT high-accuracy model, increasing throughput without compromising accuracy.

Standardization

NVIDIA, Arm, and Intel published this specification in an open, license-free format to encourage adoption by the AI industry. Additionally, the proposal has been submitted to IEEE.

With this interchangeable format that preserves accuracy, AI models can run consistently and efficiently across hardware platforms, helping to advance AI technology.
