Until now, AI inference engines have largely been tied to the specific hardware for which they were designed. This hardware lock-in forces developers to build separate software for each hardware platform, and it is likely to slow the pace of innovation across the industry.

Meta has open sourced a new Python framework called AITemplate (AIT) that aims to change this, allowing developers to use GPUs from different manufacturers without sacrificing speed and performance.

AITemplate provides high-speed inference and initially supports both Nvidia TensorCore and AMD MatrixCore inference hardware. Its source code is released under the Apache 2.0 license.

Ajit Mathews, Director of Engineering at Meta, said: “The current version of AIT primarily supports Nvidia and AMD GPUs, but the platform is extensible to support Intel GPUs in the future if needed. We have now open sourced the AIT code, and we welcome any interested chip suppliers to contribute to it.”

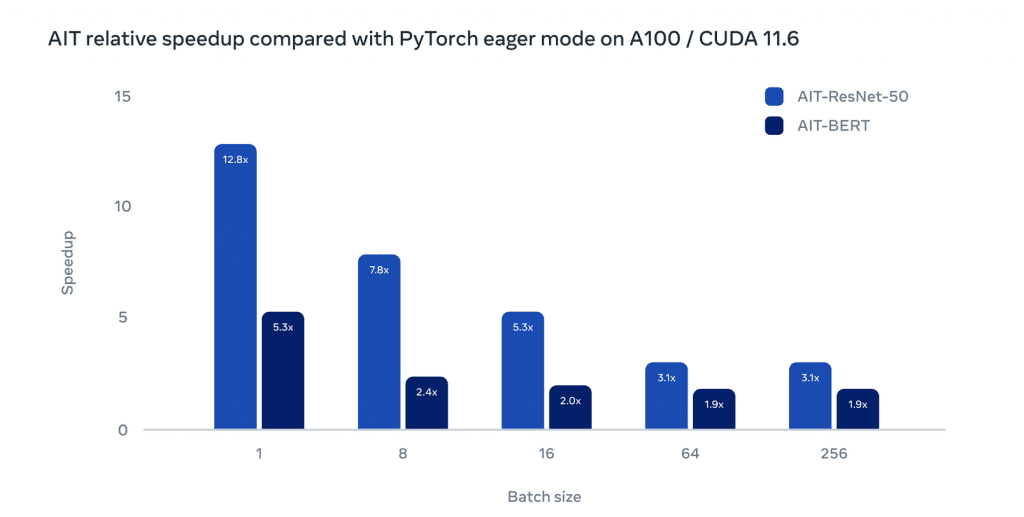

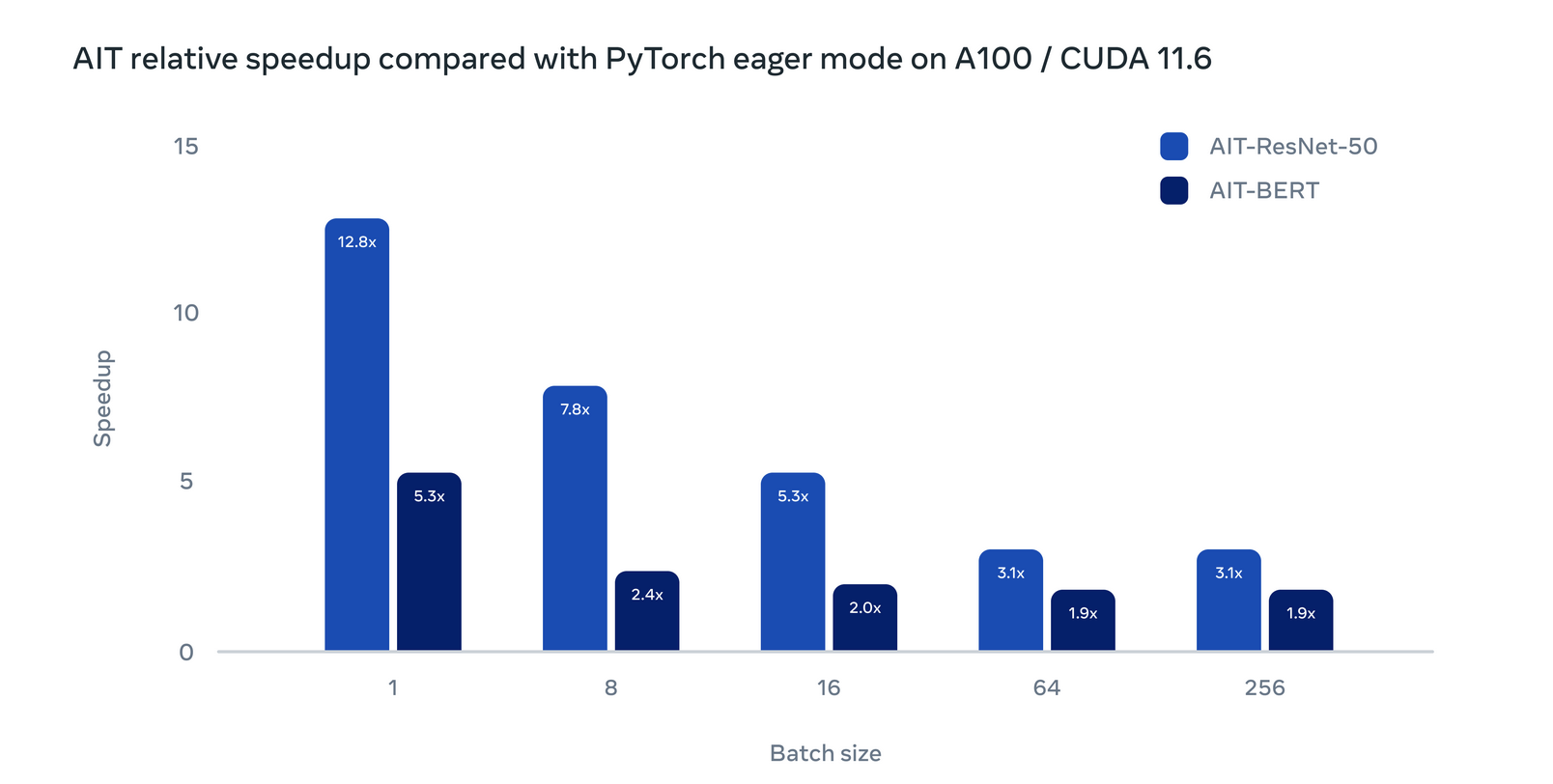

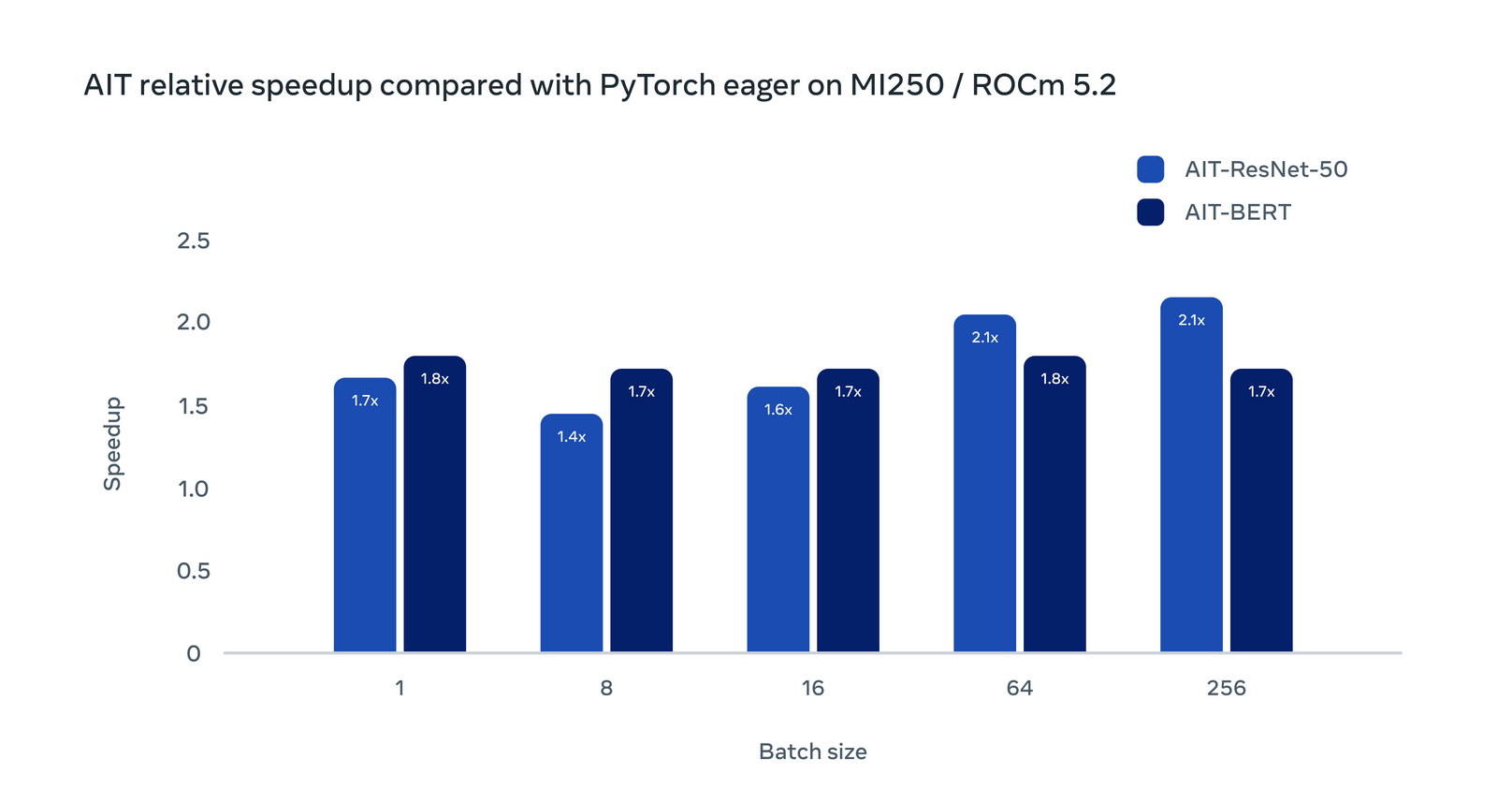

Compared with eager mode in PyTorch, Meta reports that AIT achieves up to a 12x performance improvement on the Nvidia A100 GPU and up to a 4x improvement on the AMD MI250 GPU.

The Meta-led AIT is conceptually similar to SYCL, a C++-based heterogeneous parallel programming framework that can be used to accelerate high-performance computing, machine learning, embedded computing, and a large number of desktop applications across a fairly broad range of processor architectures. The two differ in what they implement, however: SYCL sits closer to the GPU programming layer, while AITemplate focuses on high-performance TensorCore/MatrixCore AI primitives.

Meta’s workloads are constantly evolving. To meet these changing needs, Meta needs open, high-performance solutions, and it wants the upper layers of its technology stack to be hardware-agnostic. Today, AIT does this by supporting AMD and Nvidia GPUs.
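
To make the idea of a hardware-agnostic upper layer concrete, here is a minimal Python sketch. It is purely illustrative and does not use AITemplate's actual API: the names `Op`, `BACKENDS`, and `lower`, as well as the kernel-name strings, are hypothetical. The point is only that the model is described once, and the hardware target is chosen at the last step.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical names throughout: this is NOT AITemplate's API.


@dataclass(frozen=True)
class Op:
    """One hardware-neutral operation in a model graph."""
    name: str       # e.g. "gemm" or "relu"
    inputs: tuple   # names of the input tensors


# Each backend maps a neutral op to a (made-up) vendor-specific kernel name.
BACKENDS: Dict[str, Callable[[Op], str]] = {
    "cuda": lambda op: f"tensorcore_{op.name}",   # Nvidia-style target
    "rocm": lambda op: f"matrixcore_{op.name}",   # AMD-style target
}


def lower(graph: List[Op], target: str) -> List[str]:
    """Translate a neutral graph into kernel names for one target."""
    if target not in BACKENDS:
        raise ValueError(f"unsupported target: {target}")
    emit = BACKENDS[target]
    return [emit(op) for op in graph]


# The model is described once, independent of the hardware.
graph = [Op("gemm", ("x", "w")), Op("relu", ("gemm_out",))]

print(lower(graph, "cuda"))
print(lower(graph, "rocm"))
```

In a design like this, supporting another vendor would mean registering one more entry in `BACKENDS` while leaving the model description untouched.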

Ajit Mathews also said: “Many of our current and future inference workloads have the opportunity to benefit from AIT, and we believe that AIT has the potential to be widely adopted by major vendors as the most powerful unified inference engine.”
