Author: Xieyang

Introduction

This article explains why RocketMQ needs retry and fallback mechanisms, the conditions under which producers and consumers trigger retries and how those retries behave, and best practices for using RocketMQ's retry mechanism sensibly to build resilient, highly available systems.

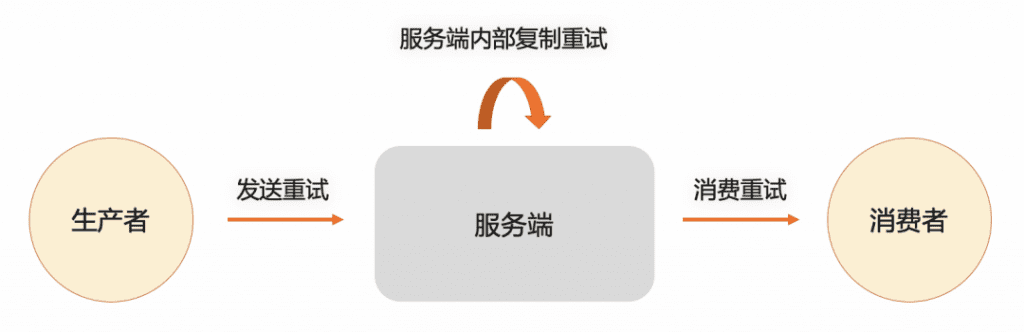

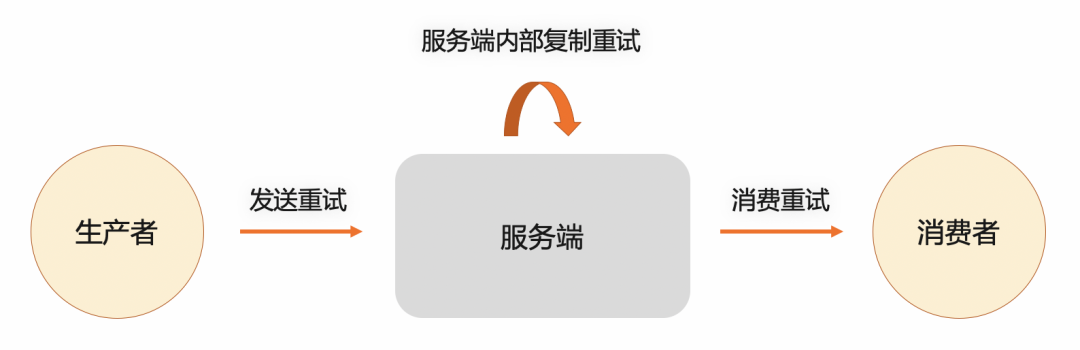

RocketMQ’s retry mechanism has three parts: producer send retry, server-side retry when internal data replication hits unexpected problems, and consumer consumption retry. This article covers only the two user-facing parts: producer send retry and consumer consumption retry.

Producer send retry

When a RocketMQ producer sends a message to the server, the call may fail because of network problems, service exceptions, and so on. What should be done in that case, and how can message loss be minimized?

1. Number of producer retries

The RocketMQ client has built-in request retry logic and lets you configure the maximum number of send retries at initialization (the default is 2). If a send fails, the client resends the message according to this setting. Retrying ends when the message is sent successfully or when the maximum number of retries is reached, in which case an error response is returned after the last failure. Both synchronous and asynchronous sending support send retry.

- Synchronous sending: The calling thread will be blocked until a retry succeeds or the final retry fails (returns an error code or throws an exception).

- Asynchronous sending: The calling thread will not be blocked, but the call result will be returned in the form of a callback, as an exception event or a success event.
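The bounded retry behavior described above can be sketched in plain Java. This is only an illustration of "one initial attempt plus up to N retries"; `BoundedRetry` and `callWithRetry` are hypothetical names and are not part of the RocketMQ client.

```java
import java.util.function.Supplier;

/** Hypothetical helper that mimics a bounded retry loop; not RocketMQ client code. */
final class BoundedRetry {
    private BoundedRetry() {}

    /**
     * Runs the call once, then retries up to maxRetries more times.
     * Returns the first successful result, or rethrows the last failure
     * once every attempt has failed.
     */
    static <T> T callWithRetry(Supplier<T> call, int maxRetries) {
        if (maxRetries < 0) {
            throw new IllegalArgumentException("maxRetries must be >= 0");
        }
        RuntimeException last = null;
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            try {
                return call.get();
            } catch (RuntimeException e) {
                last = e; // remember the failure; loop again if attempts remain
            }
        }
        throw last; // all 1 + maxRetries attempts failed
    }
}
```

With `maxRetries` set to 2, a call that fails twice and then succeeds returns normally on the third attempt, while a call that always fails surfaces the error after three attempts.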

2. Producer retry interval

Before discussing the producer retry interval, let’s first look at the concept of flow control. Flow control means that when the server is under too much pressure and lacks capacity, it limits clients' sending and receiving of messages. It is a self-protection design of the server. RocketMQ adopts different retry strategies depending on whether flow control has been triggered:

Non-flow-control error scenarios: when a retry is triggered by any other condition, the client retries immediately, with no waiting interval.

Flow control error scenarios: the client delays retries according to a preset exponential backoff strategy.

- Why introduce backoff and random jitter?

If the fault is caused by overload flow control, retries add load to the server and make the situation worse, so the client waits between attempts when it encounters flow control. The wait after each attempt grows exponentially, and because an exponential function grows quickly, the backoff can become long, which is why it is capped by an upper limit. Random jitter spreads out the retries of many clients so that they do not all arrive at the same moment. The exponential backoff algorithm controls retry behavior with the following parameters; see connection-backoff.md for more information.

- INITIAL_BACKOFF: how long to wait after the first failure before retrying; default: 1 second;

- MULTIPLIER: the exponential backoff factor, that is, the factor by which the backoff is multiplied after each failed retry; default: 1.6;

- JITTER: the random jitter factor; default: 0.2;

- MAX_BACKOFF: the upper limit of the waiting interval; default: 120 seconds;

- MIN_CONNECT_TIMEOUT: the minimum time allowed for a single attempt to complete; default: 20 seconds.

    ConnectWithBackoff()
      current_backoff = INITIAL_BACKOFF
      current_deadline = now() + INITIAL_BACKOFF
      while (TryConnect(Max(current_deadline, now() + MIN_CONNECT_TIMEOUT)) != SUCCESS)
        SleepUntil(current_deadline)
        current_backoff = Min(current_backoff * MULTIPLIER, MAX_BACKOFF)
        current_deadline = now() + current_backoff + UniformRandom(-JITTER * current_backoff, JITTER * current_backoff)
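To make the arithmetic of the pseudocode concrete, here is a minimal Java sketch of the two calculations it relies on: growing the backoff by MULTIPLIER up to MAX_BACKOFF, and adding uniform random jitter. The class and method names are hypothetical, the constants are the defaults listed above, and this is not the RocketMQ client's actual implementation.

```java
import java.util.concurrent.ThreadLocalRandom;

/** Hypothetical sketch of the backoff arithmetic; constants are the defaults listed in the text. */
final class ExponentialBackoff {
    static final long INITIAL_BACKOFF_MS = 1_000;
    static final double MULTIPLIER = 1.6;
    static final double JITTER = 0.2;
    static final long MAX_BACKOFF_MS = 120_000;

    private ExponentialBackoff() {}

    /** Next base backoff: multiply by MULTIPLIER, capped at MAX_BACKOFF_MS. */
    static long nextBackoffMs(long currentBackoffMs) {
        return Math.min(Math.round(currentBackoffMs * MULTIPLIER), MAX_BACKOFF_MS);
    }

    /** Adds uniform random jitter in the range [-JITTER * backoff, +JITTER * backoff]. */
    static long withJitterMs(long backoffMs) {
        double spread = JITTER * backoffMs;
        if (spread <= 0) {
            return backoffMs; // nothing to randomize
        }
        double offset = ThreadLocalRandom.current().nextDouble(-spread, spread);
        return backoffMs + Math.round(offset);
    }
}
```

Starting from `INITIAL_BACKOFF_MS`, repeated calls to `nextBackoffMs` give 1000 ms, 1600 ms, 2560 ms and so on until the value reaches the 120-second cap. The actual wait before each retry would be `withJitterMs` applied to the current base value.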

Special note: for transactional messages, only transparent retries are performed; failures such as network timeouts or exceptions are not retried.

3. Side effects of retrying

Constant retrying looks attractive, but it has two main side effects: duplicate messages and increased pressure on the server.

Remote calls are inherently uncertain. When a request times out and triggers a send retry, the client cannot know whether the server already processed the original request, so a client-side send retry may cause the consumer to consume the same message more than once. Consumers should therefore process messages idempotently, for example by deduplicating on a business primary key.

More retries also increase the processing pressure on the server.
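Idempotent processing keyed on a business primary key can be sketched as follows. This is a simplified illustration: `IdempotentHandler` is a hypothetical class, and the in-memory set stands in for a durable store (for example a database table with a unique key), which a real system would need so that deduplication survives restarts.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical deduplicating handler; the in-memory set stands in for a durable store. */
final class IdempotentHandler {
    private final Set<String> processedKeys = ConcurrentHashMap.newKeySet();

    /**
     * Runs the business logic only the first time a business key is seen.
     * Returns true if the logic ran, false if the message was a duplicate.
     */
    boolean handleOnce(String businessKey, Runnable businessLogic) {
        if (!processedKeys.add(businessKey)) {
            return false; // duplicate delivery: skip the side effect, still report success upstream
        }
        try {
            businessLogic.run();
            return true;
        } catch (RuntimeException e) {
            processedKeys.remove(businessKey); // processing failed, so allow a later retry
            throw e;
        }
    }
}
```

A consumer would call `handleOnce` with something like an order ID and return consumption success in both the "ran" and "duplicate" cases.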

4. Best practices for users

1) Set the send timeout and the maximum number of send attempts sensibly

The maximum number of send attempts is configured in ClientConfiguration when the client is initialized. In latency-sensitive call scenarios, retries can block the message sending request link and make the business request as a whole slow or time out. Evaluate the timeout of each call and the maximum number of retries carefully so that retries do not inflate the latency of the whole link.

2) How to ensure that the sent message is not lost

Because distributed environments are complex (for example, the network may be unreachable), the retry mechanism of the RocketMQ client cannot guarantee that a message is sent successfully. The business side needs to catch exceptions and add its own redundancy protection. There are two common solutions:

- Return a business processing failure to the caller;

- Store failed messages in a database and have a background thread resend them periodically, to ensure eventual consistency of the business logic.
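The second solution can be sketched as a small store of failed messages plus a resend pass that a background thread runs periodically. Everything here is hypothetical: `FailedMessageStore` is an illustrative name, the in-memory queue stands in for a database table, and the `sender` predicate stands in for a real producer send call that returns whether the send succeeded.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.function.Predicate;

/** Hypothetical store for messages whose send failed; the queue stands in for a database table. */
final class FailedMessageStore {
    private final Queue<String> pending = new ConcurrentLinkedQueue<>();

    /** Records a message body after the producer's built-in retries have failed. */
    void save(String messageBody) {
        pending.add(messageBody);
    }

    int size() {
        return pending.size();
    }

    /**
     * One resend pass: tries each stored message once and keeps the ones that fail again.
     * Returns the number of messages that were resent successfully.
     */
    int retryPending(Predicate<String> sender) {
        int resent = 0;
        int toTry = pending.size();
        for (int i = 0; i < toTry; i++) {
            String body = pending.poll();
            if (body == null) {
                break; // another thread drained the queue
            }
            if (sender.test(body)) {
                resent++;
            } else {
                pending.add(body); // still failing: keep it for the next pass
            }
        }
        return resent;
    }
}
```

A background thread could run the pass on a schedule, for example with `ScheduledExecutorService.scheduleWithFixedDelay`. Because a resend can duplicate a message that actually reached the server, this approach relies on consumers being idempotent.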

3) Watch for flow control exceptions that cause retries to fail

The root cause of flow control is insufficient system capacity. If flow control is triggered unexpectedly and the client’s built-in retries also fail, scale out the server and, as an emergency measure, temporarily redirect the requests to another system.

4) How do earlier client versions retry sending with the failure delay mechanism?

For RocketMQ 4.x and 3.x clients, the failure delay mechanism can be enabled with:

producer.setSendLatencyFaultEnable(true)

Configure the number of retries with:

producer.setRetryTimesWhenSendFailed()

producer.setRetryTimesWhenSendAsyncFailed()

Consumer consumption retry

A typical question when message middleware is used for asynchronous decoupling is: what should happen if the downstream service fails to process a message?

RocketMQ’s message acknowledgment mechanism and consumption retry strategy help answer the following questions:

- How to ensure that the business processes every message completely?

When you design and implement consumer logic, the consumption retry strategy ensures that each message is processed in full and avoids inconsistent business state caused by some messages failing to be consumed.

- How to restore the state of in-flight messages when the business application fails?

When the system fails (for example, a machine goes down), how is the state of the messages being processed restored, and how exactly does consumption retry behave?

1. What is consumption retry?

When is consumption considered a failure?

After receiving the message, the consumer will call the user’s consumption function to execute the business logic. If the client returns consumption failure ReconsumeLater, an unexpected exception is thrown, or message processing times out (including queuing timeout in PushConsumer),As long as the server does not receive a response within a certain period of time, the consumption will be considered as a failure.What is consumption retry?

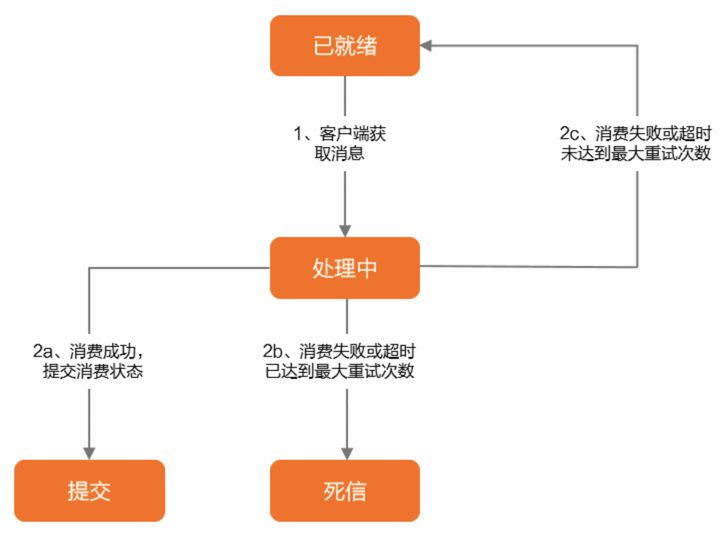

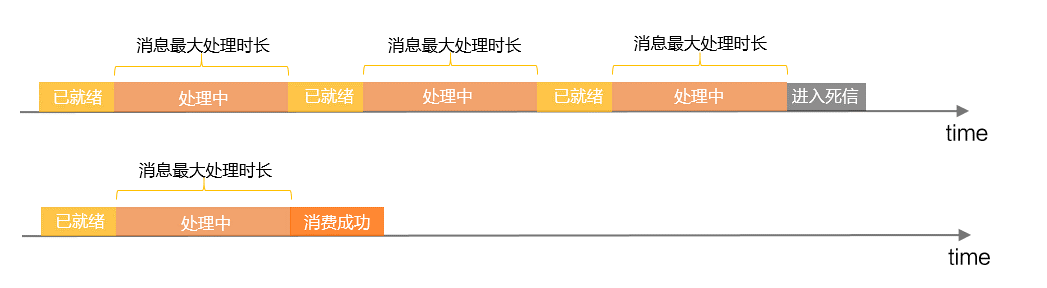

After the consumer fails to consume a certain message, the server will re-deliver the message to the client according to the retry strategy. If the consumption is not successful after exceeding a fixed number, the message will not be retried and will be sent directly to the dead letter queue;Retry Process State Machine: The status and change logic of the message in the retry process;

retry interval: After the previous consumption failed or timed out, the interval between the next retry consumption;

Maximum number of retries: The maximum number of times a message can be retried for consumption.

2. Scenarios for message retry

Note that retry exists to handle abnormal situations by giving the program another chance to consume a failed message. It should not be used as part of the normal processing path.

Recommended scenarios:

- Business processing failed for a reason related to the current message content, and it is expected to succeed after a period of time;

- Failure is a low-probability event: out of a large number of messages only a few fail, and subsequent messages will most likely be consumed successfully. Failure is the exception, not the normal state.

Positive example: the consumption logic deducts inventory. A very small number of deductions fail because of optimistic lock version conflicts, and a retry usually succeeds immediately.

Incorrect scenarios:

- Using consumption failure in the processing logic to branch on the result of a conditional check is unreasonable.

Counter-example: the order is already marked as canceled in the database. If a delivery message arrives at this point, the handler should not return consumption failure; it should return success and mark the order as not to be delivered.

- Using consumption failure to limit the processing rate or traffic is unreasonable.

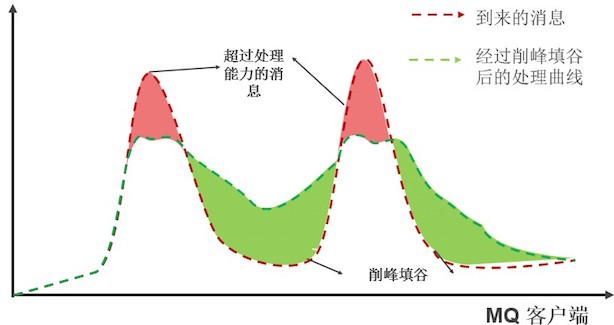

The purpose of rate limiting is to let the messages that exceed the allowed rate accumulate temporarily in the queue so that peaks are shaved, not to push those messages into the retry path.

Misusing retry this way causes messages to be transmitted back and forth between the server and the client and increases system overhead, mainly in the following ways:

- RocketMQ’s internal retry involves write amplification. Each retry generates a new retry message, so a large number of retries creates heavy IO pressure;

- Retry has complex backoff logic that is implemented internally as a stepped timer. The timer itself is not designed for high throughput, so a large number of retries prevents retry messages from being dequeued in time. The retry interval becomes unstable and many retry messages are consumed late, which greatly extends the peak-shaving period.

3. Do not use retry instead of throttling

The misuse above is in effect an attempt to shave peaks by combining rate limiting with retry. The peak-shaving approach that RocketMQ recommends is to combine rate limiting with message accumulation. To protect itself, the business limits its consumption traffic and relies on the accumulation capacity of RocketMQ to delay the messages that exceed its current processing capacity, which achieves peak shaving. The messages that exceed the processing capacity in the figure should accumulate on the server instead of being retried through consumption failure.

If you do not want to rely on additional products or components for this, you can use a local tool such as Guava’s RateLimiter for single-machine rate limiting. As shown below, declare a RateLimiter of 50 QPS and acquire a token in blocking mode before consuming each message. When a token is available immediately, the message is processed without blocking.

    RateLimiter rateLimiter = RateLimiter.create(50);
    PushConsumer pushConsumer = provider.newPushConsumerBuilder()
        .setClientConfiguration(clientConfiguration)
        // Set the consumer group name
        .setConsumerGroup(consumerGroup)
        // Set the subscription filter
        .setSubscriptionExpressions(Collections.singletonMap(topic, filterExpression))
        .setMessageListener(messageView -> {
            // Block until a token is acquired; a timeout can also be configured
            rateLimiter.acquire();
            LOGGER.info("Consume message={}", messageView);
            return ConsumeResult.SUCCESS;
        })
        .build();

4. PushConsumer consumption retry strategy

When PushConsumer consumes messages, a message passes through the following main states:

Ready: the ready state. The message is ready on the RocketMQ server and can be consumed by consumers;

Inflight: the processing state. The message has been obtained by the consumer client, and the consumption result has not yet been returned;

Commit: the committed state. Consumption succeeded and the consumer returned a success response, which ends the state machine of the message;

DLQ: the dead letter state. This is the final fallback of the consumption logic. If the message keeps failing and is retried until the maximum number of retries is exceeded, it is no longer retried and is delivered to the dead letter queue. You can recover the business by consuming messages from the dead letter queue.

Maximum number of retries

The maximum number of retries for a PushConsumer is determined when it is created.

For example, if the maximum number of retries is 3, the message can be delivered up to 4 times: 1 original delivery and 3 retry deliveries.
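The states and the delivery count can be modeled with a small state machine. This is a simplified sketch of the four states named above, not the server's implementation: `MessageStateMachine` is a hypothetical class, and waiting between retries is left out.

```java
/** Hypothetical model of the message states described in the text; not server code. */
final class MessageStateMachine {
    enum State { READY, INFLIGHT, COMMIT, DLQ }

    private final int maxRetries;
    private int deliveries = 0;
    private State state = State.READY;

    MessageStateMachine(int maxRetries) {
        this.maxRetries = maxRetries;
    }

    State state() {
        return state;
    }

    int deliveries() {
        return deliveries;
    }

    /** READY -> INFLIGHT: the message is handed to a consumer. */
    void deliver() {
        require(State.READY);
        deliveries++;
        state = State.INFLIGHT;
    }

    /** INFLIGHT -> COMMIT: the consumer returned success. */
    void succeed() {
        require(State.INFLIGHT);
        state = State.COMMIT;
    }

    /** INFLIGHT -> READY while retries remain, otherwise INFLIGHT -> DLQ. */
    void fail() {
        require(State.INFLIGHT);
        int retriesUsed = deliveries - 1; // the first delivery is not a retry
        state = retriesUsed < maxRetries ? State.READY : State.DLQ;
    }

    private void require(State expected) {
        if (state != expected) {
            throw new IllegalStateException("expected " + expected + " but was " + state);
        }
    }
}
```

With `maxRetries` set to 3, a message that fails every time is delivered 4 times in total and then ends in the DLQ state.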

Retry interval

- Non-sequential (unordered) messages: the retry interval is stepped and depends on the number of retries.

Note: if the number of retries exceeds 16, each subsequent retry interval is 2 hours.

- Sequential messages: the retry interval is fixed; the default is 3 seconds.
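As an illustration, the stepped intervals can be expressed as a lookup table. The 16 values below are an assumption taken from the retry interval table in the Apache RocketMQ documentation, not from this article, so verify them against the version you run. `PushRetryIntervals` is a hypothetical class name.

```java
import java.time.Duration;

/** Hypothetical lookup of PushConsumer retry intervals; step values assumed from the RocketMQ docs. */
final class PushRetryIntervals {
    // Assumed intervals for retries 1..16 of non-sequential messages.
    private static final Duration[] STEPS = {
        Duration.ofSeconds(10), Duration.ofSeconds(30),
        Duration.ofMinutes(1), Duration.ofMinutes(2), Duration.ofMinutes(3),
        Duration.ofMinutes(4), Duration.ofMinutes(5), Duration.ofMinutes(6),
        Duration.ofMinutes(7), Duration.ofMinutes(8), Duration.ofMinutes(9),
        Duration.ofMinutes(10), Duration.ofMinutes(20), Duration.ofMinutes(30),
        Duration.ofHours(1), Duration.ofHours(2)
    };

    private PushRetryIntervals() {}

    /** Interval before the given retry (1-based); retries beyond the 16th reuse the last step. */
    static Duration nonSequential(int retryCount) {
        if (retryCount < 1) {
            throw new IllegalArgumentException("retryCount must be >= 1");
        }
        return STEPS[Math.min(retryCount, STEPS.length) - 1];
    }

    /** Sequential messages use a fixed interval; 3 seconds is the default stated in the text. */
    static Duration sequential() {
        return Duration.ofSeconds(3);
    }
}
```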

5. SimpleConsumer consumption retry strategy

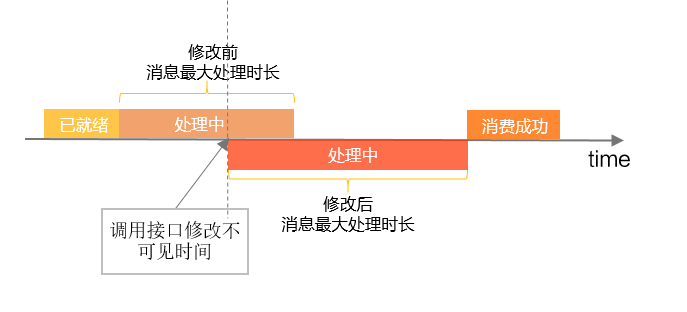

Unlike PushConsumer, the retry interval of SimpleConsumer is pre-allocated. Each time the consumer receives messages, it sets an invisible time parameter, InvisibleDuration, in the API call, which is the maximum processing time of the message. If consumption fails and triggers a retry, there is no need to set an interval for the next retry; the value of the invisible time parameter is reused directly.

Because the invisible time is pre-allocated, it may differ considerably from the actual processing time in the business. You can change the invisible time through the API.

For example, suppose the preset maximum processing time is 20 ms, but the business cannot finish processing the message within 20 ms. You can then change the invisible time of the message to extend the processing time and keep the message from triggering the retry mechanism.

To change the invisible time of a message, the following conditions must be met:

- Message processing has not timed out

- The consumption result of the message has not been committed

The change takes effect immediately: the invisible time of the message is recalculated from the moment the API is called.
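The two conditions and the "recalculated from the moment of the call" rule can be modeled in a few lines. This is a hypothetical local model (`InvisibleLease` is not a RocketMQ class) intended only to show the timing logic, with the current time passed in explicitly.

```java
import java.time.Duration;
import java.time.Instant;

/** Hypothetical model of a message's invisible time; not part of the RocketMQ API. */
final class InvisibleLease {
    private Instant visibleAt;
    private boolean committed = false;

    InvisibleLease(Instant receivedAt, Duration invisibleDuration) {
        this.visibleAt = receivedAt.plus(invisibleDuration);
    }

    /** The moment at which the message becomes visible again and can be redelivered. */
    Instant visibleAt() {
        return visibleAt;
    }

    /** Marks the consumption result as committed (acknowledged). */
    void commit() {
        committed = true;
    }

    /**
     * Tries to change the invisible time. Succeeds only if the message has not
     * timed out and has not been committed; the new duration counts from 'now'.
     */
    boolean changeInvisibleDuration(Instant now, Duration newDuration) {
        if (committed || !now.isBefore(visibleAt)) {
            return false;
        }
        visibleAt = now.plus(newDuration);
        return true;
    }
}
```

For a message received at time 0 with a 20 ms invisible time, a change to 50 ms made at 10 ms moves the deadline to 60 ms. The same call made after the deadline, or after the message was committed, has no effect.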

The maximum number of retries is handled the same way as for PushConsumer.

The consumption retry interval of SimpleConsumer is controlled by the invisible time of the message:

Message retry interval = invisible time - actual message processing time

For example, if the invisible time is 30 ms and message processing returns a failure response after 10 ms, the next retry happens 20 ms later, so the retry interval is 20 ms. If the message has still not been processed and no result has been returned when the 30 ms expire, the message times out and is retried immediately, so the retry interval is 0 ms.
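The relationship between invisible time, processing time, and retry interval can be checked with a small calculation. This is only a worked version of the arithmetic; `SimpleConsumerRetryInterval` is a hypothetical helper, and the interval is clamped at zero for the timeout case.

```java
import java.time.Duration;

/** Hypothetical helper for the arithmetic: retry interval = invisible time - processing time. */
final class SimpleConsumerRetryInterval {
    private SimpleConsumerRetryInterval() {}

    /** Returns the remaining invisible time, or zero if processing used all of it. */
    static Duration retryInterval(Duration invisibleTime, Duration processingTime) {
        Duration remaining = invisibleTime.minus(processingTime);
        return remaining.isNegative() ? Duration.ZERO : remaining;
    }
}
```

With a 30 ms invisible time, a failure returned after 10 ms gives a 20 ms interval, and processing that takes the full 30 ms or longer gives 0 ms.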

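    // Consumption example: use SimpleConsumer to consume normal messages by actively receiving, processing, and acknowledging them.
    ClientServiceProvider provider1 = ClientServiceProvider.loadService();
    String topic1 = "Your Topic";
    FilterExpression filterExpression1 = new FilterExpression("Your Filter Tag", FilterExpressionType.TAG);
    SimpleConsumer simpleConsumer = provider1.newSimpleConsumerBuilder()
        // Set the consumer group.
        .setConsumerGroup("Your ConsumerGroup")
        // Set the endpoint.
        .setClientConfiguration(ClientConfiguration.newBuilder().setEndpoints("Your Endpoint").build())
        // Set the pre-bound subscription.
        .setSubscriptionExpressions(Collections.singletonMap(topic1, filterExpression1))
        // Long-polling wait time for receive requests (value chosen for illustration).
        .setAwaitDuration(Duration.ofSeconds(1))
        .build();
    List<MessageView> messageViewList = null;
    try {
        // SimpleConsumer must actively receive messages and process them.
        // The second argument is the invisible duration of the received messages.
        messageViewList = simpleConsumer.receive(10, Duration.ofSeconds(30));
        messageViewList.forEach(messageView -> {
            System.out.println(messageView);
            // After processing completes, call ack to commit the consumption result.
            // A message that is not acknowledged is considered to have failed consumption.
            try {
                simpleConsumer.ack(messageView);
            } catch (ClientException e) {
                e.printStackTrace();
            }
        });
    } catch (ClientException e) {
        // If receiving fails, for example because of system flow control, issue the receive request again.
        e.printStackTrace();
    }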

- Modify the invisible time of the message

Case: a product uses a message queue to decouple its “video rendering” business logic. The sender sends a task number, and the consumer processes the task after receiving the number. Because the consumer's business logic takes a long time, the task may still be unfinished when the same message is delivered to the consumer again, and the consumer can only return consumption failure. With this API, the consumer can renew the message by changing its invisible time, which gives precise control over the state of a single message.

simpleConsumer.changeInvisibleDuration();

simpleConsumer.changeInvisibleDurationAsync();

6. Functional Constraints and Best Practices

- Set the maximum consumption timeout and the maximum number of retries

Return an explicit success or failure to the server as soon as possible, and do not use a timeout (or, in some cases, a thrown exception) in place of a consumption failure result.

- Do not use the retry mechanism to limit business traffic

Incorrect example: if the current consumption rate is too high and triggers rate limiting, return consumption failure and wait for the next delivery.

Correct example: if the current consumption rate is too high and triggers rate limiting, delay fetching messages and consume them later.

- Both send retry and consumption retry can lead to the same message being consumed more than once, so the consumer should be designed to be idempotent

Correct example: the consumption logic of a system is to send a text message to a user. If the text message has already been sent successfully and the consumer application receives the message again, it should return consumption success.

Summary

This article introduced the basic concept of retry, the conditions that trigger retry when producers and consumers send and receive messages and how those retries behave, and best practices for fault tolerance when sending and receiving with RocketMQ.

Retry policies help us recover from random, short-lived transient failures and are a powerful mechanism for improving availability while tolerating errors. But keep in mind that “retry is selfish” in a distributed system: the client assumes its request is important and asks the server to spend more resources to process it. Blind retry design is not advisable. Sensible use of retry helps us build more resilient and reliable systems.
