Author: vivo Internet Storage Technology Team – Qiu Sidi

Data collection is the first link in building an enterprise big data system. However, the open source collection components currently available in the industry cannot fully meet enterprise needs for large-scale collection and effective collection governance, so most companies develop their own collection components. Drawing on practical experience from vivo’s log collection service, this article presents the key design ideas behind the design and development of a log collection Agent.

1. Overview

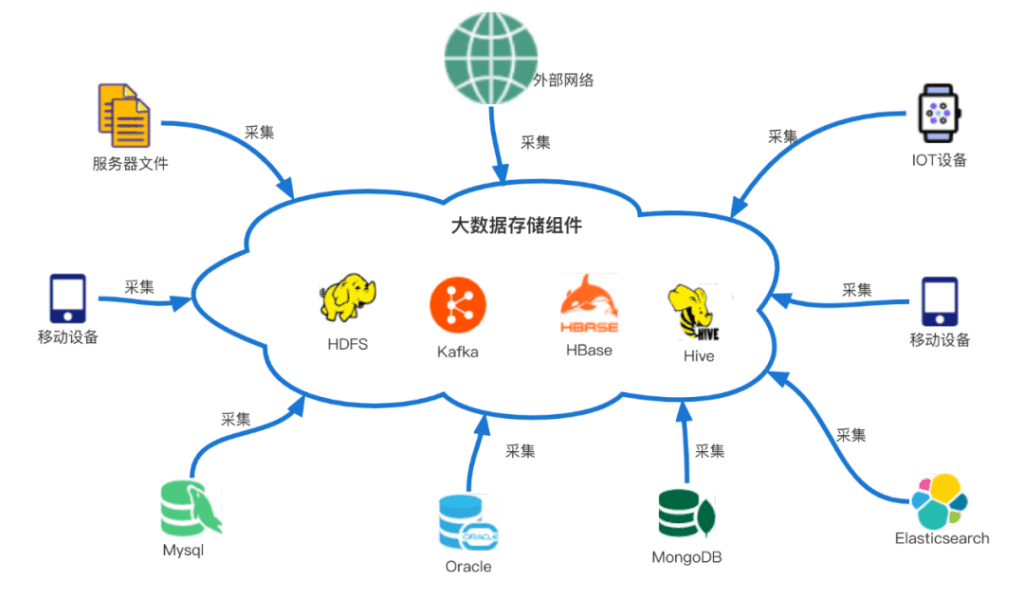

Data processing in an enterprise big data system generally consists of four steps: collection, storage, computation, and use. Collection comes first and is crucial: without collection there is no data, let alone any subsequent processing or use. The operational reports, decision reports, log monitoring, and audit logs we see in enterprises all rest on data collection. We generally define data collection as the process of converging data from scattered sources (including embedded logs of enterprise products, server logs, databases, and IoT device logs) into big data storage components (as shown below). Among the various collection types, log file collection is the most common scenario, and the design practice described next is built around it.

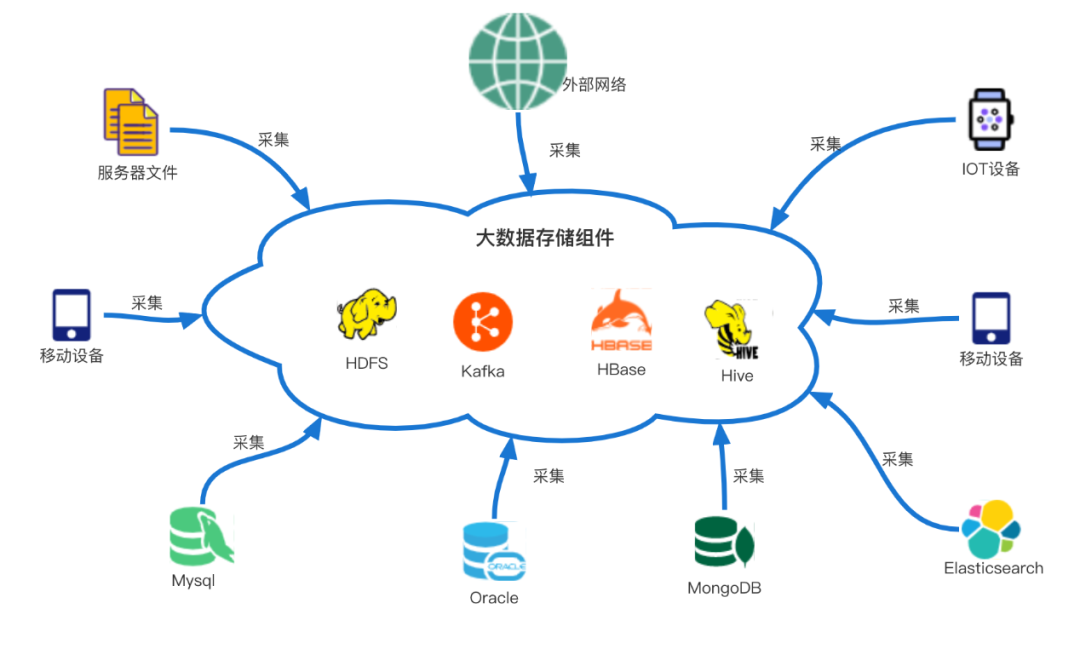

A log collection service can generally be divided into several parts (the common industry architecture is shown in the accompanying figure): log collection agent components (common open source ones include Flume, Logstash, and Scribe), transmission and storage components (such as Kafka and HDFS), and a collection management platform. Bees is the log collection service developed by vivo. Based on the development practice of bees-agent, the key component of the Bees collection service, this article summarizes the core technical points and key considerations in designing a general-purpose log collection Agent. We hope it is useful to you.

2. Features & Capabilities

Real-time and offline collection of log files

Non-intrusive collection based on log files

Customizable filtering of oversized logs

Custom filtered collection, matched collection, and formatting

Custom rate-limited collection

Second-level real-time collection latency

Resumption from breakpoints, so no data is lost during upgrades and shutdowns

A visual, centralized platform for managing collection tasks

Rich monitoring metrics and alarms (including collection traffic, timeliness, and integrity)

Low system resource overhead (including disk, memory, CPU, and network)

3. Design principles

Simple and elegant

Robust and stable

4. Key design

At present, popular log collection agent components in the industry include the open source Flume, Logstash, Scribe, Filebeat, and Fluentd, as well as Alibaba’s self-developed Logtail. They all offer good performance and stability, and they are a reasonable choice if you want to get started quickly. However, large enterprises generally have their own collection requirements, such as large-scale management of collection tasks, collection rate limiting, and collection filtering, as well as requirements for managing and visualizing collection tasks on a platform. To meet these requirements, we developed our own log collection Agent.

Before doing all the design and development, we set the most basic design principles of the collection Agent, namely simplicity, elegance, robustness and stability.

The general process of log file collection includes file discovery and monitoring, file reading, log content formatting, filtering, aggregation, and sending. Designing such a log collection Agent raises many key questions: Where are the log files? How do we discover newly created log files? How do we monitor content appended to a log? How do we identify a file? What happens when the system restarts after a crash? How do we resume from a breakpoint? The following sections answer these questions one by one. (Note: the file paths and file names appearing below are demo samples, not real paths.)

4.1 Log file discovery and monitoring

How does the Agent know which log files to collect?

The simplest design is to list every log file path to be collected in the Agent’s local configuration file, such as /home/sample/logs/access1.log, /home/sample/logs/access2.log, and /home/sample/logs/access3.log. The Agent reads the configuration file to obtain the list of log files and then traverses the list to read the log content. In practice, however, log files are generated dynamically. Typical Tomcat business logs, for example, roll to a new file every hour, and the file name usually carries a timestamp, such as /data/sample/logs/access.2021110820.log, so configuring a fixed file list is not feasible.

We therefore describe the files to collect with a directory path plus a file name pattern, expressed as a regular expression or a wildcard (for convenience, wildcards are used throughout the rest of this article). Logs on a machine are generally stored in a fixed directory, such as /data/sample/logs/, and the file names roll according to some rule (such as a timestamp), for example access.2021110820.log, access.2021110821.log, and access.2021110822.log. The wildcard access.*.log matches this type of log. You can of course choose a regular expression that fits the matching granularity you need. With a wildcard, the Agent can match a whole batch of rolled log files.
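As a minimal sketch of the wildcard matching described above, the JDK's built-in glob support can be used. The class name LogFileMatcher is hypothetical and not part of bees-agent; only the pattern access.*.log comes from the article.

```java
import java.nio.file.FileSystems;
import java.nio.file.PathMatcher;
import java.nio.file.Paths;

/** Matches rolled log file names against a wildcard such as access.*.log. */
final class LogFileMatcher {
    private final PathMatcher matcher;

    LogFileMatcher(String wildcard) {
        // "glob:" selects the JDK's wildcard syntax; "regex:" is supported as well.
        this.matcher = FileSystems.getDefault().getPathMatcher("glob:" + wildcard);
    }

    /** Returns true if the file name (not the full path) matches the wildcard. */
    boolean matches(String fileName) {
        return matcher.matches(Paths.get(fileName));
    }

    public static void main(String[] args) {
        LogFileMatcher m = new LogFileMatcher("access.*.log");
        System.out.println(m.matches("access.2021110820.log"));
        System.out.println(m.matches("error.2021110820.log"));
    }
}
```

Note that access.*.log requires two dots, so a file that is still named access.log while it is being written would need its own pattern.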

How to continuously discover and monitor newly generated log files?

Since new log files will be dynamically generated hourly by other applications (such as Nginx, Tomcat, etc.), how can Agent use wildcards to quickly find this newly generated file?

The most obvious approach is polling: a scheduled task periodically checks whether new log files have appeared in the directory. This simple scheme has a problem, though. If the polling interval is too long, for example 5s or 10s, the timeliness of log collection cannot meet our needs; if it is too short, such as 500ms, the large number of fruitless checks consumes a lot of CPU. Fortunately, the Linux kernel provides an efficient file event monitoring mechanism: inotify. It can monitor file operations such as creation, deletion, and content modification, and the kernel notifies the application layer with a corresponding event. The inotify event mechanism is much more efficient than polling, and no CPU is wasted on idle checks. In Java, this mechanism is available on Linux through java.nio.file.WatchService; the core code looks like this:

    // Registers a directory with the watcher; repeated calls for the same path reuse the existing key.
    public synchronized BeesWatchKey watchDir(File dir, WatchEvent.Kind<?>... watchEvents) throws IOException {
        // Reject paths that do not exist or that point to a regular file.
        if (!dir.exists() || dir.isFile()) {
            throw new IllegalArgumentException("watchDir requires an existing directory, param: " + dir);
        }
        Path path = dir.toPath().toAbsolutePath();
        BeesWatchKey beesWatchKey = registeredDirs.get(path);
        if (beesWatchKey == null) {
            beesWatchKey = new BeesWatchKey(subscriber, dir, this, watchEvents);
            registeredDirs.put(path, beesWatchKey);
            logger.info("successfully watch dir: {}", dir);
        }
        return beesWatchKey;
    }

    // Convenience overload that watches create, delete, and modify events.
    public synchronized BeesWatchKey watchDir(File dir) throws IOException {
        WatchEvent.Kind<?>[] events = {
            StandardWatchEventKinds.ENTRY_CREATE,
            StandardWatchEventKinds.ENTRY_DELETE,
            StandardWatchEventKinds.ENTRY_MODIFY
        };
        return watchDir(dir, events);
    }

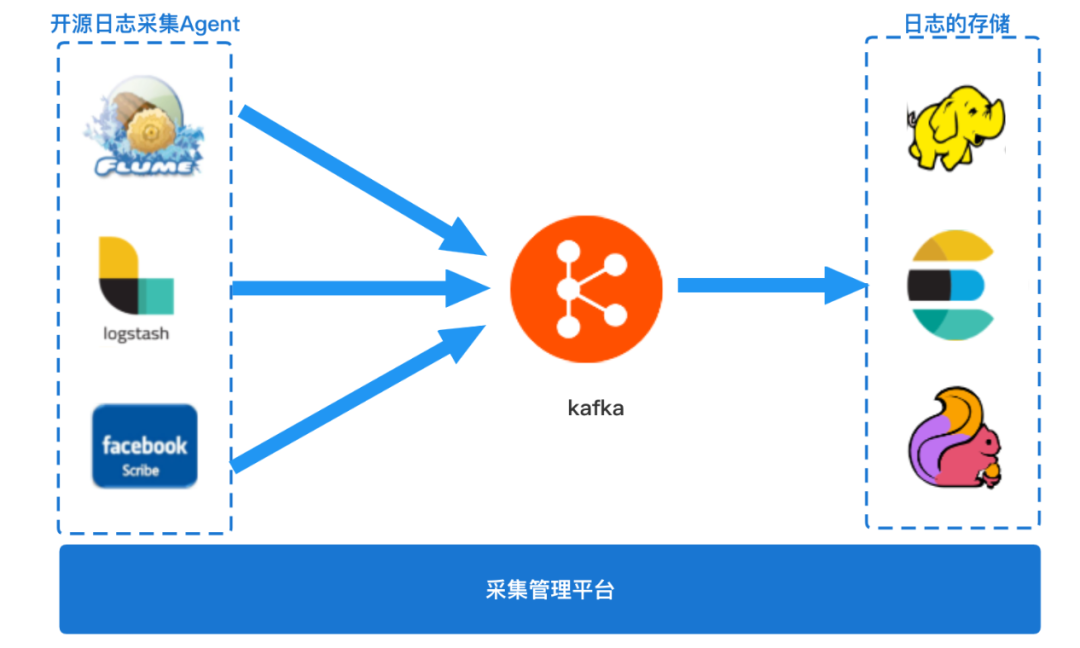

Based on the above considerations, for discovering log files and monitoring log content changes we adopted a design that combines “inotify as the primary mechanism, supplemented by polling” with “wildcard matching”, as shown in the accompanying figure.
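One way to combine event-driven discovery with a polling fallback is sketched below. It is an illustration under stated assumptions, not the bees-agent implementation: the class LogDirMonitor, its method names, and the rescan interval parameter are all hypothetical. The WatchService delivers creation events, and a periodic rescan catches anything an event missed (for example after an OVERFLOW).

```java
import java.io.IOException;
import java.nio.file.*;
import java.util.*;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

final class LogDirMonitor {
    /** Polling fallback: returns matching files not seen before and remembers them. */
    static List<Path> scan(Path dir, String glob, Set<Path> known) throws IOException {
        List<Path> fresh = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(dir, glob)) {
            for (Path p : stream) {
                if (known.add(p)) {
                    fresh.add(p);
                }
            }
        }
        Collections.sort(fresh);
        return fresh;
    }

    /** Event-driven discovery with a periodic rescan; blocks until interrupted. */
    static void watch(Path dir, String glob, long rescanSeconds, Consumer<Path> onNewFile)
            throws IOException, InterruptedException {
        Set<Path> known = new HashSet<>();
        PathMatcher matcher = dir.getFileSystem().getPathMatcher("glob:" + glob);
        try (WatchService watcher = dir.getFileSystem().newWatchService()) {
            dir.register(watcher, StandardWatchEventKinds.ENTRY_CREATE);
            scan(dir, glob, known).forEach(onNewFile); // files that already exist
            while (!Thread.currentThread().isInterrupted()) {
                WatchKey key = watcher.poll(rescanSeconds, TimeUnit.SECONDS);
                if (key == null) {
                    // No event within the interval: rescan in case one was missed.
                    scan(dir, glob, known).forEach(onNewFile);
                    continue;
                }
                for (WatchEvent<?> event : key.pollEvents()) {
                    if (event.kind() == StandardWatchEventKinds.OVERFLOW) {
                        scan(dir, glob, known).forEach(onNewFile);
                        continue;
                    }
                    Path name = (Path) event.context();
                    Path full = dir.resolve(name);
                    if (matcher.matches(name) && known.add(full)) {
                        onNewFile.accept(full);
                    }
                }
                key.reset();
            }
        }
    }
}
```

Because events do the real-time work, the rescan interval can be long (tens of seconds), which keeps the CPU cost of polling negligible.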

4.2 Unique identification of log files

The log file name cannot serve directly as the unique identifier, because file names may be reused frequently. For example, the logging frameworks used by some applications write the current log to a file without any timestamp in its name, such as access.log. Only when the hour is over is the file renamed to access.2021110820.log, and the newly created log file is again named access.log. From the collection Agent’s point of view the file name repeats, so it cannot be used as the unique identifier of a file.

We thought of using the inode number of the file on Linux as the file identifier. Unix/Linux file systems use inode numbers to identify files: even if a file is moved or renamed within the same file system, its inode number stays the same, and a newly created file is assigned its own inode number, so it can easily be distinguished from the other files on the disk. You can use ls -i access.log to view the inode number of a file quickly, as shown below:

    ls -i access.log
    62651787 access.log

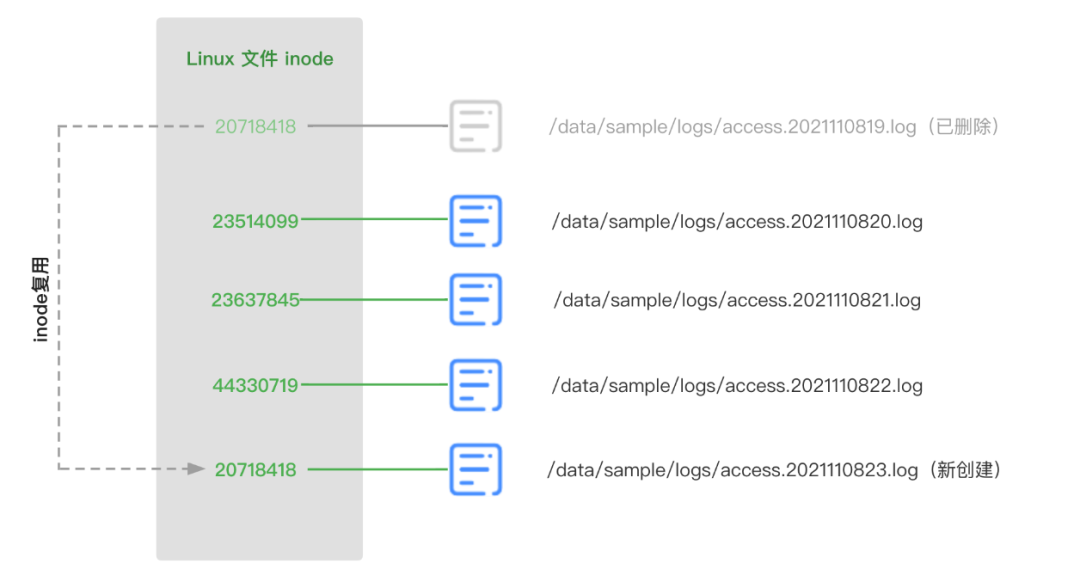

In most cases the inode number alone is a sufficient identifier, but the scheme can be made more rigorous. Inode numbers on Linux are reused, and “reuse” here must be distinguished from “duplication”. No two files on the same file system have the same inode number at the same time, but after a file is deleted, a newly created file may be given the inode number of the deleted one. If the code does not handle this case, log files are likely to be missed. To avoid the problem, we define the unique identifier of a file as the combination of its inode number and a file signature. The file signature is the hash of the first 128 bytes of the file content. Reference code:

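    // Returns the SHA-256 hash of the first SIGN_SIZE bytes, or null if the file is still shorter than that.
    public static String signFile(File file) throws IOException {
        String sign = null;
        // try-with-resources closes the file handle even if reading fails.
        try (RandomAccessFile raf = new RandomAccessFile(file, "r")) {
            if (raf.length() >= SIGN_SIZE) {
                byte[] tbyte = new byte[SIGN_SIZE];
                raf.seek(0);
                // readFully guarantees the whole buffer is filled before hashing.
                raf.readFully(tbyte);
                sign = Hashing.sha256().hashBytes(tbyte).toString();
            }
        }
        return sign;
    }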
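The combined identifier can be sketched with the JDK alone. This is an illustration, not the bees-agent code: the class FileIdentity and the "inode:signature" string format are assumptions, and the "unix:ino" attribute is only available on Unix-like file systems. MessageDigest is used here in place of the hashing library in the reference code.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

final class FileIdentity {
    static final int SIGN_SIZE = 128;

    /** Returns "inode:signature", or null while the file is shorter than SIGN_SIZE bytes. */
    static String identify(Path file) throws IOException {
        // "unix:ino" exposes the inode number on Unix-like file systems only.
        long inode = ((Number) Files.getAttribute(file, "unix:ino")).longValue();
        byte[] head = new byte[SIGN_SIZE];
        try (InputStream in = Files.newInputStream(file)) {
            if (in.readNBytes(head, 0, SIGN_SIZE) < SIGN_SIZE) {
                return null; // too short to sign yet; the caller can retry later
            }
        }
        return inode + ":" + sha256Hex(head);
    }

    private static String sha256Hex(byte[] data) {
        try {
            StringBuilder hex = new StringBuilder();
            for (byte b : MessageDigest.getInstance("SHA-256").digest(data)) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 should be available on every Java platform", e);
        }
    }
}
```

Renaming access.log to access.2021110820.log leaves this identifier unchanged, whereas a new file that happens to reuse the inode number will almost always differ in its first 128 bytes and therefore in its signature.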

A little background on inodes. Inodes can run out, and inode storage itself consumes disk space: the inode number is only a small part of an inode, which mainly holds the file’s metadata, such as its size in bytes, the location of its data blocks, its read, write, and execute permissions, and its timestamps. You can use the stat command to view the complete inode information of a file (stat access.log). Because of this design, the operating system divides the disk into two areas: a data area, which stores file data, and an inode area, which stores inode information. Each inode is generally 128 or 256 bytes. To view the total and used number of inodes on each partition, use the df -i command. Since every file must have an inode, a machine with a very large number of small log files may run out of inodes: the inode area is full even though the data area still has free space. At that point no new files can be created on the disk. The logging conventions should therefore avoid generating large numbers of small log files.

4.3 Reading of log content

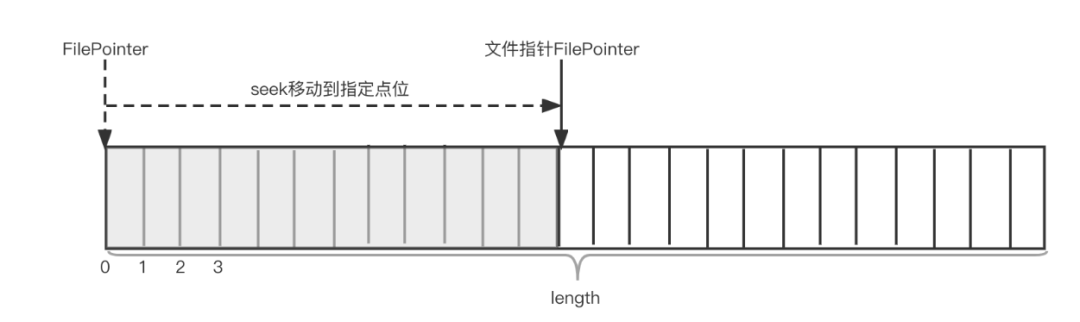

Once log files can be discovered and monitored, how should we read the content appended to them in real time? We want to read the log line by line, with lines separated by \n or \r. Obviously, we cannot simply read the file through an InputStreamReader, because a Reader can only read the file sequentially from beginning to end, which does not suit content that is appended in real time. The better choice is RandomAccessFile, which gives developers a settable file pointer for accessing any position in the file, as illustrated in the accompanying figure.

In this way, when the reading thread reaches the end of the file, it only needs to record the current position and wait. When new log content is written, the thread resumes and continues reading from where it last stopped; reference code follows. RandomAccessFile is also used to resume reading from a recorded position after the process hangs or recovers from a crash, without rereading the file from the beginning. Resuming from breakpoints is covered later.

    RandomAccessFile raf = new RandomAccessFile(file, "r");
    byte[] buffer;

    // Reads the next chunk starting at the current file pointer; the pointer advances by the bytes read.
    private void readFile() throws IOException {
        long remaining = raf.length() - raf.getFilePointer();
        // Size the buffer to the unread bytes, capped at BUFFER_SIZE.
        buffer = new byte[(int) Math.min(remaining, BUFFER_SIZE)];
        raf.readFully(buffer);
    }
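To show how the file pointer supports line-by-line tailing, here is a minimal sketch that reads only complete lines from a given offset and returns the offset to resume from. The class LineTailer, the 1 MB chunk cap, and the UTF-8 assumption are illustrative choices, not details from bees-agent.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.charset.StandardCharsets;
import java.nio.file.Path;
import java.util.List;

final class LineTailer {
    private static final int MAX_CHUNK = 1 << 20; // assumed cap of 1 MB per read

    /**
     * Reads complete lines starting at offset, appends them to out, and returns
     * the offset just past the last complete line. A trailing partial line is
     * left for the next call, once the writer has finished it.
     */
    static long readNewLines(Path file, long offset, List<String> out) throws IOException {
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "r")) {
            long end = raf.length();
            if (offset >= end) {
                return offset; // nothing new has been appended
            }
            raf.seek(offset);
            byte[] chunk = new byte[(int) Math.min(end - offset, MAX_CHUNK)];
            raf.readFully(chunk);
            int lineStart = 0;
            for (int i = 0; i < chunk.length; i++) {
                if (chunk[i] == '\n') {
                    // Drop a preceding '\r' so Windows-style line endings also work.
                    int lineEnd = (i > lineStart && chunk[i - 1] == '\r') ? i - 1 : i;
                    out.add(new String(chunk, lineStart, lineEnd - lineStart, StandardCharsets.UTF_8));
                    lineStart = i + 1;
                }
            }
            return offset + lineStart;
        }
    }
}
```

The returned offset is exactly the value a collector would persist as its collection point. A single line longer than the chunk cap would never complete in this sketch, so a real agent also needs a maximum line length and a policy for oversized lines.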

4.4 Realize resuming upload from breakpoint

Machine crashes, Java process restarts after OOM, and restarts for Agent upgrades are all common, so how do we keep the collected data correct in these situations? The answer is the Agent’s ability to resume from a breakpoint. During collection we record the current collection point (the position of the RandomAccessFile pointer after the last read, an integer value). Once the Agent has successfully sent the data in the corresponding buffer to Kafka, it updates the latest point value in memory, and a scheduled task (every 3s by default) persists the in-memory point value to a point file on the local disk. When the process stops and restarts, it loads the collection point from the point file and uses RandomAccessFile to seek to that position, so collection continues from where it last stopped and the Agent returns to its previous state. This is how resumption from a breakpoint is achieved, and it effectively avoids the risk of repeated or missed collection.

Each collection task of the Agent has a corresponding point file, so an Agent with multiple collection tasks has multiple point files. The content of a point file is a JSON array (as shown below), in which file is the name of the file collected by the task, inode is the inode number of the file, pos is short for position and holds the point value, and sign is the file signature:

    [
      {
        "file": "/home/sample/logs/bees-agent.log",
        "inode": 2235528,
        "pos": 621,
        "sign": "cb8730c1d4a71adc4e5b48931db528e30a5b5c1e99a900ee13e1fe5f935664f1"
      }
    ]
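Persisting the point file safely matters as much as its format: a crash halfway through a write must not leave a corrupt file behind. A minimal sketch, assuming a single entry per file and hand-built JSON (the class PointFile and its methods are hypothetical; a real implementation would use a JSON library and escape the path), writes to a temporary file and then renames it over the old one.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class PointFile {
    private static final Pattern POS = Pattern.compile("\"pos\"\\s*:\\s*(\\d+)");

    /** Writes one entry to a temp file, then renames it over the point file. */
    static void save(Path pointFile, String logFile, long inode, long pos, String sign)
            throws IOException {
        // Assumes logFile and sign contain no characters that need JSON escaping.
        String json = String.format(Locale.ROOT,
                "[{\"file\": \"%s\", \"inode\": %d, \"pos\": %d, \"sign\": \"%s\"}]",
                logFile, inode, pos, sign);
        Path tmp = pointFile.resolveSibling(pointFile.getFileName() + ".tmp");
        Files.write(tmp, json.getBytes(StandardCharsets.UTF_8));
        // On Linux the rename replaces the old file atomically.
        Files.move(tmp, pointFile,
                StandardCopyOption.REPLACE_EXISTING, StandardCopyOption.ATOMIC_MOVE);
    }

    /** Returns the saved position, or 0 when no point file exists yet. */
    static long loadPos(Path pointFile) throws IOException {
        if (!Files.exists(pointFile)) {
            return 0L;
        }
        String json = new String(Files.readAllBytes(pointFile), StandardCharsets.UTF_8);
        Matcher m = POS.matcher(json);
        return m.find() ? Long.parseLong(m.group(1)) : 0L;
    }
}
```

On restart, the value returned by loadPos is passed to RandomAccessFile.seek, after checking that the stored inode and sign still match the file on disk.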

4.5 Real-time data transmission

The previous sections covered real-time discovery of log files, real-time monitoring of log content changes, and reading of log content. Next, we introduce how the Agent sends data.

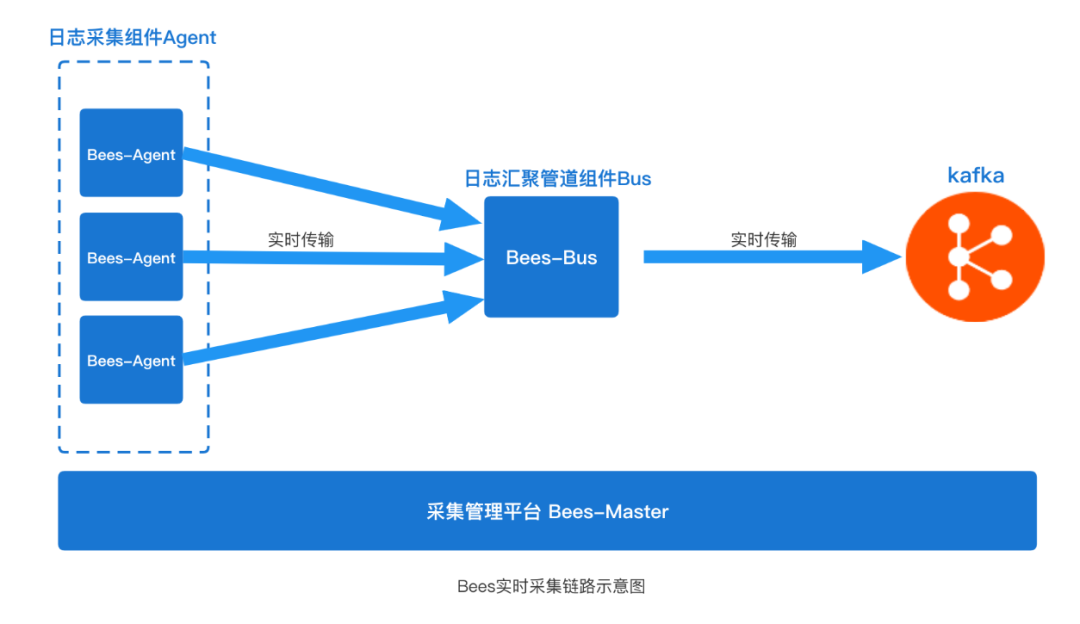

The simplest model is for the Agent to send data directly to Kafka through the Kafka client, which is a simple and feasible solution. In the Bees collection pipeline, however, we added a component called bees-bus to the data link between the Agent and Kafka (as shown in the accompanying figure).

The bees-bus component mainly aggregates data, similar to the role Flume plays as an aggregation layer in a collection pipeline. The Agent communicates with the Bus through a NettyRpcClient built on the open source Netty framework. Network transmission involves a lot of detail and is not the focus of this article (for details, refer to the implementation of Flume’s NettyAvroRpcClient).

As a supplement, our main reasons for introducing bees-bus are as follows:

It converges the large number of network connections from Agents, so that Agents do not all connect directly to the Kafka brokers and put heavy pressure on them;

Once data is aggregated on the Bus, the Bus can fan traffic out to multiple destinations, which enables Kafka disaster recovery across data centers;

When traffic surges, disk IO on the broker that hosts the topic partition becomes busy and back pressure reaches the clients. Because Kafka replica migration is time-consuming, recovery from such a problem is slow, and the Bus can act as a buffer layer.

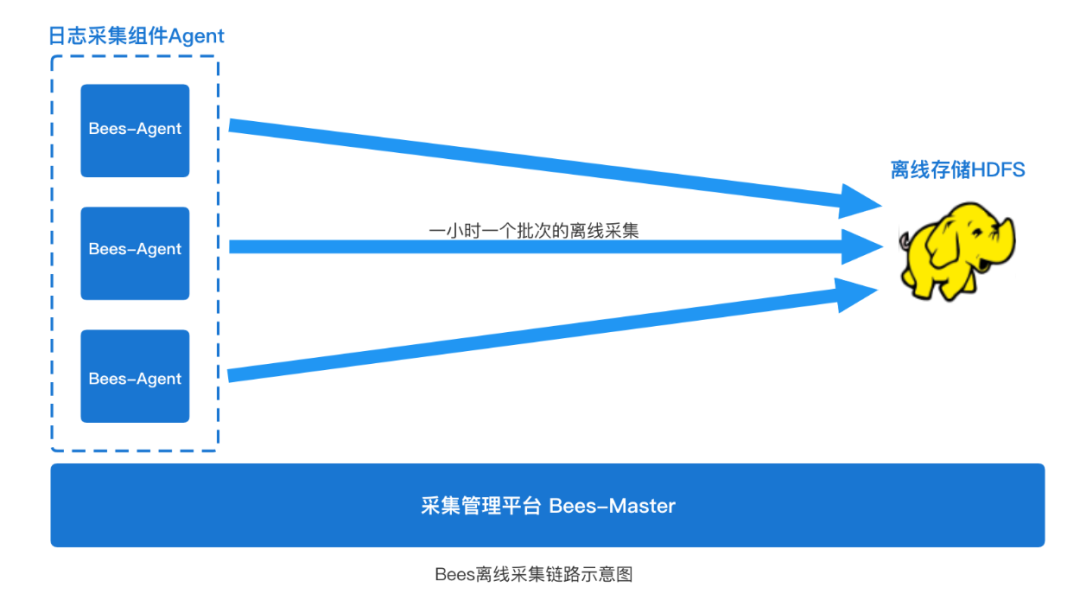

4.6 Offline Collection Capability

In addition to the common real-time scenario described above (logs are generally collected into message middleware such as Kafka), Bees also supports offline log collection. Offline log collection generally means collecting log files into HDFS (see the accompanying figure).

This log data is used to build the downstream Hive offline data warehouse and for offline report analysis. Timeliness requirements in this scenario are relaxed: the data is generally consumed by day (the T+1 data we often mention), so logs do not need to be collected line by line in real time. Offline collection can run in batches at fixed times. By default, every hour we collect the complete log file generated in the previous hour. For example, at 21:00 the collection Agent starts to collect the log file generated in the previous hour (access.2021110820.log), which holds the complete log content written during the 20:00 hour (20:00~20:59).

To provide offline collection, the Agent integrates the basic capabilities of the HDFS client. With FSDataOutputStream from the HDFS client, it can quickly PUT a file into an HDFS directory.

One point deserves special attention: offline collection needs a rate-limiting capability. By its nature, offline collection makes the Agents on all machines start collecting almost simultaneously around the top of each hour. If the log volume is large and collection is too fast, the company’s network bandwidth usage can spike and exceed the bandwidth limit of the whole network, which affects the normal services of other businesses and causes failures. Offline collection also places heavy demand on machine disk resources at the top of the hour; rate-limited collection effectively lowers this hourly peak and avoids affecting other services.
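Rate-limited collection can be sketched as simple pacing: each chunk reserves the time it is allowed to occupy at the configured rate, and the sender waits until its reserved slot begins. The class CollectRateLimiter is a hypothetical illustration; the article does not describe how bees-agent implements its limit.

```java
import java.util.concurrent.TimeUnit;

final class CollectRateLimiter {
    private final long bytesPerSecond;
    // Earliest time (in nanoTime units) at which the next chunk may be sent.
    private long nextFreeNanos = Long.MIN_VALUE;

    CollectRateLimiter(long bytesPerSecond) {
        if (bytesPerSecond <= 0) {
            throw new IllegalArgumentException("rate must be positive");
        }
        this.bytesPerSecond = bytesPerSecond;
    }

    /**
     * Reserves bandwidth for a chunk at time nowNanos and returns how long the
     * caller should wait before sending it. Chunks are assumed to be small
     * enough that bytes * 1e9 does not overflow a long.
     */
    synchronized long reserve(long bytes, long nowNanos) {
        long start = Math.max(nowNanos, nextFreeNanos);
        long costNanos = bytes * 1_000_000_000L / bytesPerSecond;
        nextFreeNanos = start + costNanos;
        return start - nowNanos;
    }

    /** Blocking convenience wrapper: sleeps until the chunk may be sent. */
    void acquire(long bytes) throws InterruptedException {
        long waitNanos = reserve(bytes, System.nanoTime());
        if (waitNanos > 0) {
            TimeUnit.NANOSECONDS.sleep(waitNanos);
        }
    }
}
```

A collector limited to 3MB/s would create new CollectRateLimiter(3L * 1024 * 1024) and call acquire(buffer.length) before sending each buffer. Passing the clock into reserve keeps the pacing logic testable without sleeping.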

4.7 Log File Cleanup Strategy

Business logs are generated continuously and written to the machine’s disk. The log file for a single hour may be tens of MB when small or tens of GB when large, so the disk can fill up within a few hours. New logs then cannot be written and are lost. Worse, the Linux operating system reports “No space left on device”, causing failures in other processes. The log files on the machine therefore need a cleanup strategy.

Our strategy is that every machine runs a shell log cleanup script by default. The script periodically checks the log files in a fixed directory, and the life cycle of a log file is set to 6 hours: once a log file is found to be more than 6 hours old, it is deleted (with the rm command).

Because log file deletion is not initiated by the collection Agent itself, collection may fail to keep up with deletion (collection lags by more than 6 hours). For example, a log file is still being collected when the cleanup script finds that it has reached its 6-hour life cycle and deletes it. To handle this case, the collection Agent only needs to keep its read handle on the log file open. Even if the cleanup process runs rm on the file (after rm, the file is only unlinked from the directory structure of the file system; its data is not removed from the disk until the last open handle is closed), the Agent can continue to collect the file normally through the open handle. This lagging state must not persist, however, because it carries a risk of filling the disk, and it needs to be detected through alarms. Fundamentally, load balancing or a reduction in log volume is needed so that the logs of a single machine do not remain uncollected for long.
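The unlink behavior can be demonstrated in a few lines. This sketch assumes a Linux or other Unix-like file system (on Windows the delete would fail while the file is open), and the class name UnlinkDemo is hypothetical.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

final class UnlinkDemo {
    /** Opens the file, deletes it, then reads it through the still-open handle. */
    static String readAfterDelete(Path file) throws IOException {
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "r")) {
            // Same effect as rm: the name is unlinked from the directory.
            Files.delete(file);
            // The data stays reachable through the open handle.
            byte[] data = new byte[(int) raf.length()];
            raf.readFully(data);
            return new String(data, StandardCharsets.UTF_8);
        }
        // Closing the handle lets the file system reclaim the disk space.
    }
}
```

This is also why a lagging collector is a disk risk: space held by deleted but still open files is not freed, even though the files no longer appear in the directory.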

4.8 System resource consumption and control

The Agent collection process is deployed on the same machine as the business processes and shares the machine’s resources (CPU, memory, disk, network) with them. The design must therefore limit the machine resources that the Agent consumes and also monitor that consumption. This ensures, on the one hand, that the business has stable resources to run normally and, on the other, that the Agent process itself runs normally. We usually adopt the following measures:

1. CPU consumption control.

CPU usage can be controlled conveniently with a Linux system-level CPU isolation mechanism such as taskset. With the taskset command, the collection process can be bound to a limited set of CPU cores when it starts (binding a process to cores means setting its CPU affinity; once set, the Linux scheduler runs the process and its threads only on the bound cores). The Agent and the business processes then do not interfere with each other’s CPU usage.

2. Memory consumption control.

Because the collection Agent is written in Java and runs on the JVM, memory can be controlled through the JVM heap parameters. bees-agent is configured with 512MB by default, the theoretical minimum is 64MB, and the value can be tuned according to the machine’s resources and the size of the log files being collected. In practice, the Agent’s memory usage is relatively stable, and the risk from memory consumption is small.

3. Disk consumption control.

The collection Agent is an IO-intensive process, so the disk IO load needs particular attention. There is no mature disk IO isolation solution at the system level, so control can only be implemented at the application layer. We need to know the baseline performance of the disk the process uses, and on that basis set the peak collection rate of the collection process (for example 3MB/s or 5MB/s) through the Agent’s own rate-limiting capability. In addition, basic monitoring and alarming on disk IO load and on the Agent’s collection rate are needed; these alarms, together with on-call analysis, further safeguard disk IO resources.

4. Network consumption control.

For the network, the focus is the bandwidth limit between data centers. A large number of Agents collecting logs at the same time must not push cross-data-center bandwidth to its limit and cause business failures. Network bandwidth usage therefore also needs monitoring and alarming. The relevant monitoring data is reported to the platform for aggregation, and the platform computes a reasonable collection rate and issues it to the Agents.

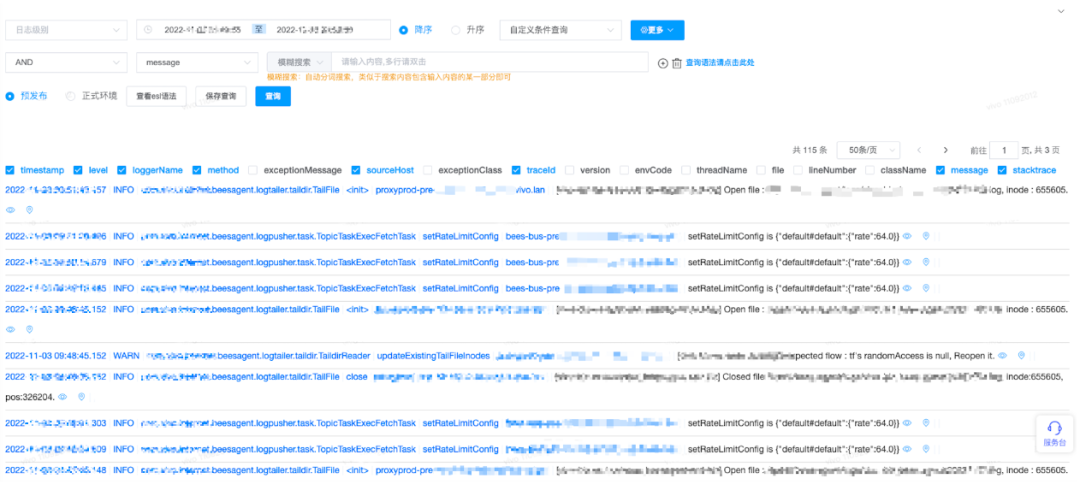

4.9 Self-log monitoring

To better monitor the status of all Agents online, we need an easy way to view the log4j logs of the Agent processes themselves. To achieve this, we treat the logs generated by the Agent as an ordinary collection task: the Agent process collects its own logs. All Agent logs are thus aggregated by the Agents to the downstream Kafka and then into the Elasticsearch storage engine, and can finally be viewed through Kibana or another log visualization platform.

4.10 Platform Management

The production environment currently has tens of thousands of Agent instances and tens of thousands of collection tasks. To operate, maintain, and manage these scattered and numerous Agents centrally and effectively, we designed a visual management platform. It provides the following Agent control capabilities: viewing the Agent versions running in production, managing Agent liveness heartbeats, delivering, starting, and stopping Agent collection tasks, and managing Agent collection rate limits. Note that the Agent communicates with the platform over plain HTTP: on a regular heartbeat (every 5 minutes by default), the Agent sends an HTTP request to the platform. The request body contains the Agent’s own information, such as idc, ip, hostname, and current collection task information, and the response body contains the task information the platform issues to the Agent, such as which tasks to start, which tasks to stop, and which task parameters have changed.
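The heartbeat exchange can be sketched with the JDK's HTTP client (Java 11 or later). Everything concrete here is an assumption for illustration: the class HeartbeatClient, the JSON field name tasks, the POST method, and the platform URI are not specified by the article, which only states that the request carries idc, ip, hostname, and task information and that the response carries task commands.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;
import java.util.function.Supplier;
import java.util.stream.Collectors;

final class HeartbeatClient {
    /** Builds the request body; values are assumed to need no JSON escaping. */
    static String buildBody(String idc, String ip, String hostname, List<String> tasks) {
        String taskJson = tasks.stream()
                .map(t -> "\"" + t + "\"")
                .collect(Collectors.joining(","));
        return "{\"idc\":\"" + idc + "\",\"ip\":\"" + ip + "\",\"hostname\":\"" + hostname
                + "\",\"tasks\":[" + taskJson + "]}";
    }

    /** Sends one heartbeat and returns the platform's response body (task commands). */
    static String send(HttpClient client, URI platform, String body)
            throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(platform)
                .header("Content-Type", "application/json")
                .timeout(Duration.ofSeconds(10))
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    /** Sends a heartbeat every 5 minutes and hands each response to onCommands. */
    static ScheduledExecutorService start(URI platform, Supplier<String> body,
                                          Consumer<String> onCommands) {
        HttpClient client = HttpClient.newHttpClient();
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        timer.scheduleAtFixedRate(() -> {
            try {
                onCommands.accept(send(client, platform, body.get()));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } catch (Exception e) {
                // A failed heartbeat must not cancel the schedule; the next one retries.
                System.err.println("heartbeat failed: " + e);
            }
        }, 0, 5, TimeUnit.MINUTES);
        return timer;
    }
}
```

Having the Agent pull its commands in the heartbeat response means the platform never needs to open connections to tens of thousands of machines, at the cost of commands taking up to one heartbeat interval to arrive.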

5. Comparison with open source capabilities

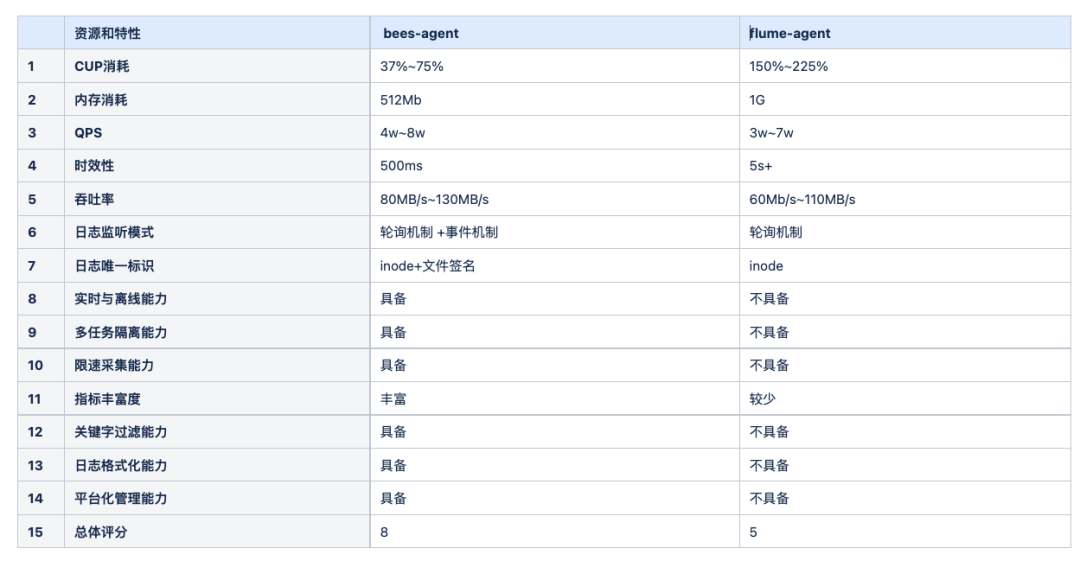

Comparison between bees-agent and flume-agent

Greatly reduced memory requirements. bees-agent adopts a channel-free design, which greatly reduces memory overhead. Each Agent can start with a theoretical minimum JVM heap of 64MB;

Better real-time performance. bees-agent uses the Linux inotify event mechanism; compared with the polling mechanism of the Flume Agent, it can collect data with a latency within 1s;

More accurate file identification. bees-agent uses the inode plus a file signature as the unique identifier of a log file, so log files are neither missed nor collected twice;

User resource isolation. bees-agent collects the tasks of different topics in separate threads, so they do not affect each other;

Truly graceful exit. At any time during normal collection, the platform’s “stop command” makes the Agent exit gracefully; it never gets stuck unable to exit, and no logs are lost;

Richer metrics. bees-agent reports collection rate, overall collection progress, machine information, JVM heap status, number of classes, JVM GC count, and more;

Richer customization. bees-agent offers keyword-matched collection, log formatting, platform management, and more.

6. Summary

This article has introduced some of the core technical points in the design of vivo’s log collection Agent: the discovery and monitoring of log files, the unique identifier of log files, the architecture of real-time and offline log collection, the log file cleanup strategy, control of the collection process’s system resource consumption, and the idea of platform management. These key design ideas cover most of the core functions of a self-developed collection Agent, along with the main difficulties and pain points, and should make subsequent development smoother and more efficient. Some advanced collection capabilities are not covered here, such as how to reconcile the integrity of collected log data and how to design collection for database-type scenarios; these are left for you to explore.

Since 2019, log collection for vivo’s big data business has been supported by the Bees data collection service. bees-agent has run stably in production for more than 3 years, with tens of thousands of bees-agent instances running, supporting the online collection of tens of thousands of log files and collecting PB-level logs every day. Practice has shown that the stability, robustness, rich functionality, performance, and reasonable resource usage of bees-agent all meet the original design expectations, and the design ideas in this article have proven effective time and again.

END
