Summary: In this article, we will introduce relevant indicators for testing, how to conduct large-scale testing, and how we can achieve large-scale cluster access.

This article is shared from the HUAWEI CLOUD community “Breaking through 100 times the cluster scale! Release of Karmada Large-Scale Test Report”, author: HUAWEI CLOUD Cloud Native Team.

Summary

As more enterprises and organizations adopt cloud-native technologies at scale, managing large resource pools efficiently and reliably to keep up with business growth has become a key challenge. For a long time, vendors tried to expand the scale of a single cluster by customizing native Kubernetes components. This increased the scale but also introduced problems such as complex single-cluster O&M and unclear cluster upgrade paths. Multi-cluster technology, by contrast, expands the resource pool horizontally without intrusive modifications to individual Kubernetes clusters, and reduces the cost of operations and maintenance while the pool grows.

As Karmada has been adopted at scale, its scalability has gradually become a new focus for community users. We therefore tested Karmada in a large-scale environment to obtain performance baselines for Karmada managing multiple Kubernetes clusters. For a multi-cluster system such as Karmada, the size of a single cluster is not the factor that limits the size of its resource pool. We therefore referred to the standard configuration of large-scale Kubernetes clusters and to users’ production practice, and tested the scenario of Karmada managing 100 Kubernetes clusters at the same time, each with 5k nodes and 20k Pods. Limited by the test environment and testing tools, this test does not try to find the upper limit of the Karmada multi-cluster system; it aims to cover the typical scenarios of using multi-cluster technology in production at scale. According to the analysis of the test results, a cluster federation with Karmada at its core can stably support 100 large-scale clusters and manage more than 500,000 nodes and 2 million Pods, which meets the needs of users in large-scale production.

In this article, we will introduce relevant indicators for testing, how to conduct large-scale testing, and how we can achieve large-scale cluster access.

Background

As cloud-native technology develops and its usage scenarios multiply, multi-cloud and distributed cloud have become a leading trend in cloud computing. According to a 2021 survey report by the analyst firm Flexera, more than 93% of enterprises use services from multiple cloud vendors at the same time. On the one hand, enterprises are limited by the business carrying capacity and fault recovery capabilities of a single Kubernetes cluster. On the other hand, in the current era of globalization, enterprises tend to choose a hybrid cloud or multi-public-cloud architecture to avoid lock-in to a single vendor, or for reasons such as cost. At the same time, users in the Karmada community have raised requirements for managing nodes and applications at scale across multiple clusters as they adopt Karmada.

Introduction

Karmada (Kubernetes Armada) is a Kubernetes management system that enables you to run your cloud-native applications across clusters and clouds without modifying the application. By using the Kubernetes native API and providing advanced scheduling capabilities on top of it, Karmada enables a truly open multi-cloud Kubernetes.

Karmada aims to provide complete automation for multi-cluster application management in multi-cloud and hybrid cloud scenarios. It has key features such as centralized multi-cloud management, high availability, failure recovery, and traffic scheduling.

The Karmada control plane consists of the following components:

- Karmada API Server

- Karmada Controller Manager

- Karmada Scheduler

etcd stores Karmada’s API objects, karmada-apiserver provides the REST endpoint through which all other components communicate, and karmada-controller-manager performs the corresponding reconciliation on the API objects you submit to karmada-apiserver.

karmada-controller-manager runs various controllers that watch Karmada objects and then send requests to member cluster apiservers to create regular Kubernetes resources.

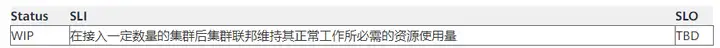

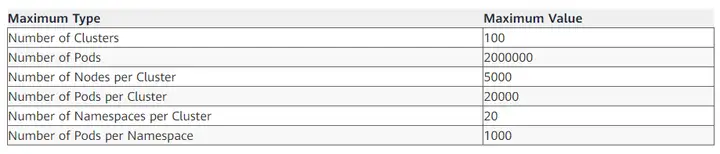

Dimensions and thresholds of the resource pool size of a multi-cluster system

The resource pool size of a multi-cluster system is not just the number of clusters, that is, Scalability != #Num of Clusters. In fact, it is measured along many dimensions, and considering only the number of clusters while ignoring the others is meaningless.

We describe the scale of a multi-cluster resource pool in terms of the three dimensions below, in order of priority:

- Num of Clusters: The number of clusters is the most direct and important dimension for measuring the resource pool size and carrying capacity of a multi-cluster system. With the other dimensions unchanged, the more clusters the system can connect, the larger its resource pool and the stronger its carrying capacity.

- Num of Resources (API Objects): The storage of a multi-cluster control plane is not unlimited, so the number and overall size of the resource objects created on the control plane are limited by that storage. This makes it another important dimension constraining the resource pool size of a multi-cluster system. The resource objects here include not only the resource templates delivered to member clusters, but also resources such as cluster scheduling policies and multi-cluster services.

- Cluster Size: Cluster size is a dimension that cannot be ignored when measuring the resource pool size of a multi-cluster system. On the one hand, for the same number of clusters, the larger each cluster, the larger the resource pool of the whole system. On the other hand, the upper-layer capabilities of a multi-cluster system depend on the system’s resource profile of each cluster; for example, cluster resources are an indispensable factor when scheduling multi-cluster applications. In short, the size of a single cluster is closely related to the multi-cluster system as a whole, but it is not the factor that limits it. Users can enlarge a single cluster by optimizing native Kubernetes components and thereby expand the resource pool of the whole system, but this is not the focus when measuring the performance of a multi-cluster system. In this test, the community referred to the standard configuration of large-scale Kubernetes clusters and to the capabilities of the test tools, and set the scale of the test clusters to match single-cluster configurations in real production environments. In the standard cluster configuration, Node and Pod are the two most important resources: a Node is the smallest carrier of resources such as computing and storage, and the number of Pods represents the application carrying capacity of a cluster. Single-cluster resource objects also include common resources such as Services, ConfigMaps, and Secrets. Introducing these variables would complicate the testing process, so this test does not focus on them.

For a multi-cluster system, it is clearly impossible to expand every dimension without limit while still meeting the SLIs/SLOs. The dimensions are not fully independent: stretching one compresses the others, and the balance can be adjusted to the usage scenario. Take Clusters and Nodes as an example: stretching each of 100 clusters from 5k to 10k nodes, or expanding to 200 clusters while keeping the same single-cluster specification, will inevitably affect the specifications of the other dimensions. Testing and analyzing every such scenario would be a huge workload, so in this test we focus on a typical configuration: while satisfying the SLIs/SLOs, Karmada connects and manages 100 clusters, each with 5k nodes and 20k Pods.
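The headline figures follow directly from the per-cluster specification. As a quick sanity check, here is a minimal Python sketch; the function and constant names are ours, chosen only for illustration, while the numbers come from the scenario described above.

```python
# Scale of the tested scenario, taken from the figures quoted in the text.
CLUSTERS = 100
NODES_PER_CLUSTER = 5_000
PODS_PER_CLUSTER = 20_000


def pool_size(clusters: int, nodes_per_cluster: int, pods_per_cluster: int) -> dict:
    """Return the aggregate node and Pod counts of a multi-cluster resource pool."""
    return {
        "nodes": clusters * nodes_per_cluster,
        "pods": clusters * pods_per_cluster,
    }


if __name__ == "__main__":
    print(pool_size(CLUSTERS, NODES_PER_CLUSTER, PODS_PER_CLUSTER))
    # {'nodes': 500000, 'pods': 2000000}

    # The two stretched scenarios mentioned above both double the node count.
    print(pool_size(200, NODES_PER_CLUSTER, PODS_PER_CLUSTER)["nodes"])  # 1000000
    print(pool_size(CLUSTERS, 10_000, PODS_PER_CLUSTER)["nodes"])  # 1000000
```

Both stretched scenarios land on the same total node count, which illustrates why the number of clusters and the cluster size have to be budgeted together rather than independently.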

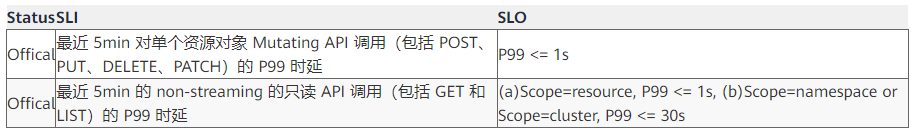

SLIs/SLOs

Scalability and performance are important properties of a multi-cluster federation. As users of a multi-cluster federation, we expect quality-of-service guarantees in both respects. Before running large-scale performance tests, we need to define the metrics to measure. Referring to the SLIs (Service Level Indicators) and SLOs (Service Level Objectives) of the Kubernetes community and to typical multi-cluster applications, the Karmada community defines the following SLIs/SLOs to measure the service quality of a multi-cluster federation.

- Resource Distribution Latency

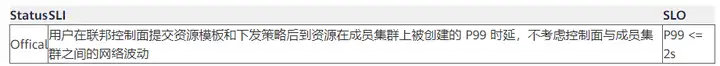

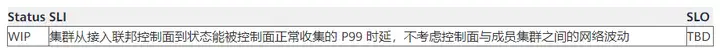

- Cluster Registration Latency

Note:

- The above metrics do not take into account the network fluctuations of the control plane and member clusters. Also, SLOs within a single cluster are not taken into account.

- Resource usage is a very important indicator for multi-cluster systems, but the upper-layer services provided by different multi-cluster systems are different, so the resource requirements for each system will also be different. We do not enforce a limit on this metric.

- The cluster registration delay is the delay from the cluster registration to the control plane to the availability of the cluster on the federation side. It depends somewhat on how the control plane gathers the state of the member clusters.
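Latency SLOs of this kind are usually stated as a percentile of observed samples staying under a threshold. The sketch below shows one way such a check could be computed in Python. It uses the nearest-rank percentile, and both the sample values and the 2-second threshold are hypothetical placeholders, not figures from this report.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def meets_slo(samples: list[float], pct: float, threshold_seconds: float) -> bool:
    """True when the pct-th percentile latency is within the threshold."""
    return percentile(samples, pct) <= threshold_seconds


if __name__ == "__main__":
    # Hypothetical latencies in seconds, for illustration only.
    latencies = [0.2, 0.3, 0.25, 0.4, 1.8]
    print(percentile(latencies, 50))  # 0.3
    print(percentile(latencies, 99))  # 1.8
    print(meets_slo(latencies, 99, threshold_seconds=2.0))  # True
```

Monitoring systems often interpolate between histogram buckets instead of using nearest rank, so values computed this way may differ slightly from what a dashboard shows.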

Test tools

ClusterLoader2

ClusterLoader2 is an open-source load testing tool for Kubernetes clusters. It can test the SLIs/SLOs defined by Kubernetes to verify whether a cluster meets its service quality standards, and it provides visualized data for locating cluster problems and optimizing cluster performance. At the end of a run, ClusterLoader2 outputs a Kubernetes cluster performance report showing the results for a series of performance indicators. However, ClusterLoader2 is built for a single Kubernetes cluster and cannot obtain the resources of all clusters in a multi-cluster scenario, so in the Karmada performance test we use it only to distribute the resources managed by Karmada.

Prometheus

Prometheus is an open-source monitoring and alerting tool that covers data collection, data reporting, and data visualization. After analyzing how ClusterLoader2 processes its monitoring indicators, we use Prometheus to monitor the control-plane indicators with specific query statements.
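The exact query statements used in the test are not listed here. As a rough sketch of what such a query can look like, the Python below builds a P99 API latency query over the `apiserver_request_duration_seconds_bucket` histogram exposed by Kubernetes-style API servers, and the URL for the Prometheus instant-query endpoint (`/api/v1/query`). The server address, the 5-minute window, and the label grouping are assumptions for illustration.

```python
from urllib.parse import urlencode

# Assumption: Prometheus is reachable at this address in your environment.
PROMETHEUS_URL = "http://localhost:9090"


def p99_api_latency_query(window: str = "5m") -> str:
    """PromQL for the 99th percentile API request latency per verb and resource."""
    return (
        "histogram_quantile(0.99, "
        f"sum(rate(apiserver_request_duration_seconds_bucket[{window}])) "
        "by (le, verb, resource))"
    )


def instant_query_url(base_url: str, promql: str) -> str:
    """URL for the Prometheus HTTP API instant-query endpoint."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


if __name__ == "__main__":
    # Print the URL; fetch it with any HTTP client to get a JSON result.
    print(instant_query_url(PROMETHEUS_URL, p99_api_latency_query()))
```

The sketch only constructs the request, so it can be run without a live Prometheus server.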

kind

kind is a tool for running Kubernetes clusters in containers. To test Karmada’s application distribution capabilities, we need a real single-cluster control plane to manage the applications distributed by the federated control plane. kind can simulate a real cluster while saving resources.

Fake-kubelet

Fake-kubelet is a tool that simulates nodes and maintains pods on virtual nodes. Compared with Kubemark, fake-kubelet only does the necessary work to maintain nodes and Pods. It is very suitable for simulating large-scale nodes and pods to test the performance of the control plane in a large-scale environment.

Test cluster deployment scheme

The Kubernetes control plane is deployed on a single master node, with etcd, kube-apiserver, kube-scheduler, and kube-controller-manager each running as a single instance. Karmada’s control plane components are deployed on the same master node, also as single instances. All Kubernetes and Karmada components run on high-performance nodes, and we do not limit their resources. We use kind to simulate a cluster with a single master node, and fake-kubelet to simulate the worker nodes in the cluster.

Test environment information

Control plane operating system version

Ubuntu 18.04.6 LTS (Bionic Beaver)

Kubernetes version

Kubernetes v1.23.10

Karmada version

Karmada v1.3.0-4-g1f13ad97

The node configuration where the Karmada control plane resides

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 64

On-line CPU(s) list: 0-63

Thread(s) per core: 2

Core(s) per socket: 16

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) Gold 6266C CPU @ 3.00GHz

Stepping: 7

CPU MHz: 3000.000

BogoMIPS: 6000.00

Hypervisor vendor: KVM

Virtualization type: full

L1d cache: 32K

L1i cache: 32K

L2 cache: 1024K

L3 cache: 30976K

NUMA node0 CPU(s): 0-31

NUMA node1 CPU(s): 32-63

Disk /dev/vda: 200 GiB, 214748364800 bytes, 419430400 sectors

Component parameter configuration

- karmada-apiserver

--max-requests-inflight=2000

--max-mutating-requests-inflight=1000

- karmada-aggregated-server

--kube-api-qps=200

--kube-api-burst=400

- (component name missing in the source)

--kube-api-qps=200

--kube-api-burst=400

- karmada-controller-manager

--kube-api-qps=200

--kube-api-burst=400

- (component name missing in the source)

--kube-api-qps=40

--kube-api-burst=60

Test execution

Before using ClusterLoader2 for performance testing, we need to define the performance testing strategy in a configuration file. The configuration file we use is as follows:

name: test

namespace:

number: 10

tuningSets:

- name: Uniformtinyqps

qpsLoad:

qps: 0.1

- name: Uniform1qps

qpsLoad:

qps: 1

steps:

- name: Create deployment

phases:

- namespaceRange:

min: 1

max: 10

replicasPerNamespace: 20

tuningSet: Uniformtinyqps

objectBundle:

- basename: test-deployment

objectTemplatePath: "deployment.yaml"

templateFillMap:

Replicas: 1000

- namespaceRange:

min: 1

max: 10

replicasPerNamespace: 1

tuningSet: Uniform1qps

objectBundle:

- basename: test-policy

objectTemplatePath: "policy.yaml"

# deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: {{.Name}}

labels:

group: test-deployment

spec:

replicas: {{.Replicas}}

selector:

matchLabels:

app: fake-pod

template:

metadata:

labels:

app: fake-pod

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: type

operator: In

values:

- fake-kubelet

tolerations:

- key: "fake-kubelet/provider"

operator: "Exists"

effect: "NoSchedule"

containers:

- image: fake-pod

name: {{.Name}}

# policy.yaml

apiVersion: policy.karmada.io/v1alpha1

kind: PropagationPolicy

metadata:

name: test

spec:

resourceSelectors:

- apiVersion: apps/v1

kind: Deployment

placement:

replicaScheduling:

replicaDivisionPreference: Weighted

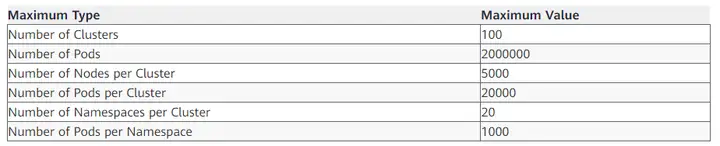

replicaSchedulingType: DividedThe detailed configuration of Kubernetes resources is shown in the following table:

For detailed test methods and procedures, please refer to

https://github.com/kubernetes/perf-tests/blob/master/clusterloader2/docs/GETTING_STARTED.md[1]
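To get a feel for the load that the ClusterLoader2 configuration above generates, the following Python sketch works through its arithmetic. It assumes the usual ClusterLoader2 reading of the fields: `replicasPerNamespace` is the number of objects created in each namespace, and the tuning set `qps` is the object creation rate. The duration is therefore only a rough lower bound that ignores API latency.

```python
def phase_totals(namespaces: int, objects_per_namespace: int, qps: float) -> tuple[int, int]:
    """Return (objects created, approximate seconds needed at the given creation rate)."""
    objects = namespaces * objects_per_namespace
    return objects, round(objects / qps)


if __name__ == "__main__":
    # Phase 1: 20 Deployments in each of 10 namespaces at 0.1 qps.
    deployments, deploy_seconds = phase_totals(10, 20, 0.1)
    # Phase 2: 1 PropagationPolicy in each of 10 namespaces at 1 qps.
    policies, policy_seconds = phase_totals(10, 1, 1)
    # Each Deployment template is filled with Replicas: 1000.
    pods = deployments * 1000

    print(deployments, deploy_seconds)  # 200 2000
    print(policies, policy_seconds)  # 10 10
    print(pods)  # 200000
```

Under these assumptions, the run distributes 200 Deployments, or 200,000 replicas in total, through 10 PropagationPolicies, spread over roughly half an hour of object creation.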

Test Results

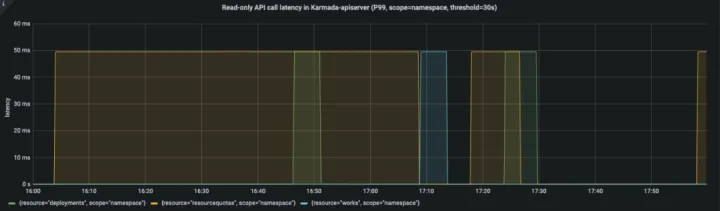

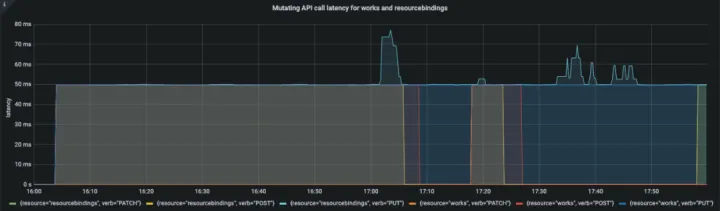

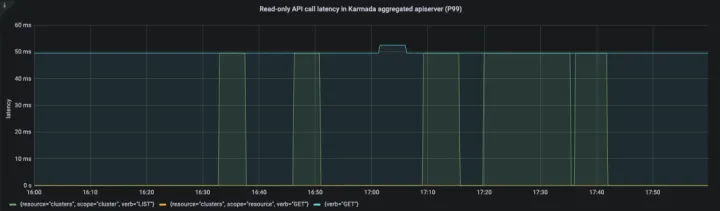

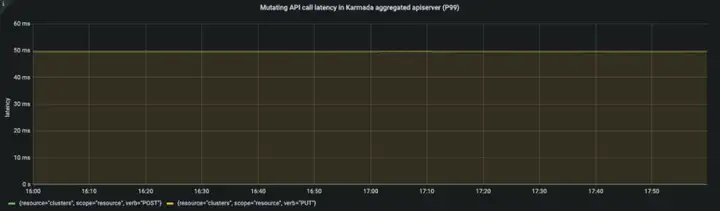

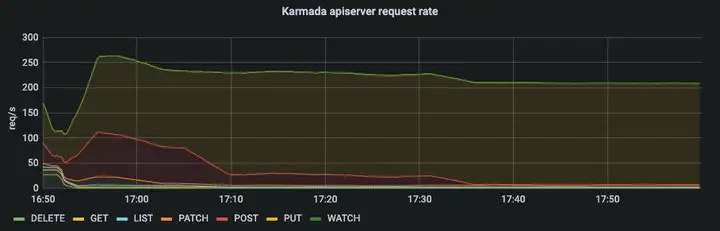

APIResponsivenessPrometheus:

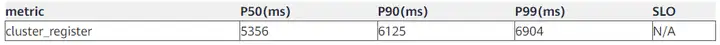

Cluster Registration Latency:

Note: Karmada’s Pull mode is suitable for private cloud scenarios. Unlike Push mode, in Pull mode the member cluster runs a component called karmada-agent, which pulls user-submitted applications from the control plane and runs them on the member cluster. Cluster registration in Pull mode includes the time needed to install karmada-agent. If the karmada-agent image is already available, that time depends largely on Pod startup latency within the member cluster. The registration latency in Pull mode is therefore not discussed here.

Resource Distribution Latency:

Push Mode

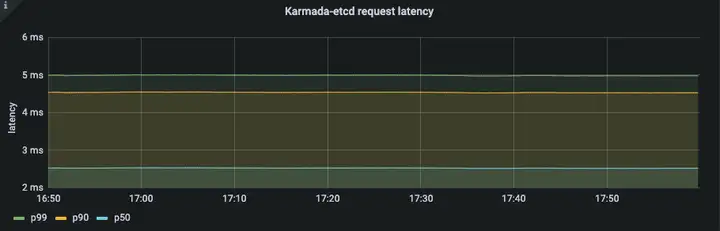

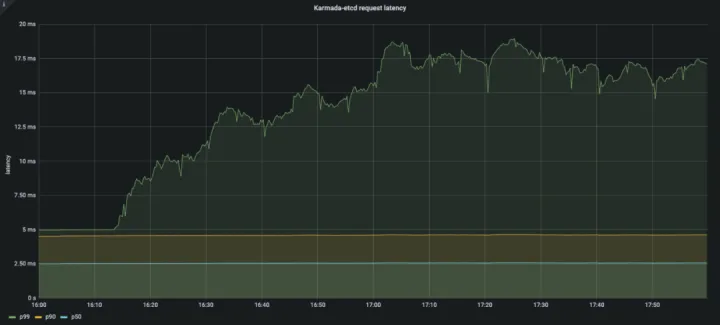

Etcd latency:

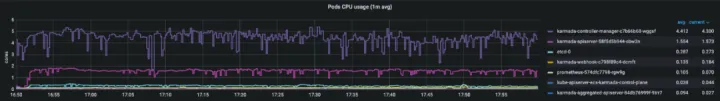

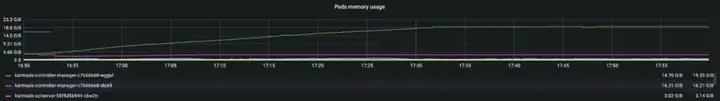

Resource Usage:

Pull Mode

Etcd latency:

Resource Usage:

The karmada-agent in the member cluster consumes 40m CPU (cores) and 266Mi of memory.

Conclusion and Analysis

In the test results above, API call latency and resource distribution latency both meet the SLIs/SLOs defined earlier, and the resources consumed by the system stay within a controllable range throughout. Therefore, Karmada can stably support 100 large-scale clusters and manage more than 500,000 nodes and 2,000,000 Pods. In production, Karmada can efficiently support hundreds of large-scale clusters. Next, we analyze the data for each test indicator in detail.

Separation of Concerns: Resource Templates and Policies

Karmada uses the Kubernetes native API to express resource templates for the cluster federation, and a reusable policy API to express cluster scheduling policies. This not only lets Karmada integrate easily with the Kubernetes ecosystem, but also greatly reduces the number of resources on the control plane. As a result, the number of resources on the control plane depends not on the number of clusters in the multi-cluster system but on the number of multi-cluster applications.

Karmada’s architecture inherits the simplicity and extensibility of the Kubernetes architecture. As the entry point of the control plane, karmada-apiserver is similar to Kubernetes’ kube-apiserver. You can tune these components with the same parameters you would use in a single-cluster configuration.

During the entire resource distribution process, the API call delay is within a reasonable range.

Cluster registration and resource distribution

In Karmada version 1.3, we provided the ability to register Pull mode clusters based on Bootstrap tokens. This method not only simplifies the process of cluster registration, but also enhances the security of cluster registration. Now whether it is Pull mode or Push mode, we can use the karmadactl tool to complete the cluster registration. Compared with Push mode, Pull mode will run a component called karmada-agent in the member cluster.

Cluster registration latency includes the time the control plane needs to collect the status of the member cluster. During cluster lifecycle management, Karmada collects the member cluster’s version, its list of supported APIs, and its health status. In addition, Karmada collects the resource usage of member clusters and models each cluster on that basis, so that the scheduler can better select target clusters for applications. In this case, cluster registration latency is closely related to cluster size. The metrics above show the latency from joining a 5,000-node cluster until it becomes available. You can turn off cluster resource modeling[2] to make cluster registration latency independent of cluster size; in that case, the cluster registration latency will be less than 2s.

In both Push mode and Pull mode, Karmada delivers resources to member clusters very quickly. The only difference is that karmada-controller-manager is responsible for distributing resources to all Push mode clusters, while each karmada-agent is responsible only for the Pull mode cluster in which it runs. Therefore, when delivering resources under high concurrency, Pull mode is faster than Push mode under the same configuration. You can also improve performance by adjusting the --concurrent-work-syncs parameter of karmada-controller-manager, which sets how many works are synced concurrently.

Resource usage comparison between Push mode and Pull mode

In Karmada 1.3, we did a lot of work to reduce Karmada’s resource usage when managing large clusters. We are happy to announce that Karmada 1.3 reduces memory consumption by 85% and CPU consumption by 32%. In general, Pull mode has a clear advantage in memory usage, while the other resources differ little.

In Push mode, the resource consumption of the control plane is mainly concentrated on karmada-controller-manager, while the pressure on karmada-apiserver is not great.

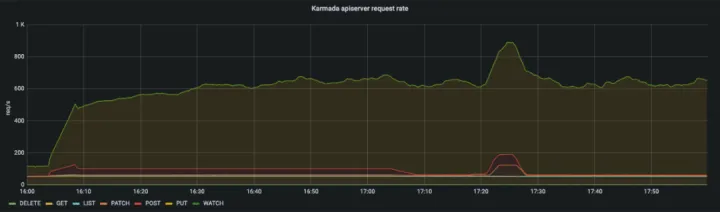

From the QPS of karmada-apiserver and the request latency of karmada-etcd, we can see that the request volume of karmada-apiserver stays at a low level. In Push mode, most requests come from karmada-controller-manager. You can configure the --kube-api-qps and --kube-api-burst parameters to keep the number of requests within a certain range.
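To illustrate how a QPS limit and a burst limit interact, here is a minimal token-bucket sketch in Python. It assumes the two flags behave like the token-bucket client rate limiting commonly used by Kubernetes clients: `qps` is the steady refill rate and `burst` is the bucket capacity. The class and function names are ours, and this is an illustration of the idea rather than Karmada’s implementation.

```python
class TokenBucket:
    """Minimal token bucket: refills at `qps` tokens per second, holds at most `burst`."""

    def __init__(self, qps: float, burst: int, now: float = 0.0) -> None:
        self.qps = qps
        self.burst = burst
        self.tokens = float(burst)  # start full, so an initial burst is allowed
        self.last = now

    def try_accept(self, now: float) -> bool:
        """Consume one token if available; `now` is a timestamp in seconds."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(float(self.burst), self.tokens + elapsed * self.qps)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def accepted(bucket: TokenBucket, now: float, attempts: int) -> int:
    """Count how many of `attempts` back-to-back requests pass at time `now`."""
    return sum(bucket.try_accept(now) for _ in range(attempts))


if __name__ == "__main__":
    bucket = TokenBucket(qps=200, burst=400)
    print(accepted(bucket, now=0.0, attempts=500))  # 400: the full burst
    print(accepted(bucket, now=0.5, attempts=500))  # 100: half a second of refill
```

With these values, a client can send up to 400 requests at once but settles at 200 requests per second under sustained load.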

In Pull mode, the resource consumption of the control plane is mainly concentrated on karmada-apiserver, not karmada-controller-manager.

From the QPS of karmada-apiserver and the request latency of karmada-etcd, we can see that the request volume of karmada-apiserver stays at a high level. In Pull mode, each member cluster’s karmada-agent needs to maintain a persistent connection with karmada-apiserver. It follows that, while applications are being delivered to all clusters, the total number of requests reaching karmada-apiserver is N times the rate configured in karmada-agent (N = #Num of clusters). Therefore, for large-scale Pull mode deployments, we recommend increasing the --max-requests-inflight and --max-mutating-requests-inflight parameters of karmada-apiserver and the --quota-backend-bytes parameter of karmada-etcd to improve the throughput of the control plane.
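The "N times" relationship can be made concrete with a few lines of Python. The per-agent limits used below (40 QPS, burst of 60) are illustrative assumptions, and the function name is ours.

```python
def aggregate_agent_rate(num_clusters: int, agent_qps: int, agent_burst: int) -> dict:
    """Worst-case request rate at karmada-apiserver if every agent uses its full budget."""
    return {
        "sustained_qps": num_clusters * agent_qps,
        "burst": num_clusters * agent_burst,
    }


if __name__ == "__main__":
    # Assumed per-agent limits, for illustration only.
    print(aggregate_agent_rate(num_clusters=100, agent_qps=40, agent_burst=60))
    # {'sustained_qps': 4000, 'burst': 6000}
```

This is an upper bound rather than a prediction, since agents rarely all hit their limits at the same moment, but it shows why the API server and etcd need headroom that grows with the number of Pull mode clusters.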

Karmada now provides a cluster resource model[3], which makes it possible to make scheduling decisions based on the idle resources of each cluster. During resource modeling, it collects information about all nodes and Pods in the clusters, which consumes a certain amount of memory in large-scale scenarios. If you do not use this capability, you can turn off cluster resource modeling[4] to further reduce resource consumption.

Summary and Outlook

According to the analysis of test results, Karmada can stably support 100 large-scale clusters, manage more than 500,000 nodes and 2 million Pods.

In terms of usage scenarios, Push mode is suitable for managing Kubernetes clusters on public clouds, while Pull mode additionally covers private cloud and edge scenarios. In terms of performance and security, the overall performance of Pull mode is better than that of Push mode, and each cluster is managed by its own karmada-agent and is completely isolated. However, while Pull mode improves performance, karmada-apiserver and karmada-etcd must be tuned accordingly to cope with high-traffic, high-concurrency scenarios. For specific methods, refer to the optimization of large-scale Kubernetes clusters. In general, users can choose a deployment mode according to their usage scenario and improve the performance of the whole multi-cluster system through parameter tuning and other means.

Due to the limitations of the test environment and test tools, this test did not probe the upper limit of the Karmada multi-cluster system. Performance testing of multi-cluster systems is also still at an early stage. Next, we will continue to improve the test tools for multi-cluster systems and organize the test methods more systematically to cover larger scales and more scenarios.

References

[1] https://github.com/kubernetes/perf-tests/blob/master/clusterloader2/docs/GETTING_STARTED.md

[2] Turn off cluster resource modeling: https://karmada.io/docs/next/userguide/scheduling/cluster-resources#disable-cluster-resource-modeling

[3] Cluster resource model: https://karmada.io/docs/next/userguide/scheduling/cluster-resources

[4] Turn off cluster resource modeling: https://karmada.io/docs/next/userguide/scheduling/cluster-resources#disable-cluster-resource-modeling
