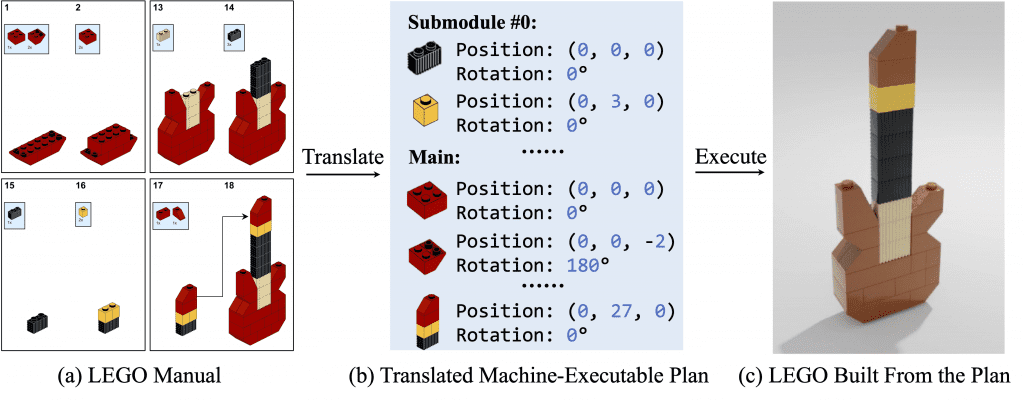

MEPNet is a learning-based framework that translates image-based, step-by-step assembly manuals created by human designers into machine-understandable instructions.

The researchers formulate the problem as a sequential prediction task: at each step, the model reads the manual, locates the parts to be added to the current shape, and infers their poses in 3D space. The task poses two challenges: establishing a 2D-to-3D correspondence between the manual images and the real 3D objects, and predicting 3D poses for unseen objects, since the part added in a given step may be either a brand-new individual brick or an object assembled in previous steps.

Run the following commands to install the necessary dependencies.

conda create -n lego_release python=3.9.12

conda activate lego_release

pip install -r requirements.txt

According to this documentation, PyTorch3D 0.5.0 may need to be installed manually.
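Because the setup pins specific versions (Python 3.9.12, PyTorch3D 0.5.0), it can help to confirm them after installation. The following is a minimal sketch, not part of the MEPNet scripts: `check_version` is a hypothetical helper that only compares a version string you pass in against the expected one, and the example commands in the comments assume you run them inside the activated `lego_release` environment.

```shell
# Hypothetical helper (not from the MEPNet repository): compare an installed
# version string with the version the setup instructions expect.
# Prints a status line and returns 0 on an exact match, 1 otherwise.
check_version() {
  local name="$1" actual="$2" expected="$3"
  if [ "$actual" = "$expected" ]; then
    echo "$name $actual: OK"
    return 0
  fi
  # An empty string usually means the import or command failed.
  echo "$name: found '${actual:-not installed}', expected $expected" >&2
  return 1
}

# Example usage inside the activated lego_release environment:
#   check_version Python "$(python -c 'import platform; print(platform.python_version())')" 3.9.12
#   check_version PyTorch3D "$(python -c 'import pytorch3d; print(pytorch3d.__version__)' 2>/dev/null)" 0.5.0
```

An exact string match is used rather than a "minimum version" comparison, because the instructions name one specific PyTorch3D release.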

Download the evaluation dataset and model checkpoint from here, unzip them into the root of the code repository, and run:

bash scripts/eval/eval_all.sh

Results will be saved to results/.
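The evaluation step can be wrapped so that it fails early when the script is missing and confirms that `results/` was produced. This is a minimal sketch under stated assumptions: `run_eval` is a hypothetical function, it assumes the checkpoint and evaluation data are already unzipped in the repository root, and it does not check their exact file names because those are not given here.

```shell
# Hypothetical wrapper (not part of the MEPNet repository): run the evaluation
# script from a given checkout and confirm that results/ was created.
# Assumes the checkpoint and evaluation data are already unzipped there.
run_eval() {
  local repo_root="${1:-.}"
  (
    # Subshell: the cd does not leak into the caller's shell.
    cd "$repo_root" || exit 1
    if [ ! -f scripts/eval/eval_all.sh ]; then
      echo "scripts/eval/eval_all.sh not found in $repo_root" >&2
      exit 1
    fi
    bash scripts/eval/eval_all.sh || exit 1
    if [ ! -d results ]; then
      echo "evaluation finished but results/ was not created" >&2
      exit 1
    fi
    echo "results written to $repo_root/results"
  )
}

# Example usage:
#   run_eval /path/to/your/checkout
```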

To train a model from scratch, first download the training and validation datasets from here and unpack them into data/datasets/synthetic_train and data/datasets/synthetic_val, respectively.
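Before preprocessing, a quick check that both datasets landed in the expected directories can save a failed run. The sketch below is a hypothetical helper, not part of the project: it only tests the two paths named in the instructions, relative to the repository root, and the "non-empty" requirement is an assumption about what a successful unpack looks like.

```shell
# Hypothetical check (not part of the MEPNet repository): verify that the
# training and validation sets were unpacked into the directories named in
# the instructions. Returns 1 if either directory is missing or empty.
check_dataset_layout() {
  local repo_root="${1:-.}" missing=0 dir
  for dir in data/datasets/synthetic_train data/datasets/synthetic_val; do
    if [ -d "$repo_root/$dir" ] && [ -n "$(ls -A "$repo_root/$dir")" ]; then
      echo "found: $dir"
    else
      echo "missing or empty: $dir" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage from the repository root:
#   check_dataset_layout .
```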

After downloading the datasets, run the following command to preprocess them:

bash scripts/process_dataset.sh

Then run the training script:

bash scripts/train/train_mepnet.sh
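The preprocessing and training steps above can be chained so that training never starts on a half-processed dataset. This is a minimal sketch, assuming both scripts are run from the repository root as shown; `preprocess_and_train` is a hypothetical name, and it forwards no extra arguments because the scripts' accepted arguments are not documented here.

```shell
# Hypothetical convenience wrapper (not part of the MEPNet repository): run
# preprocessing, then training, from the repository root, and stop before
# training if preprocessing exits with a non-zero status.
preprocess_and_train() {
  local repo_root="${1:-.}"
  (
    cd "$repo_root" || exit 1
    bash scripts/process_dataset.sh || {
      echo "preprocessing failed; not starting training" >&2
      exit 1
    }
    bash scripts/train/train_mepnet.sh
  )
}

# Example usage:
#   preprocess_and_train /path/to/your/checkout
```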

You can add the --wandb option to the training script to log and visualize training runs with wandb (Weights & Biases).
