1 The dilemma of the Java language in the cloud-native era

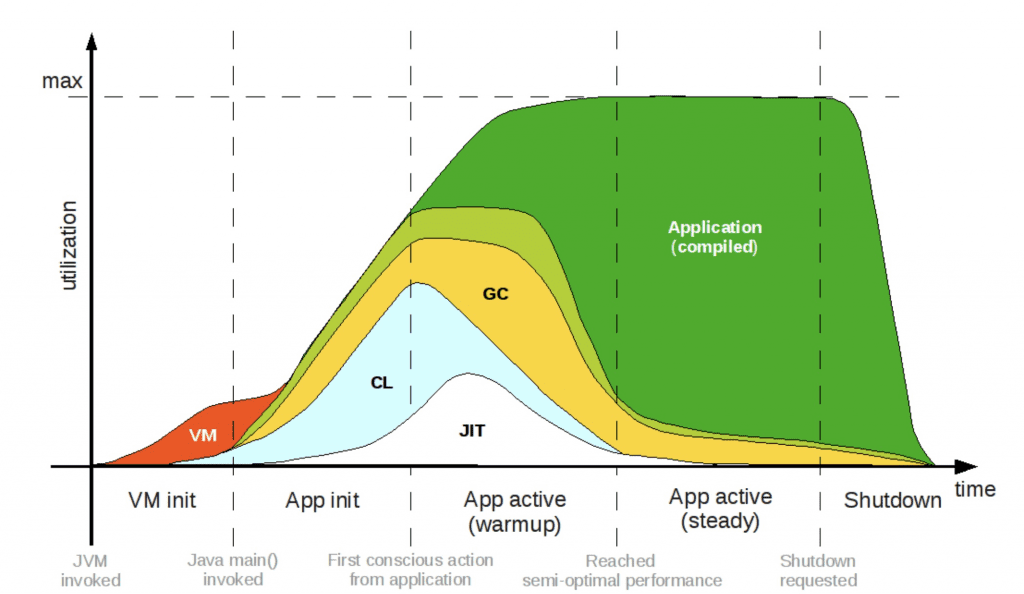

After years of evolution, the Java language keeps improving in both features and performance. Subsystems such as the just-in-time compiler and the garbage collector show Java's strengths, but an application has to run for some time before it benefits from them and reaches peak performance. In general, Java was designed for large, long-running server-side applications.

In the cloud-native era, Java's "compile once, run anywhere" advantage no longer matters much. With containerization, in principle any language can be deployed to the cloud. Meanwhile, Java applications cannot run without a JVM, and they often face the dilemma that the JDK uses more memory than the application itself. Java's dynamic class loading and unloading also mean that more than half of a built application image consists of unused code and dependencies, which further increases memory usage. On top of that, the long startup time and the long warm-up before reaching peak performance leave Java unable to compete with fast-starting languages such as Go and Node.js in scenarios like Serverless.

Schematic diagram of the running life cycle of a Java application

2 GraalVM

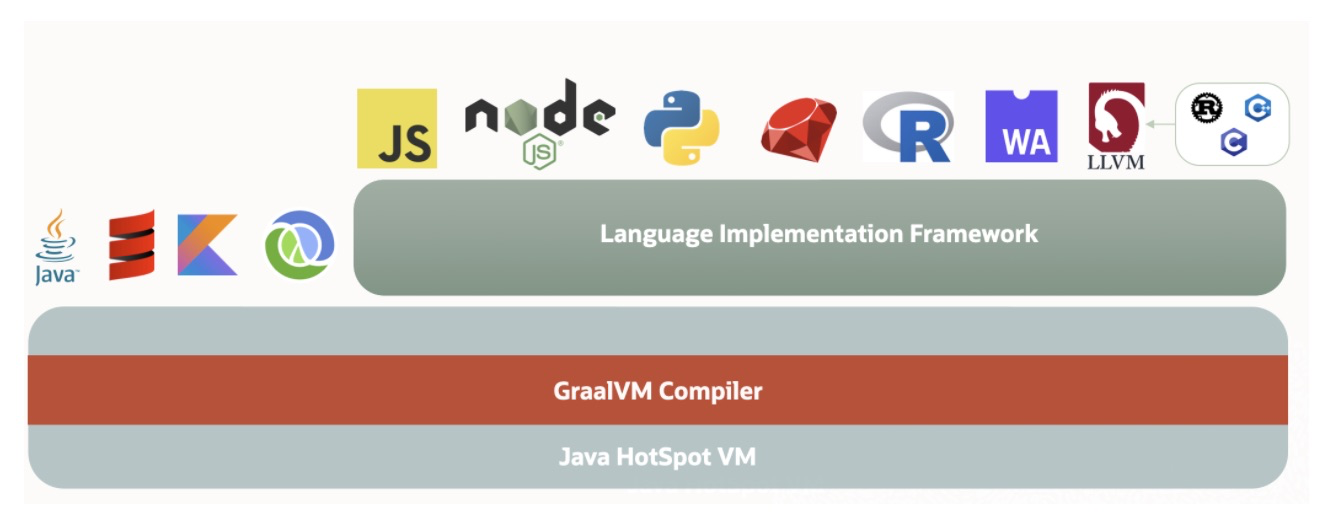

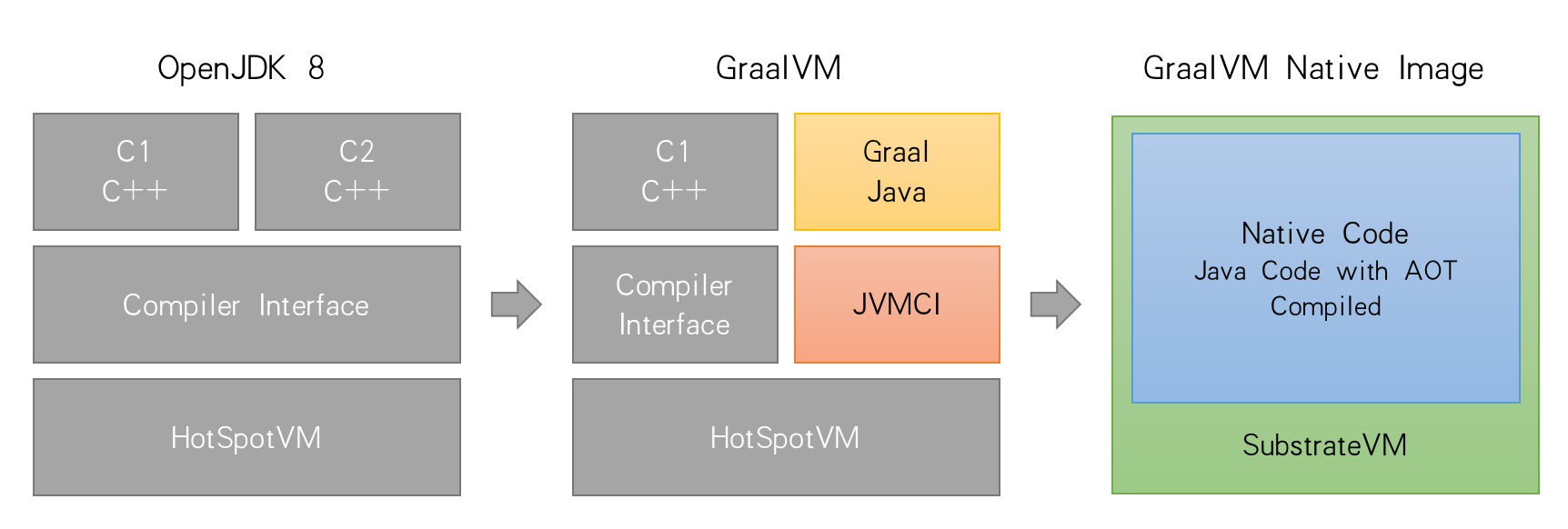

Given Java's difficulties in the cloud-native era, GraalVM may be the best remedy. GraalVM is an open-source, high-performance, multi-language runtime platform built on Java and released by Oracle Labs. It can run on a traditional OpenJDK, be compiled ahead of time (AOT) into a standalone executable, or even be embedded in a database. It also removes the boundaries between programming languages. Using just-in-time compilation, it can compile code that mixes different programming languages into the same binary, so execution can switch seamlessly between languages.

This article briefly introduces three ways GraalVM can change things for us:

1) The Graal compiler is written in Java, which makes it extremely valuable for learning about and researching virtual machine compilation technology. The server-side compiler written in C++ is extremely complex by comparison, so the Graal compiler greatly lowers the cost of learning and optimizing a compiler.

2) The static compilation framework Substrate VM gives Java a chance to compete with other languages in the cloud-native era. It greatly reduces the memory used by Java applications and can speed up startup by dozens of times.

3) Language interpreters, represented by Truffle and Sulong. Using the API Truffle provides, developers can quickly implement an interpreter for a language in Java. That language can then run on the JVM platform, which opens up many new possibilities for Java.

GraalVM multilingual support

3 GraalVM overall structure

    graal
    ├── CONTRIBUTING.md
    ├── LICENSE
    ├── README.md
    ├── SECURITY.md
    ├── THIRD_PARTY_LICENSE.txt
    ├── bench-common.libsonnet
    ├── ci-resources.libsonnet
    ├── ci.hocon
    ├── ci.jsonnet
    ├── ci_includes
    ├── common-utils.libsonnet
    ├── common.hocon
    ├── common.json
    ├── common.jsonnet
    ├── compiler
    ├── docs
    ├── espresso
    ├── graal-common.json
    ├── java-benchmarks
    ├── regex
    ├── repo-configuration.libsonnet
    ├── sdk
    ├── substratevm
    ├── sulong
    ├── tools
    ├── truffle
    ├── vm
    └── wasm

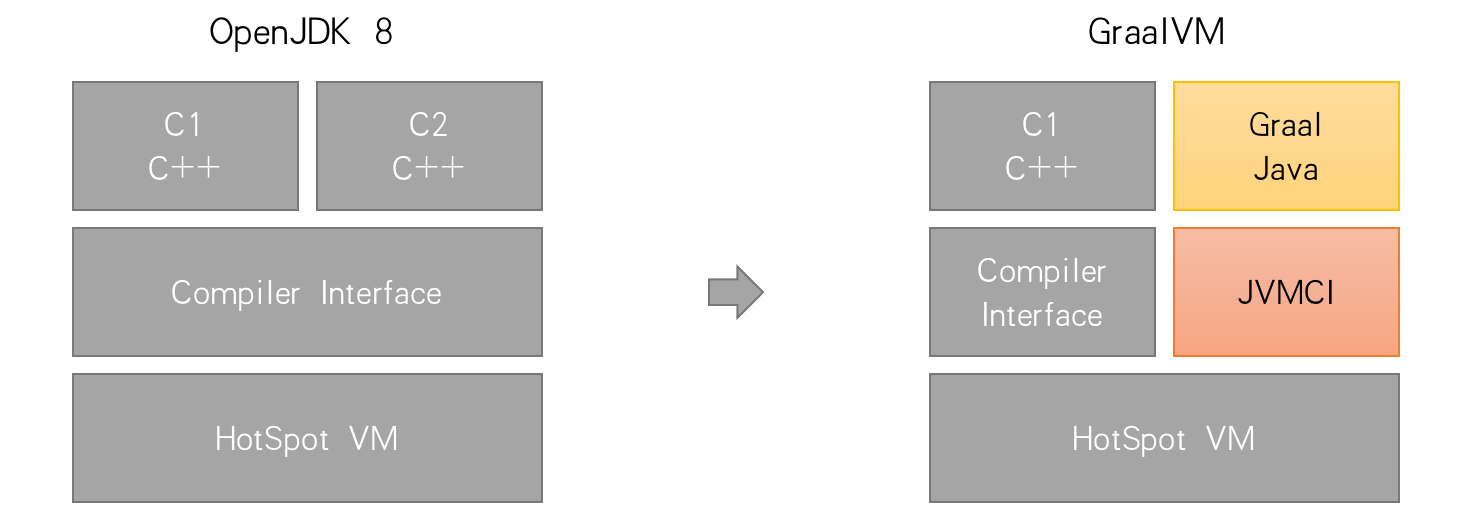

3.1 Compiler

The Compiler subproject, whose full name is the GraalVM compiler, is a compiler written in Java that translates Java bytecode into machine code. It compiles efficiently and produces high-quality output. It supports both ahead-of-time (AOT) and just-in-time (JIT) compilation, and it can serve as the compiler for different virtual machines, including HotSpot.

It uses the same kind of intermediate representation as C2 (a Sea of Nodes IR). For back-end optimization, it directly inherits many high-quality optimization techniques from HotSpot's server-side compiler.

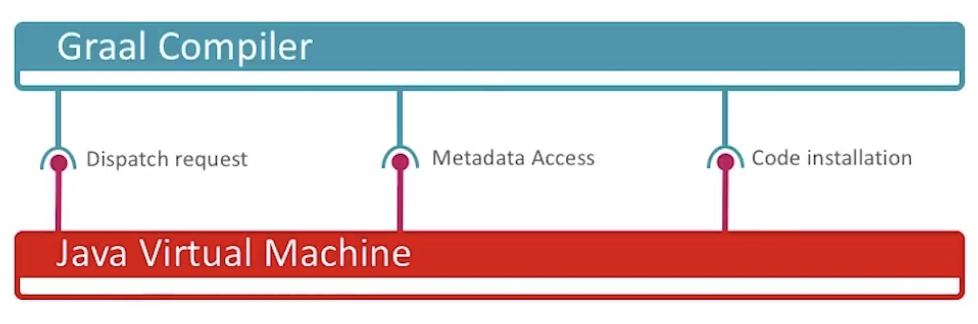

The Graal compiler is a server-side just-in-time compiler shared by GraalVM and the HotSpot VM (available in HotSpot from JDK 10 onwards), and it is regarded as the future replacement for the C2 compiler. To decouple the compiler from the Java virtual machine, Oracle introduced the Java-Level JVM Compiler Interface (JVMCI) in JEP 243 (https://openjdk.java.net/jeps/243). JVMCI extracts the compiler from the virtual machine and lets the two communicate through an interface.

Specifically, the interaction between the just-in-time compiler and the Java virtual machine can be divided into the following three aspects.

- Respond to compile requests;

- Obtain the metadata required for compilation (such as classes, methods, fields) and the profile that reflects the program execution status;

- Deploy the generated binaries to the code cache.
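The three interactions can be made concrete with a toy model in plain Java. Every type below (`ToyVmServices`, `ToyJitCompiler`, `ToyVm`, `ToyGraal`, `MethodInfo`) is hypothetical and much simpler than the real JVMCI API. The sketch only mirrors the division of labor listed above: the VM issues a compile request, the compiler queries method metadata and profile data, and any generated code is installed into a code cache. The hotness threshold is an arbitrary assumption.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, heavily simplified model of the VM <-> JIT compiler boundary.
// These types only mirror the three interactions in the text; they are NOT the JVMCI API.
record MethodInfo(String name, int invocationCount) {}

interface ToyVmServices {
    MethodInfo lookupMethod(String name);              // (2) metadata and profile
    void installCode(String name, String machineCode); // (3) deploy into the code cache
}

interface ToyJitCompiler {
    boolean compileMethod(String name, ToyVmServices vm); // (1) respond to a compile request
}

class ToyVm implements ToyVmServices {
    final Map<String, MethodInfo> methods = new HashMap<>();
    final Map<String, String> codeCache = new HashMap<>();

    @Override public MethodInfo lookupMethod(String name) { return methods.get(name); }
    @Override public void installCode(String name, String code) { codeCache.put(name, code); }

    // The VM decides a method is worth compiling and hands it to whichever compiler is plugged in.
    boolean requestCompilation(String name, ToyJitCompiler compiler) {
        return compiler.compileMethod(name, this);
    }
}

class ToyGraal implements ToyJitCompiler {
    static final int HOT_THRESHOLD = 1000; // hypothetical value

    @Override public boolean compileMethod(String name, ToyVmServices vm) {
        MethodInfo info = vm.lookupMethod(name);
        if (info == null || info.invocationCount() < HOT_THRESHOLD) {
            return false; // profile says the method is not hot enough: decline
        }
        vm.installCode(name, "<machine code for " + name + ">"); // stand-in for code generation
        return true;
    }
}

class JvmciModelDemo {
    public static void main(String[] args) {
        ToyVm vm = new ToyVm();
        vm.methods.put("hot", new MethodInfo("hot", 5000));
        vm.methods.put("cold", new MethodInfo("cold", 3));
        ToyGraal graal = new ToyGraal();
        System.out.println(vm.requestCompilation("hot", graal));  // true
        System.out.println(vm.requestCompilation("cold", graal)); // false
        System.out.println(vm.codeCache.keySet());                // [hot]
    }
}
```

The point of the interface split is that `ToyVm` never depends on `ToyGraal` directly. Any other `ToyJitCompiler` could be plugged in, which is the kind of decoupling JVMCI aims for.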

Example of compile-time differences provided by Oracle

3.2 Substrate VM

Substrate VM provides a toolchain for statically compiling Java programs into native code. It consists of a compilation framework, static analysis tools, a C++ support framework, and runtime support. It converts bytecode into machine code before the program runs.

Advantages:

- Static reachability analysis starts from the specified compilation entry point, which keeps the scope of compilation under control and avoids code bloat;

- Many runtime optimizations are applied. For example, a traditional Java class is initialized on first use, and every later access must check whether it has already been initialized. GraalVM optimizes this by initializing such classes at compile time;

- No CPU time is spent on just-in-time compilation at run time, and the program performs well right from startup.
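The core idea behind static reachability analysis can be sketched as a worklist closure. This minimal sketch assumes the program is represented as a hypothetical call graph, a map from each method name to the methods it calls. Starting from the entry point, it collects every method that can be reached, and anything not reached would be left out of the native image. Substrate VM's real analysis is a much more elaborate points-to analysis over bytecode; this only shows the closure computation.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class Reachability {
    // Returns every method transitively reachable from `entry` in a (hypothetical) call graph.
    static Set<String> reachable(Map<String, List<String>> callGraph, String entry) {
        Set<String> seen = new LinkedHashSet<>();
        Deque<String> worklist = new ArrayDeque<>();
        worklist.push(entry);
        while (!worklist.isEmpty()) {
            String method = worklist.pop();
            if (!seen.add(method)) continue; // already processed
            for (String callee : callGraph.getOrDefault(method, List.of())) {
                if (!seen.contains(callee)) worklist.push(callee);
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        Map<String, List<String>> graph = Map.of(
            "main", List.of("parseArgs", "run"),
            "run", List.of("log"),
            "parseArgs", List.of(),
            "log", List.of(),
            "unusedHelper", List.of("log")); // never called from main
        Set<String> live = reachable(graph, "main");
        System.out.println(live.contains("unusedHelper")); // false: would be excluded from the image
        System.out.println(live.size());                    // 4
    }
}
```

Because only reachable methods are compiled, `unusedHelper` costs nothing in the final binary. This is the "controls the scope of compilation" advantage in miniature.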

Disadvantages:

- Static analysis is a resource-intensive calculation that consumes a lot of CPU, memory and time;

- Static analysis can handle reflection, JNI, and dynamic proxies only to a very limited extent, and GraalVM currently can only deal with them through additional configuration;

- Java serialization also relies on several dynamic features that violate the closed-world assumption: reflection, JNI, and dynamic class loading. GraalVM currently needs additional configuration for these as well. It cannot handle every form of serialization (for example, serializing lambda objects), and its serialization performance is about half that of the JDK.
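A small example shows why reflection is hard for a closed-world analysis. Here the class to instantiate is picked by a string known only at run time, so an analysis starting from `main` sees a call to `Class.forName(String)` but cannot tell which class must be kept in the image. The `Greeter` classes are hypothetical. In a native image, the target class would typically have to be registered through GraalVM's reflection configuration, which is the "additional configuration" mentioned above.

```java
interface Greeter { String greet(); }

// Hypothetical implementations; nothing in main() references them directly.
class EnglishGreeter implements Greeter { public String greet() { return "hello"; } }
class FrenchGreeter implements Greeter { public String greet() { return "bonjour"; } }

class ReflectionDemo {
    // The class to instantiate is chosen by a string known only at run time
    // (for example, read from a configuration file). A static reachability analysis
    // starting at main() sees Class.forName(String), not which class the string names.
    static Greeter load(String simpleName) {
        String pkg = ReflectionDemo.class.getPackageName();
        String fullName = pkg.isEmpty() ? simpleName : pkg + "." + simpleName;
        try {
            return (Greeter) Class.forName(fullName).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("cannot instantiate " + fullName, e);
        }
    }

    public static void main(String[] args) {
        String name = args.length > 0 ? args[0] : "FrenchGreeter";
        System.out.println(load(name).greet()); // prints "bonjour" by default
    }
}
```

On a regular JVM this works because classes are loaded lazily on demand. A closed-world native image has no such fallback, so a class that was never marked as reachable simply is not there.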

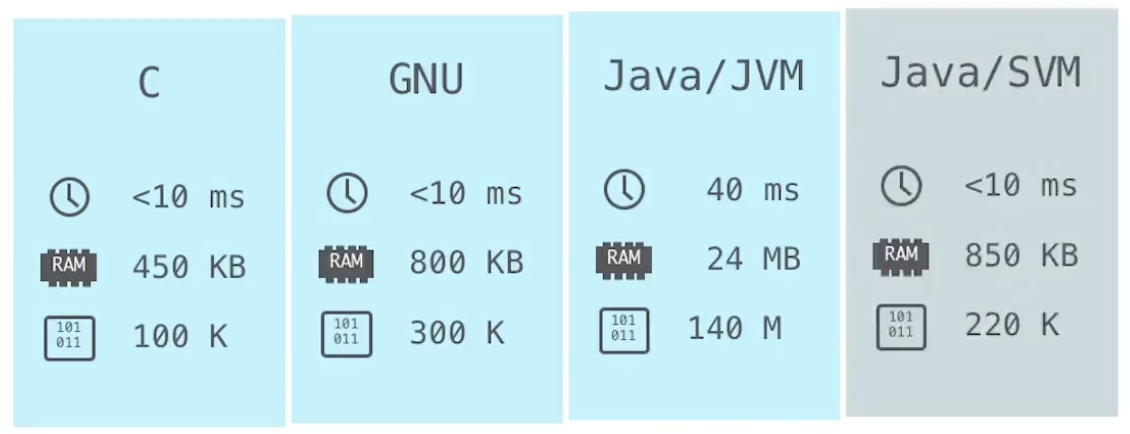

Startup time comparison

Memory usage comparison

3.3 Truffle

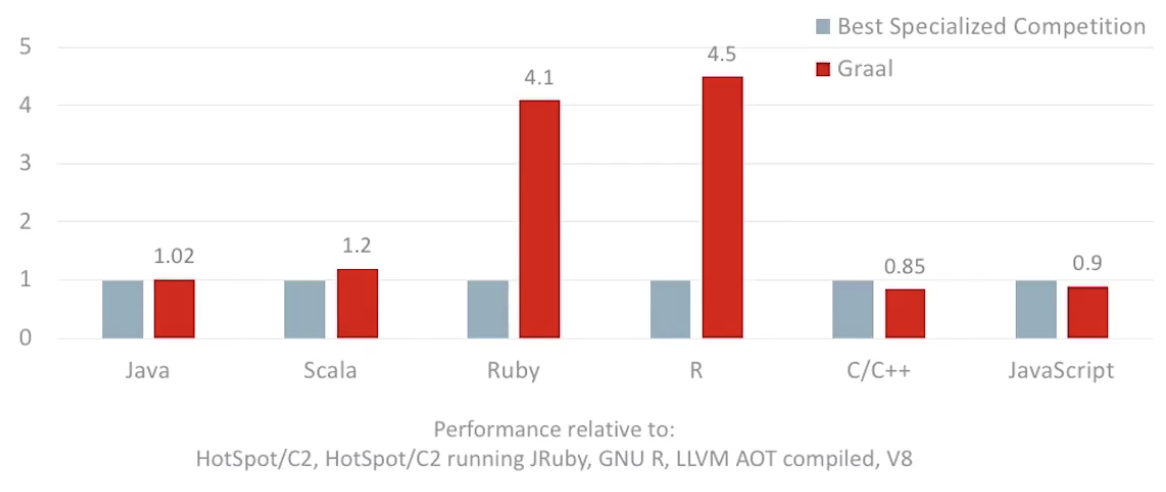

A compiler is generally divided into a front end and a back end. The front end handles lexical analysis, syntax analysis, type checking, and intermediate code generation. The back end handles optimization and target code generation. A shortcut is to compile a new language into an existing one. Scala and Kotlin, for example, compile to Java bytecode and so directly benefit from the JVM's JIT, GC, and other optimizations; these are compiled languages. In contrast, interpreted languages such as JavaScript, Ruby, R, and Python rely on an interpreter to parse and execute code. To make such languages run more efficiently, developers usually have to build a dedicated virtual machine with its own garbage collector, just-in-time compiler, and other components, as Google did with its V8 engine. If these languages could also run on the JVM and reuse its optimizations, much of this reinventing of the wheel could be avoided. That is the goal of the Truffle project.

Truffle is a framework, written in Java, for implementing language interpreters. Its API helps developers quickly build an interpreter in Java for any language they are interested in. Interpreters for JavaScript, Ruby, R, Python, and other languages have already been implemented and are actively maintained.

A language implementer only needs to write three pieces on top of Truffle: a lexer, a parser, and an interpreter for the abstract syntax tree (AST) that the parser produces. The language can then run on any Java virtual machine and benefit from the runtime optimizations the JVM provides.
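The three pieces can be illustrated with a toy language. This sketch assumes a hypothetical language of integer additions such as `1 + 2 + 3` and provides a lexer, a parser that builds an AST, and an AST interpreter in which each node evaluates itself. It does not use the Truffle API; in real Truffle code the nodes would extend Truffle's own node classes so the framework can optimize them.

```java
import java.util.ArrayList;
import java.util.List;

class TinyAddLang {
    // --- AST: every node knows how to evaluate itself ---
    interface Expr { long execute(); }
    record Num(long value) implements Expr { public long execute() { return value; } }
    record Add(Expr left, Expr right) implements Expr {
        public long execute() { return left.execute() + right.execute(); }
    }

    // --- Lexer: "12 + 3" -> ["12", "+", "3"] ---
    static List<String> lex(String src) {
        List<String> tokens = new ArrayList<>();
        int i = 0;
        while (i < src.length()) {
            char c = src.charAt(i);
            if (Character.isWhitespace(c)) {
                i++;
            } else if (c == '+') {
                tokens.add("+");
                i++;
            } else if (Character.isDigit(c)) {
                int start = i;
                while (i < src.length() && Character.isDigit(src.charAt(i))) i++;
                tokens.add(src.substring(start, i));
            } else {
                throw new IllegalArgumentException("unexpected character: " + c);
            }
        }
        return tokens;
    }

    // --- Parser: a left-associative sum of numbers ---
    static Expr parse(List<String> tokens) {
        if (tokens.isEmpty()) throw new IllegalArgumentException("empty program");
        Expr expr = new Num(Long.parseLong(tokens.get(0)));
        for (int i = 1; i < tokens.size(); i += 2) {
            if (!tokens.get(i).equals("+") || i + 1 >= tokens.size()) {
                throw new IllegalArgumentException("expected '+' followed by a number");
            }
            expr = new Add(expr, new Num(Long.parseLong(tokens.get(i + 1))));
        }
        return expr;
    }

    // Lex, parse, then interpret the resulting AST.
    static long run(String src) { return parse(lex(src)).execute(); }

    public static void main(String[] args) {
        System.out.println(run("1 + 2 + 3")); // 6
    }
}
```

Only `lex`, `parse`, and the `execute` methods are language-specific. In Truffle, making such an AST fast (JIT compilation, GC) is the framework's job rather than the language author's.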

GraalVM multilingual runtime performance speedup

3.3.1 Partial Evaluation

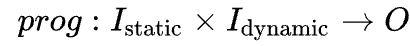

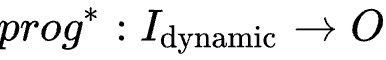

Truffle is built on the concept of partial evaluation. Suppose a program $\mathit{prog}$ converts an input $I$ into an output $O$, where the input consists of a static part and a dynamic part:

$$
\mathit{prog}: I \rightarrow O, \qquad I = I_{static} \cup I_{dynamic}
$$

Here $I_{static}$ is static data, a constant known at compile time, and $I_{dynamic}$ is data that is unknown at compile time. The program can then be expressed equivalently as

$$
\mathit{prog}^{*}: I_{dynamic} \rightarrow O
$$

The new program $\mathit{prog}^{*}$ is a specialization of $\mathit{prog}$ and should execute more efficiently than the original. The process of converting $\mathit{prog}$ into $\mathit{prog}^{*}$ is called partial evaluation. Truffle's pre-loaded interpreter can be regarded as $\mathit{prog}$, and a program written in a Truffle-implemented language as $I_{static}$. Partial evaluation then converts $\mathit{prog}$ into $\mathit{prog}^{*}$.
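Partial evaluation can be seen in isolation, independent of Truffle, with a classic textbook example: specializing `power(x, n)` for a fixed exponent. The exponent `n` plays the role of the static input and the base `x` the dynamic input. This plain-Java sketch represents the specialized program as a composed lambda. The loop over `n` runs once, at specialization time, and leaves a chain of `n` multiplications with no loop counter or bound check. A real partial evaluator such as Graal's would go further and emit straight-line machine code.

```java
import java.util.function.LongUnaryOperator;

class PowerSpecializer {
    // The general program: both x and n are dynamic inputs.
    static long power(long x, int n) {
        long result = 1;
        for (int i = 0; i < n; i++) result *= x;
        return result;
    }

    // Partial evaluation by hand: n is static, so the loop runs NOW (at specialization time)
    // and what remains is a residual function of x alone.
    static LongUnaryOperator specialize(int n) {
        LongUnaryOperator residual = x -> 1L;
        for (int i = 0; i < n; i++) {
            LongUnaryOperator prev = residual;
            residual = x -> prev.applyAsLong(x) * x;
        }
        return residual;
    }

    public static void main(String[] args) {
        LongUnaryOperator cube = specialize(3);   // specialized for n = 3: computes x * x * x
        System.out.println(cube.applyAsLong(5)); // 125
        System.out.println(power(5, 3));         // 125
    }
}
```

The specialization work is paid once per static input. After that, every call with a new dynamic input skips the loop logic entirely.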

The following explanation uses an official Oracle example: a program that reads its arguments and adds them together, in this case reading and adding three arguments.

Parsing this program produces the following AST:

sample = new Add(new Add(new Arg(0), new Arg(1)), new Arg(2));

Through successive method inlining, the partial evaluator eventually reduces this to code that directly reads the three arguments and adds them, with no tree traversal left.
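A runnable sketch of the interpreter behind this example follows. The names `Add` and `Arg` come from the AST line above. The `Node` base class, the fields, the `execute` method, and the `interpret`/`specialized` helpers are assumptions made for illustration. `specialized` is written by hand to show the shape of the residual code: once `sample` is treated as a compile-time constant and every `execute` call on it is inlined, only the array reads and additions remain.

```java
abstract class Node {
    abstract int execute(int[] args);
}

// Reads the argument at a fixed index.
class Arg extends Node {
    final int index;
    Arg(int index) { this.index = index; }
    int execute(int[] args) { return args[index]; }
}

// Adds the results of its two child nodes.
class Add extends Node {
    final Node left, right;
    Add(Node left, Node right) { this.left = left; this.right = right; }
    int execute(int[] args) { return left.execute(args) + right.execute(args); }
}

class PartialEvaluationDemo {
    // The AST for arg[0] + arg[1] + arg[2]; for the partial evaluator it is a constant.
    static final Node sample = new Add(new Add(new Arg(0), new Arg(1)), new Arg(2));

    // prog: the generic interpreter walks the tree on every call.
    static int interpret(int[] args) {
        return sample.execute(args);
    }

    // prog*: what remains after inlining every execute() call on the constant tree.
    static int specialized(int[] args) {
        return args[0] + args[1] + args[2];
    }

    public static void main(String[] args) {
        int[] input = {1, 2, 3};
        System.out.println(interpret(input));   // 6
        System.out.println(specialized(input)); // 6
    }
}
```

Both methods compute the same result. The difference is that `specialized` performs no virtual calls and no field loads of child nodes, which is exactly the overhead partial evaluation removes.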

3.3.2 Node rewriting

Node rewriting is another key optimization in Truffle.

In a dynamic language, the types of many variables can only be determined at run time. Take addition as an example: the symbol + may mean integer addition or floating-point addition. Truffle's language interpreters collect the operation type (the profile) observed at each AST node and optimize based on that profile at compile time. For example, if the profile shows that a given + has only ever performed integer addition, Truffle rewrites the AST during just-in-time compilation so that this + is treated as integer addition.
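Node rewriting can be sketched in plain Java. This minimal sketch assumes a hypothetical `AddNode` whose behavior is selected by a replaceable `state` field. The node starts uninitialized. The first time it sees two `Integer` operands, it specializes itself to integer addition. If a later call breaks that assumption, it rewrites itself to a generic state (here: double addition or string concatenation). Truffle achieves this by actually replacing nodes in the tree; the class and state names here are illustrative only.

```java
class AddNode {
    enum State { UNINITIALIZED, INT, GENERIC }

    State state = State.UNINITIALIZED;

    Object execute(Object a, Object b) {
        switch (state) {
            case UNINITIALIZED:
                // First execution: use the observed operand types (the profile) to specialize.
                state = (a instanceof Integer && b instanceof Integer) ? State.INT : State.GENERIC;
                return execute(a, b);
            case INT:
                if (a instanceof Integer x && b instanceof Integer y) {
                    return x + y; // fast path: plain integer addition
                }
                // Speculation failed: rewrite this node to the generic version and retry.
                state = State.GENERIC;
                return execute(a, b);
            default:
                return genericAdd(a, b);
        }
    }

    // Handles every operand combination, at the cost of type checks on each call.
    private static Object genericAdd(Object a, Object b) {
        if (a instanceof Integer x && b instanceof Integer y) return x + y;
        if (a instanceof Number x && b instanceof Number y) return x.doubleValue() + y.doubleValue();
        return String.valueOf(a) + b; // e.g. string concatenation
    }

    public static void main(String[] args) {
        AddNode node = new AddNode();
        System.out.println(node.execute(1, 2) + " " + node.state);   // 3 INT
        System.out.println(node.execute("a", 1) + " " + node.state); // a1 GENERIC
    }
}
```

The rewrite only goes in one direction (uninitialized, then int, then generic). This guarantees that a node stops changing after a bounded number of rewrites.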

Of course, such optimizations can turn out to be wrong. For example, the same + might turn out to be string concatenation rather than integer addition. If the AST has already been rewritten at that point, the compiled machine code must be discarded and execution falls back to interpreting the AST. The core of this kind of profile-based optimization is speculative optimization based on assumptions, together with deoptimization when an assumption fails.

After just-in-time compilation, the actual type at an AST node may turn out at run time to differ from the assumed type. In that case, Truffle actively calls the deoptimization API provided by the Graal compiler, returns to interpreting the AST node, and re-collects the node's type information. Truffle then uses the Graal compiler again for a new round of just-in-time compilation.
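The speculate, deoptimize, and recompile cycle described in this paragraph can be modeled as a small state machine. The sketch below is hypothetical and greatly simplified. `SpeculativeFunction` counts interpreted calls while collecting a type profile, and "compiles" once a threshold is reached, baking the profile into the compiled code as an assumption. On a type mismatch it discards the compiled code, resets the profile, and returns to the interpreter, so the next compilation reflects the newly observed types. The threshold of 3 is arbitrary, and nothing here corresponds to a real Graal or Truffle API.

```java
class SpeculativeFunction {
    static final int COMPILE_THRESHOLD = 3;   // hypothetical number of interpreted calls before compiling

    private int interpretedCalls = 0;
    private boolean sawOnlyInts = true;       // the collected type profile
    private boolean compiled = false;         // is "machine code" currently installed?
    private boolean speculateInts = false;    // assumption baked into the compiled code
    int compilations = 0;
    int deoptimizations = 0;

    Object call(Object a, Object b) {
        boolean ints = a instanceof Integer && b instanceof Integer;
        if (compiled) {
            if (!speculateInts) return add(a, b);        // generic compiled code: never deoptimizes
            if (ints) return (Integer) a + (Integer) b;  // speculative fast path
            // The assumption failed: discard the compiled code and restart profiling.
            compiled = false;
            deoptimizations++;
            interpretedCalls = 0;
            sawOnlyInts = true;
        }
        // Interpreter: general but slow; it also updates the profile.
        interpretedCalls++;
        sawOnlyInts &= ints;
        Object result = add(a, b);
        if (interpretedCalls >= COMPILE_THRESHOLD) {
            compiled = true;
            speculateInts = sawOnlyInts;  // compile according to what the profile says
            compilations++;
        }
        return result;
    }

    private static Object add(Object a, Object b) {
        if (a instanceof Integer x && b instanceof Integer y) return x + y;
        return String.valueOf(a) + b;
    }

    public static void main(String[] args) {
        SpeculativeFunction f = new SpeculativeFunction();
        for (int i = 0; i < 3; i++) f.call(i, 1);    // profile: ints only -> compiled speculatively
        System.out.println(f.call(2, 2));             // 4 (compiled fast path)
        System.out.println(f.call("x", 1));           // x1 (deoptimized, back in the interpreter)
        System.out.println(f.compilations + " " + f.deoptimizations); // 1 1
    }
}
```

After a deoptimization, the second compilation is based on a profile that includes the unexpected type. The new code is therefore generic and will not deoptimize again for the same reason.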

According to statistics, 80% of JavaScript and Ruby methods stabilize (their ASTs stop being rewritten) after 5 calls, 90% after 7 calls, and 99% after 19 calls.

3.4 Sulong

The Sulong subproject is GraalVM's high-performance runtime for LLVM bitcode, LLVM's intermediate representation. It is implemented as a bitcode interpreter on top of the Truffle framework. Sulong lets any language that can be compiled to LLVM bitcode (such as C and C++) run on the JVM.


Author: Wang Zihao
