SantaCoder is a language model with 1.1 billion parameters that can be used for code generation and completion suggestions in Python, Java, and JavaScript.

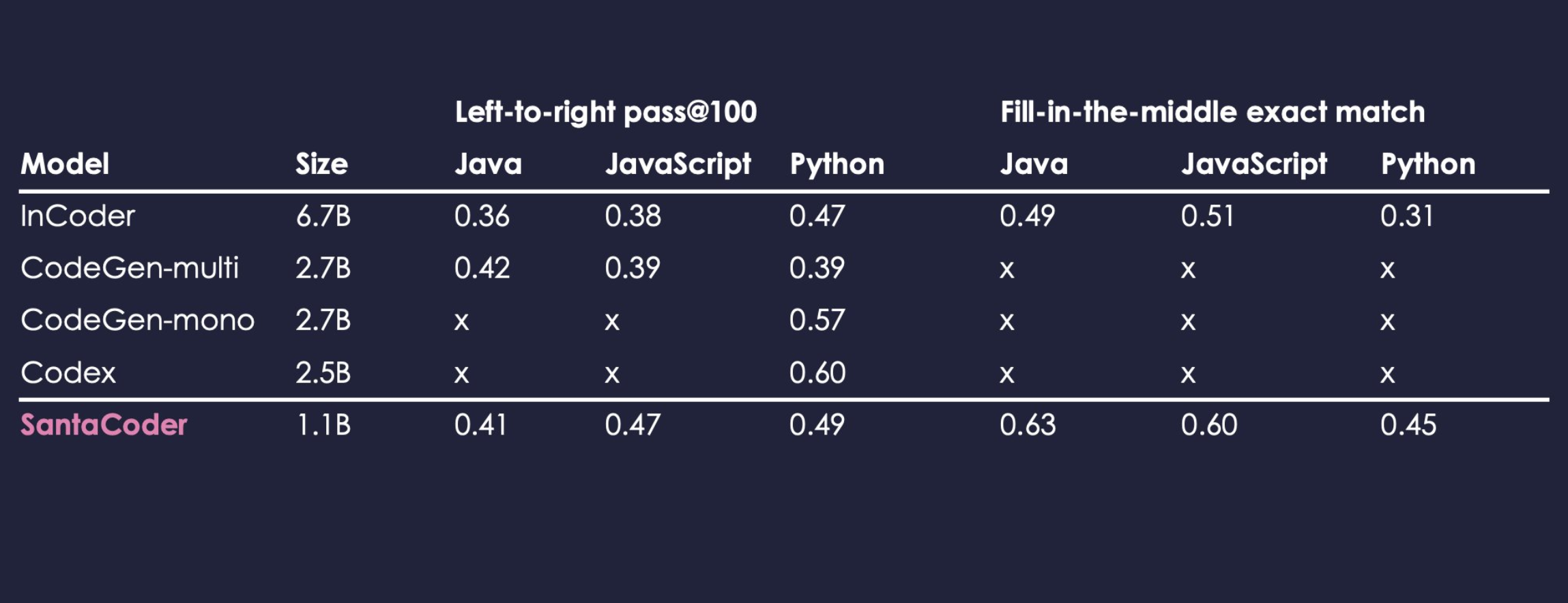

According to the official information, SantaCoder was trained on The Stack (v1.1) dataset. With only 1.1 billion parameters, SantaCoder is considerably smaller than InCoder (6.7 billion) or CodeGen-multi (2.7 billion), yet it is reported to perform much better than these larger multilingual models. Its parameter count is also far below that of GPT-3 and other very large language models with more than 100 billion parameters. The range of programming languages SantaCoder supports is relatively limited: only Python, Java, and JavaScript.

The model was trained on Python, Java, and JavaScript source code. The predominant natural language in that source code is English, although other languages are also present. The model can therefore generate code snippets given some context, but there is no guarantee that the generated code works as intended. It may be inefficient or contain bugs or exploits.
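Because generated code is not guaranteed to work, it helps to check a snippet before relying on it. The following is a minimal sketch of one way to do that, not part of SantaCoder itself: the helper `check_generated_code` and the sample strings `good`, `buggy`, and `broken` are hypothetical stand-ins for model output. It first checks that the snippet parses as Python, then runs it against a few expected input/output pairs.

```python
import ast


def check_generated_code(source, func_name, cases):
    """Return (ok, reason) for a generated Python snippet.

    `cases` is a list of (args_tuple, expected_result) pairs.
    This helper is illustrative and not part of any SantaCoder tooling.
    """
    # Step 1: reject snippets that are not valid Python at all.
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return False, f"syntax error: {exc.msg}"

    # Step 2: execute the snippet in a fresh namespace.
    # WARNING: exec runs arbitrary code. For untrusted model output,
    # run this inside a proper sandbox (container, separate process).
    namespace = {}
    try:
        exec(compile(tree, "<generated>", "exec"), namespace)
    except Exception as exc:
        return False, f"raised while defining: {exc!r}"

    func = namespace.get(func_name)
    if not callable(func):
        return False, f"{func_name} is not defined"

    # Step 3: compare actual results with the expected ones.
    for args, expected in cases:
        try:
            result = func(*args)
        except Exception as exc:
            return False, f"raised on {args!r}: {exc!r}"
        if result != expected:
            return False, f"{func_name}{args!r} returned {result!r}, expected {expected!r}"
    return True, "all cases passed"


# Hypothetical stand-ins for text returned by a code model.
good = "def add(a, b):\n    return a + b\n"
buggy = "def add(a, b):\n    return a - b\n"
broken = "def add(a, b)\n    return a + b\n"

cases = [((1, 2), 3), ((0, 0), 0)]
for label, snippet in [("good", good), ("buggy", buggy), ("broken", broken)]:
    ok, reason = check_generated_code(snippet, "add", cases)
    print(label, ok, reason)
```

A check like this only catches syntax errors and failures on the cases supplied. It does not detect inefficiency or security problems, so generated code still needs review.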
